An efficient way to review both code and blog posts: Introducing PR-Agent (Amazon Bedrock Claude 3)

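Hello. I'm @hoshino from the DBRE team.

The DBRE (Database Reliability Engineering) team works as a cross-functional organization: we solve database-related problems and build platforms that balance organizational agility with governance. DBRE is a relatively new concept. Few companies have a DBRE organization, and those that do often differ in what they work on and how they approach it, which makes it a still-developing and very interesting field.

For the background behind the founding of our DBRE team and its role, see our tech blog post "The Need for DBRE at KTC".

This article describes the improvements we saw after introducing PR-Agent into the repositories the DBRE team operates. It also explains how, by adjusting prompts, we used PR-Agent to review documents other than code (tech blog posts). I hope you find it useful.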

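What is PR-Agent?

PR-Agent is an open-source software (OSS) tool developed by Codium AI that aims to streamline the software development process and improve quality. Its main purpose is to automate the first-pass review of pull requests (PRs) and reduce the time developers spend on code review. Automation also means developers get feedback faster.

Another feature that sets it apart from other tools is the wide range of language models it can use.

PR-Agent has several features (commands), and developers choose which of them to apply to a PR.

The main features are as follows: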

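- Review
  - Evaluates code quality and points out problems
- Describe
  - Summarizes the changes in a pull request and generates a description automatically
- Improve
  - Suggests improvements to the code added or changed in a pull request
- Ask
  - Lets you converse with the AI in PR comments to answer questions about the PR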

For details, see the official documentation.
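As a rough illustration of how these commands are used: assuming the PR-Agent workflow also runs on PR comments (`issue_comment` events), a command is triggered by posting a comment such as `/review` or `/ask "..."` on the PR. The bash helper below is a hypothetical sketch (`pr_agent_comment` is our own name, not part of PR-Agent) that builds such a comment body and rejects unknown commands.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of PR-Agent): build the PR comment body
# that triggers one of the PR-Agent commands listed above.
set -euo pipefail

pr_agent_comment() {
  local cmd=${1:-}
  shift || true
  case "$cmd" in
    review | describe | improve)
      printf '/%s\n' "$cmd"
      ;;
    ask)
      # /ask needs the question itself
      if [ "$#" -eq 0 ]; then
        echo "usage: pr_agent_comment ask <question>" >&2
        return 1
      fi
      printf '/ask "%s"\n' "$*"
      ;;
    *)
      echo "unknown command: ${cmd}" >&2
      return 1
      ;;
  esac
}

pr_agent_comment review
pr_agent_comment ask "Why was this index added?"
```

To actually post the comment, the GitHub CLI can be used, for example `gh pr comment 123 --body "$(pr_agent_comment review)"`, where `123` is a placeholder PR number.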

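Why we introduced PR-Agent

The DBRE team had been running a proof of concept (PoC) for an AI-based schema review system. As part of that work, we evaluated tools that offer review features from the following perspectives: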

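- Input
  - Can the tool review schemas based on KTC's database schema design guidelines?
  - Can we customize the input to the LLM (e.g., by incorporating chains or custom functions) to improve answer accuracy?
- Output
  - To output review results to GitHub, can the following be achieved based on the LLM's output?
    - Can a review be triggered by a PR?
    - Can the tool comment on the PR?
    - Can the tool post the generative AI's output as comments on the code (schema information) in the PR?
    - Can the tool propose fixes at the code level?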

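The evaluation turned up no tool that fully met our input requirements.

However, during the evaluation, someone proposed experimentally introducing one of the AI review tools we had tested within the DBRE team, and we ultimately adopted PR-Agent.

Among the tools we evaluated, we chose PR-Agent mainly for the following reasons: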

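- It is open-source software (OSS)
  - It can be introduced at low cost
- It supports a variety of language models
  - We can choose the language model that best fits our needs
- It is easy to introduce and customize
  - Setup is relatively easy, and its flexible configuration lets us optimize it for our team's specific requirements and workflows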

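This time we used Amazon Bedrock, for the following reasons:

- KTC mainly uses AWS, so we decided to start with Bedrock, which we could adopt quickly
- Using Claude 3 Sonnet instead of OpenAI's GPT-4 cuts monetary cost to roughly one-tenth

For these reasons, we introduced PR-Agent into the DBRE team's repositories.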

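Customizations made when introducing PR-Agent

We basically followed the steps in the official documentation. This article focuses on the parts we customized.

Using Amazon Bedrock Claude 3

We use Amazon Bedrock Claude 3 Sonnet as the language model. The official documentation recommends authenticating with access keys, but to comply with our internal security rules, we instead authenticate by assuming an IAM role specified by its ARN.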

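```yaml
- name: Input AWS Credentials
  uses: aws-actions/configure-aws-credentials@v4
  with:
    # Assume an IAM role by ARN instead of using access keys.
    # Without access keys, the role is assumed through GitHub's OIDC provider,
    # so the job needs "permissions: id-token: write".
    role-to-assume: ${{ secrets.AWS_ROLE_ARN_PR_REVIEW }}
    aws-region: ${{ secrets.AWS_REGION_PR_REVIEW }}
```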

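Managing prompts in the GitHub Wiki

The DBRE team operates multiple repositories, so we needed a single place to manage the prompts they refer to. We also wanted, right after introducing PR-Agent, an environment where team members could easily edit prompts and tune them.

That led us to consider the GitHub Wiki.

The GitHub Wiki keeps a change history and anyone can edit it easily, so we expected it to let team members change prompts with little effort.

In PR-Agent, you can give each feature, such as describe, additional instructions through the extra_instructions setting, which can be set from GitHub Actions (see the official documentation).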

```toml
# Excerpt from configuration.toml

[pr_reviewer] # /review #
extra_instructions = "" # additional instructions go here

[pr_description] # /describe #
extra_instructions = ""

[pr_code_suggestions] # /improve #
extra_instructions = ""
```
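The env keys used in the GitHub Actions step later in this article (for example `PR_REVIEWER.extra_instructions` and `PR_CODE_SUGGESTIONS_PROMPT.system`) appear to follow the pattern `UPPERCASED_SECTION.key` for a `configuration.toml` section. The sketch below derives those keys from a command name. The helper names are our own, and the pattern is inferred from the examples in this article rather than taken from PR-Agent's documentation.

```bash
#!/usr/bin/env bash
# Sketch: derive the env key for a configuration.toml setting, following the
# SECTION.key pattern seen in this article's workflow (an inferred convention).
set -euo pipefail

# Map a PR-Agent command to its configuration.toml section (from the excerpt above)
section_for_command() {
  case "$1" in
    review) echo "pr_reviewer" ;;
    describe) echo "pr_description" ;;
    improve) echo "pr_code_suggestions" ;;
    *) return 1 ;;
  esac
}

# Upper-case the section name and append ".<key>"
pr_agent_env_key() {
  local section=$1
  local key=$2
  printf '%s.%s\n' "$(printf '%s' "$section" | tr '[:lower:]' '[:upper:]')" "$key"
}

for cmd in review describe improve; do
  pr_agent_env_key "$(section_for_command "$cmd")" extra_instructions
done
```

Bash variable names cannot contain dots, so keys like these cannot be set with `export`. For a local experiment they should still be passable to a process with `env`, e.g. `env 'PR_REVIEWER.extra_instructions=Reply in Japanese.' some_command`, where `some_command` is a placeholder.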

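We therefore customized the GitHub Actions workflow that runs PR-Agent so that it reads the prompts written in the GitHub Wiki and passes them in dynamically, through variables, as additional instructions.

The setup steps are as follows.

First, issue a token from any GitHub account and clone the Wiki repository in GitHub Actions.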

```yaml
- name: Checkout the Wiki repository
  uses: actions/checkout@v4
  with:
    ref: main # specify any branch (GitHub's default is master)
    repository: {repo}/{path}.wiki
    path: wiki
    token: ${{ secrets.GITHUB_TOKEN_HOGE }}
```

Next, store the Wiki content in environment variables: read each file and set its prompt as an environment variable.

```yaml
- name: Set up Wiki Info
  id: wiki_info
  run: |
    # Append a file's contents to $GITHUB_ENV as a multiline variable
    set_env_var_from_file() {
      local var_name=$1
      local file_path=$2
      local prompt=$(cat "$file_path")
      echo "${var_name}<<EOF" >> "$GITHUB_ENV"
      echo "$prompt" >> "$GITHUB_ENV"
      echo "EOF" >> "$GITHUB_ENV"
    }
    set_env_var_from_file "REVIEW_PROMPT" "./wiki/pr-agent-review-prompt.md"
    set_env_var_from_file "DESCRIBE_PROMPT" "./wiki/pr-agent-describe-prompt.md"
    set_env_var_from_file "IMPROVE_PROMPT" "./wiki/pr-agent-improve-prompt.md"
```
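Before wiring this into the workflow, the step can be dry-run locally by pointing `GITHUB_ENV` at a temporary file. The sketch below does that with a variant of `set_env_var_from_file` that uses a random delimiter instead of a fixed `EOF`: GitHub's documentation warns that the delimiter must not appear on its own line inside the value, and a prompt edited freely on a Wiki could contain such a line. The sample prompt text and temporary paths are made up for illustration.

```bash
#!/usr/bin/env bash
# Local dry run of the "Set up Wiki Info" step: GITHUB_ENV points at a temp
# file instead of the runner's file. Sample prompt and paths are made up.
set -euo pipefail

set_env_var_from_file() {
  local var_name=$1
  local file_path=$2
  local prompt
  prompt=$(cat "$file_path")
  # Random delimiter so a literal "EOF" line in the prompt cannot end the value early
  local delimiter
  delimiter="EOF_$(od -An -N8 -tx1 /dev/urandom | tr -d ' \n')"
  {
    printf '%s<<%s\n' "$var_name" "$delimiter"
    printf '%s\n' "$prompt"
    printf '%s\n' "$delimiter"
  } >> "$GITHUB_ENV"
}

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
export GITHUB_ENV="$workdir/github_env"
mkdir -p "$workdir/wiki"
# The sample deliberately contains a line that is exactly "EOF"
printf 'Review in Japanese.\nEOF\nFocus on index design.\n' > "$workdir/wiki/pr-agent-review-prompt.md"

set_env_var_from_file "REVIEW_PROMPT" "$workdir/wiki/pr-agent-review-prompt.md"
cat "$GITHUB_ENV"
```

With the fixed `EOF` delimiter used in the step above, the same sample would end the variable at its second line; the random delimiter keeps all three lines inside `REVIEW_PROMPT`.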

Finally, configure the PR-Agent action step, which reads each prompt from the environment variables.

```yaml
- name: PR Agent action step
  id: pragent
  uses: Codium-ai/pr-agent@main
  env:
    # model settings
    CONFIG.MODEL: bedrock/anthropic.claude-3-sonnet-20240229-v1:0
    CONFIG.MODEL_TURBO: bedrock/anthropic.claude-3-sonnet-20240229-v1:0
    CONFIG.FALLBACK_MODEL: bedrock/anthropic.claude-v2:1
    LITELLM.DROP_PARAMS: true
    GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
    AWS.BEDROCK_REGION: us-west-2
    # PR_AGENT settings (/review)
    PR_REVIEWER.extra_instructions: |
      ${{env.REVIEW_PROMPT}}
    # PR_DESCRIPTION settings (/describe)
    PR_DESCRIPTION.extra_instructions: |
      ${{env.DESCRIBE_PROMPT}}
    # PR_CODE_SUGGESTIONS settings (/improve)
    PR_CODE_SUGGESTIONS.extra_instructions: |
      ${{env.IMPROVE_PROMPT}}
```

With these steps, the prompts written in the Wiki are passed to PR-Agent when it runs.

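What we did to extend reviews to tech blog posts

Our tech blog is managed in a Git repository, so someone asked whether PR-Agent could review blog posts the same way.

PR-Agent is normally a tool specialized for code review. When we tried it on a blog post, the Describe and Review features worked to some extent, but Improve kept answering "No code suggestions found for PR." even after we adjusted the prompt (extra_instructions). (This may be because the tool was built for reviewing code.)

We then tested whether customizing the Improve feature's system prompt would make reviews possible. The generative AI did return suggestions, so we decided to customize the system prompt as well.

A system prompt is a prompt passed to an LLM separately from the user prompt when invoking it. It also contains concrete instructions, such as which items to output and in what format.

The extra_instructions described earlier are part of the system prompt: when the user supplies additional instructions, PR-Agent appears to insert them into the system prompt.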

```toml
# Excerpt from the Improve system prompt
[pr_code_suggestions_prompt]
system="""You are PR-Reviewer, a language model that specializes in suggesting ways to improve for a Pull Request (PR) code.
Your task is to provide meaningful and actionable code suggestions, to improve the new code presented in a PR diff.

...(omitted)...

{%- if extra_instructions %}

Extra instructions from the user, that should be taken into account with high priority:
======
{{ extra_instructions }} # <- the contents of extra_instructions are inserted here
======
{%- endif %}

...(omitted)...
```
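To make the behavior of that template block concrete, here is a rough bash imitation of it. This is not PR-Agent's code: PR-Agent renders the template with Jinja2, and whitespace handling such as `{%-` differs. `render_system_prompt` is a hypothetical name, and the sample strings are placeholders.

```bash
#!/usr/bin/env bash
# Rough imitation of the {%- if extra_instructions %} block above:
# the extra-instructions section is appended only when it is non-empty.
set -euo pipefail

render_system_prompt() {
  local base_prompt=$1
  local extra_instructions=${2:-}
  printf '%s\n' "$base_prompt"
  if [ -n "$extra_instructions" ]; then
    printf '\n%s\n' 'Extra instructions from the user, that should be taken into account with high priority:'
    printf '======\n%s\n======\n' "$extra_instructions"
  fi
}

echo "--- without extra_instructions ---"
render_system_prompt "You are PR-Reviewer, ..."
echo "--- with extra_instructions ---"
render_system_prompt "You are PR-Reviewer, ..." "Write all comments in Japanese."
```

Because the user's text lands inside the system prompt under "high priority", anything written on the Wiki page effectively becomes part of the model's core instructions for that command.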

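As with extra_instructions, PR-Agent lets you edit the system prompt from GitHub Actions.

By customizing the existing system prompt, we eventually made it possible to review not only code but also prose.

Here are some parts of our customization.

First, we changed the code-specific instructions so that the model reviews tech blog posts instead.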

System prompt before customization

```
You are PR-Reviewer, a language model that specializes in suggesting ways to improve for a Pull Request (PR) code.
Your task is to provide meaningful and actionable code suggestions, to improve the new code presented in a PR diff.
```

System prompt after customization

```
You are a reviewer for an IT company’s tech blog.
Your role is to review the contents of .md files in terms of the following.
Please check each item indicated as a check point of view and point out any problems.
```

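Next, we changed the part containing the specific instructions so that tech blog posts can be reviewed.

Changing the instructions about the output format would also affect the program side that processes the output, so our customization only replaces the code-review wording with text-review wording and leaves the output instructions intact.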

System prompt before customization

```
Specific instructions for generating code suggestions:
- Provide up to {{ num_code_suggestions }} code suggestions. The suggestions should be diverse and insightful.
- The suggestions should focus on ways to improve the new code in the PR, meaning focusing on lines from '__new hunk__' sections, starting with '+'. Use the '__old hunk__' sections to understand the context of the code changes.
- Prioritize suggestions that address possible issues, major problems, and bugs in the PR code.
- Don't suggest to add docstring, type hints, or comments, or to remove unused imports.
- Suggestions should not repeat code already present in the '__new hunk__' sections.
- Provide the exact line numbers range (inclusive) for each suggestion. Use the line numbers from the '__new hunk__' sections.
- When quoting variables or names from the code, use backticks (`) instead of single quote (').
- Take into account that you are reviewing a PR code diff, and that the entire codebase is not available for you as context. Hence, avoid suggestions that might conflict with unseen parts of the codebase.
```

System prompt after customization

```
Specific instructions for generating text suggestions:
- Provide up to {{ num_code_suggestions }} text suggestions. The suggestions should be diverse and insightful.
- The suggestions should focus on ways to improve the new text in the PR, meaning focusing on lines from '__new hunk__' sections, starting with '+'. Use the '__old hunk__' sections to understand the context of the code changes.
- Prioritize suggestions that address possible issues, major problems, and bugs in the PR text.
- Don't suggest to add docstring, type hints, or comments, or to remove unused imports.
- Suggestions should not repeat text already present in the '__new hunk__' sections.
- Provide the exact line numbers range (inclusive) for each suggestion. Use the line numbers from the '__new hunk__' sections.
- When quoting variables or names from the text, use backticks (`) instead of single quote (').
```
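Comparing the two versions, the change consists of a handful of word swaps ("code" to "text" in specific phrases) plus dropping the last bullet; the `{{ num_code_suggestions }}` variable and the line-number instructions stay untouched. If the upstream prompt changes, one way to re-apply the same edits mechanically is a small sed filter like the hypothetical `to_text_review` below. This is our own illustration of the diff, not how the customized prompt was actually produced.

```bash
#!/usr/bin/env bash
# Hypothetical filter: re-apply the code -> text edits seen between the two
# prompt versions above. Template variables such as {{ num_code_suggestions }}
# are deliberately left alone (they are preceded by "_", not a space).
set -euo pipefail

to_text_review() {
  sed \
    -e 's/ code suggestions/ text suggestions/g' \
    -e 's/the new code in the PR/the new text in the PR/' \
    -e 's/in the PR code\./in the PR text./' \
    -e 's/repeat code already present/repeat text already present/' \
    -e 's/names from the code,/names from the text,/' \
    -e '/^- Take into account that you are reviewing a PR code diff/d'
}

printf '%s\n' 'Specific instructions for generating code suggestions:' | to_text_review
```

A filter like this breaks silently if the upstream wording changes, so diffing its output against the previous customized prompt before updating the Wiki would still be worthwhile.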

Then, following the same steps as in "Managing prompts in the GitHub Wiki" above, add a new Wiki page for the system prompt.

```diff
 - name: Set up Wiki Info
   id: wiki_info
   run: |
     set_env_var_from_file() {
       local var_name=$1
       local file_path=$2
       local prompt=$(cat "$file_path")
       echo "${var_name}<<EOF" >> "$GITHUB_ENV"
       echo "$prompt" >> "$GITHUB_ENV"
       echo "EOF" >> "$GITHUB_ENV"
     }
     set_env_var_from_file "REVIEW_PROMPT" "./wiki/pr-agent-review-prompt.md"
     set_env_var_from_file "DESCRIBE_PROMPT" "./wiki/pr-agent-describe-prompt.md"
     set_env_var_from_file "IMPROVE_PROMPT" "./wiki/pr-agent-improve-prompt.md"
+    set_env_var_from_file "IMPROVE_SYSTEM_PROMPT" "./wiki/pr-agent-improve-system-prompt.md"

 - name: PR Agent action step
   # ...(omitted)...
+    PR_CODE_SUGGESTIONS_PROMPT.system: |
+      ${{env.IMPROVE_SYSTEM_PROMPT}}
```
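Because the program side depends on parts of this prompt, a pre-flight check in the workflow can catch accidental edits on the Wiki before PR-Agent runs. The sketch below is a hypothetical `check_system_prompt` function. The list of required markers is an assumption based only on the template pieces visible in the excerpts in this article (and assumes the extra_instructions block is kept in the customized prompt); the real template may contain others worth checking.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: fail if a customized system prompt lost
# template pieces visible in the excerpts above (marker list is an assumption).
set -euo pipefail

check_system_prompt() {
  local file=$1
  local missing=0
  local marker
  for marker in '{{ num_code_suggestions }}' '{%- if extra_instructions %}' '{{ extra_instructions }}' '{%- endif %}'; do
    # -F: match the marker literally, not as a regular expression
    if ! grep -qF -- "$marker" "$file"; then
      echo "missing template marker: $marker" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example use inside the "Set up Wiki Info" step, before exporting the prompt:
# check_system_prompt "./wiki/pr-agent-improve-system-prompt.md"
```

Since the step runs with bash's default `-e` behavior on GitHub-hosted runners, a non-zero return from this check should stop the job before a broken prompt reaches PR-Agent.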

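With these customizations, PR-Agent's Improve feature, which is normally specialized for code review, can also handle blog post reviews.

Note that changing the system prompt does not guarantee the expected answer 100% of the time. The same is true when using Improve on program code.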

Results of introducing PR-Agent

Introducing PR-Agent brought the following benefits:

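- Improved review accuracy
  - It points out things we tend to overlook, which has made our code reviews more accurate
  - It can also review closed PRs, so we can revisit past code
  - Reviewing past PRs also contributes to continuous quality improvement and a better codebase
- Less effort to create pull requests (PRs)
  - The PR summary feature reduced the effort of writing pull requests
  - Reviewers check the generated summary, which made reviews more efficient and shortened the time to merge
- Improved engineering skills
  - Technology advances very quickly, and keeping up while doing day-to-day work is hard
  - The AI's comments were very effective for learning best practices
- Tech blog reviews
  - Using PR-Agent on the tech blog reduced the review burden. It is not perfect, but it checks for typos, omissions, and grammar errors and points out problems with consistency and logic, so it catches mistakes that are easy to overlook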

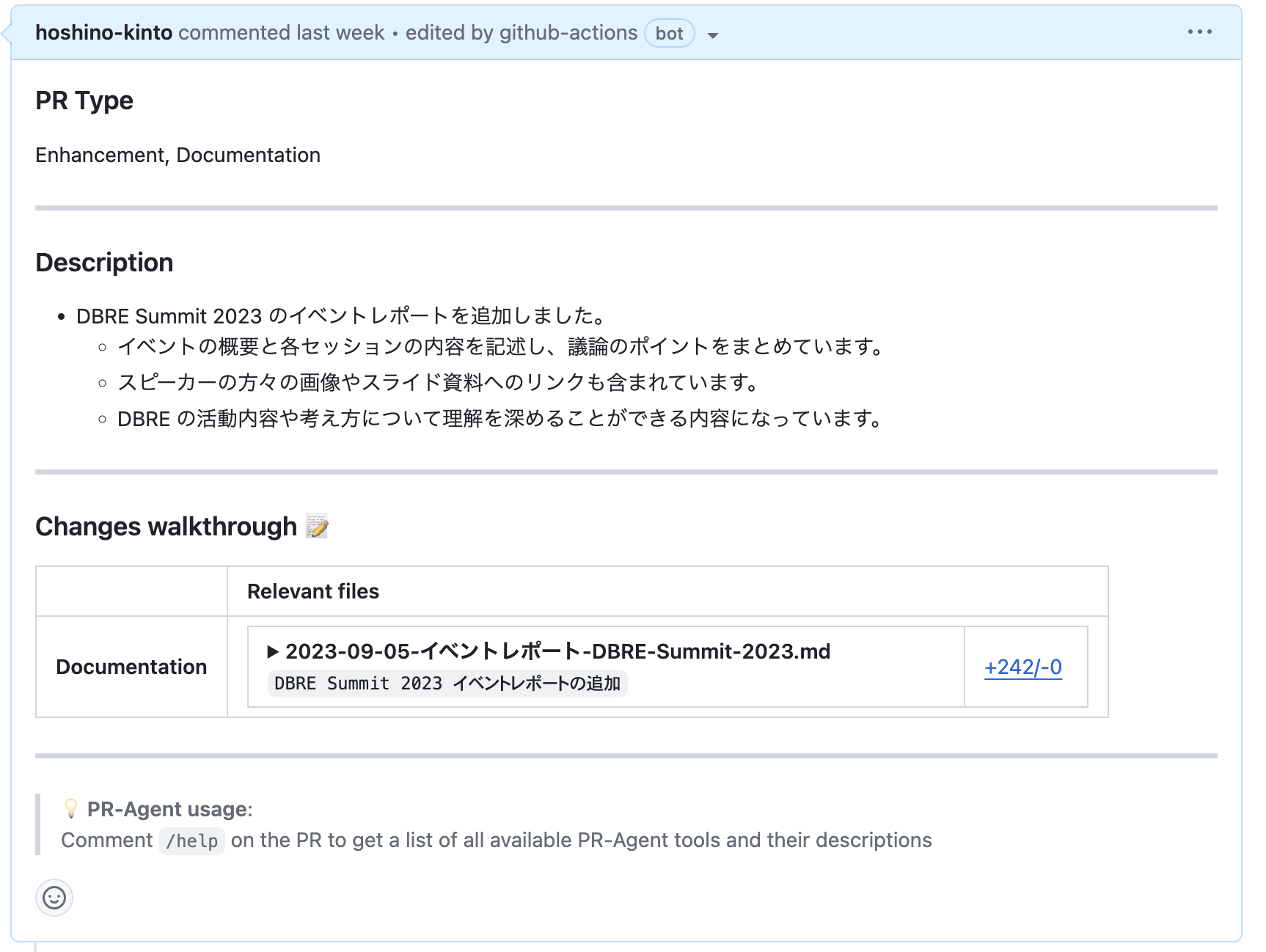

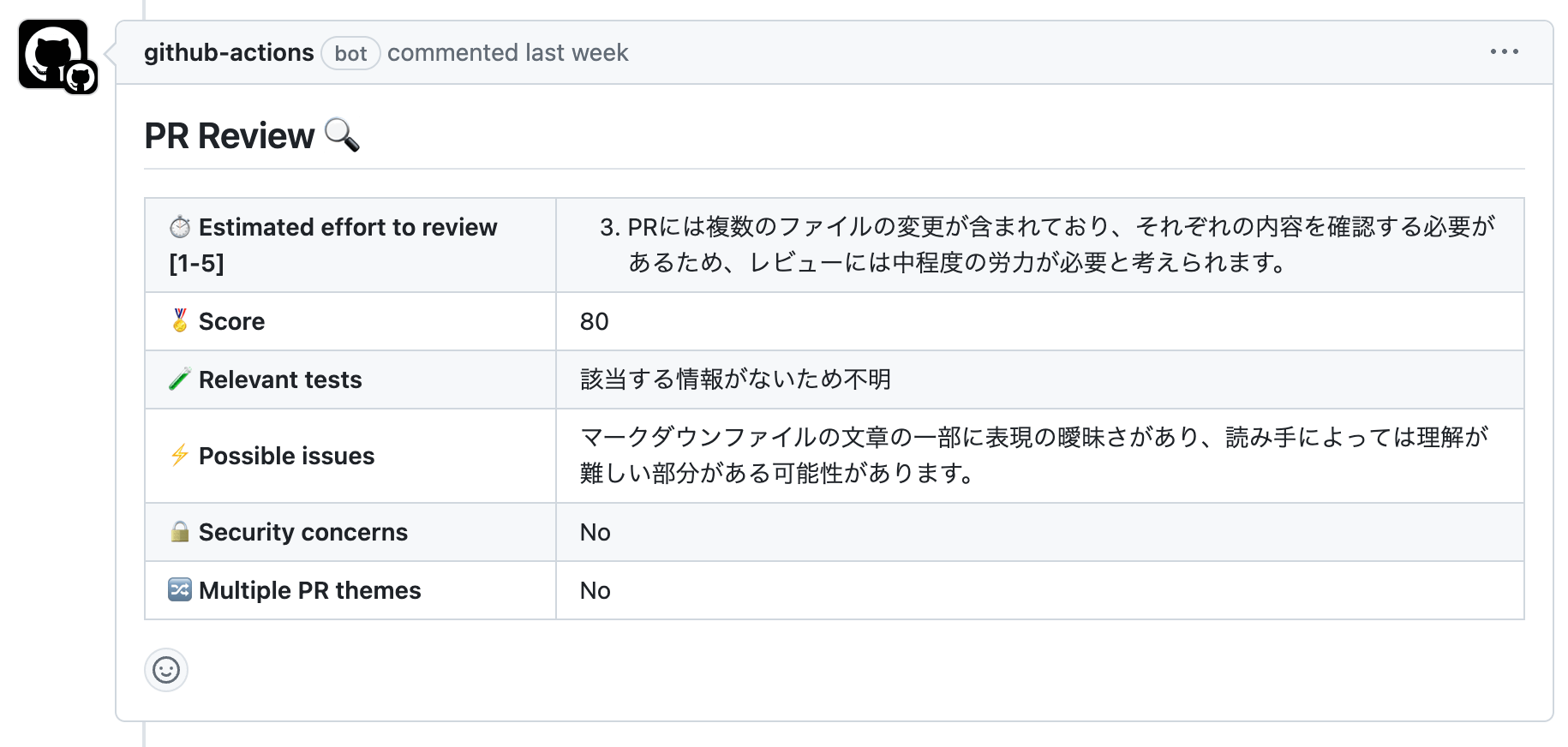

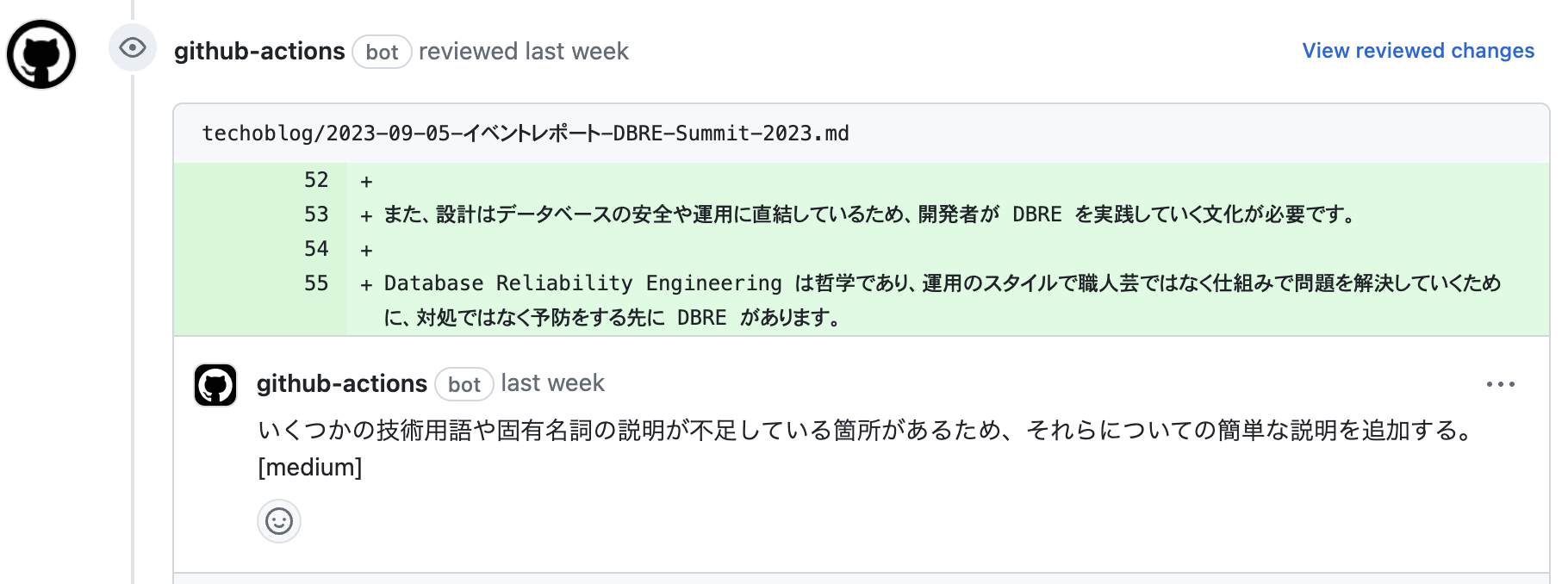

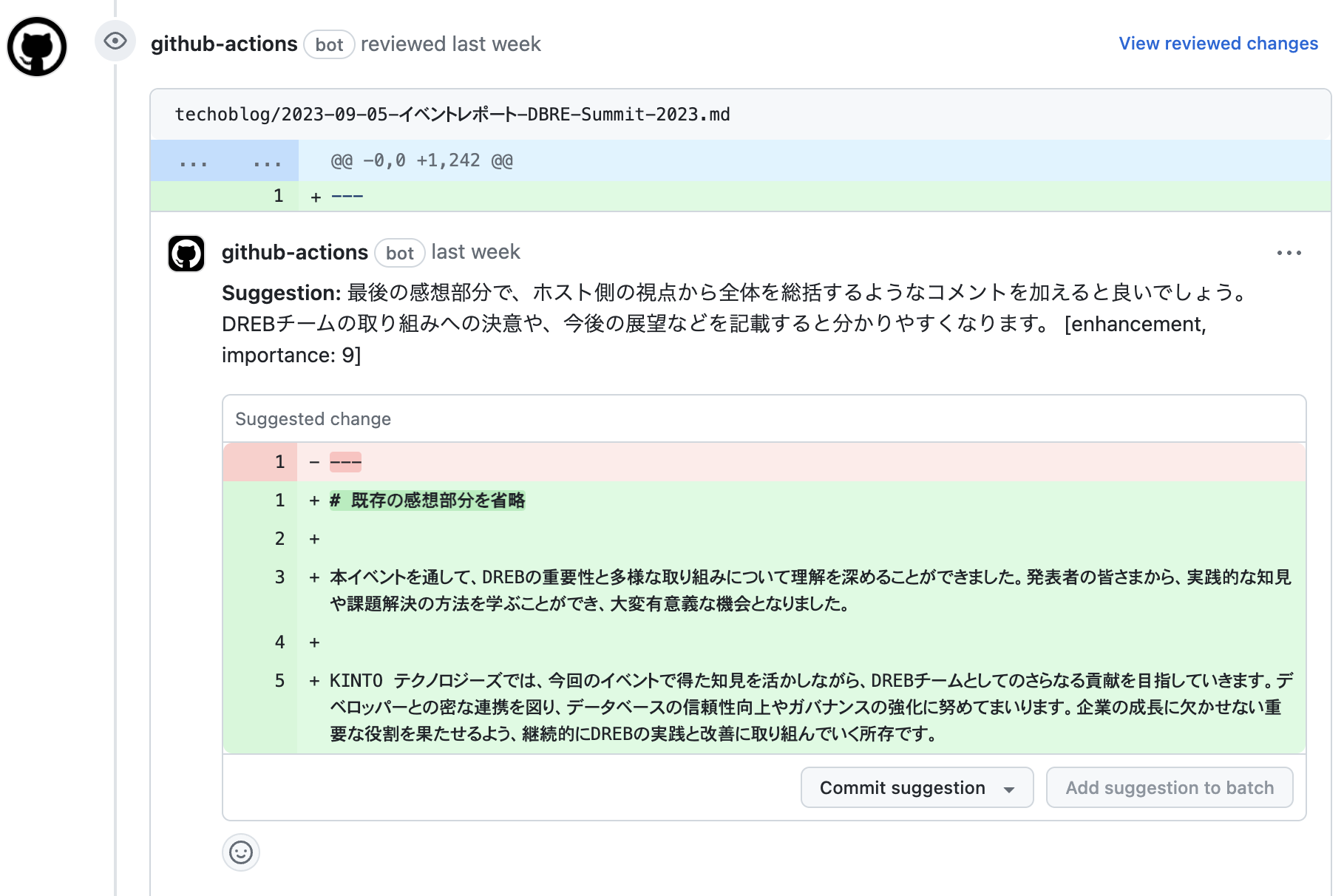

Below is an example of reviewing an actual tech blog post (the event report on DBRE Summit 2023).

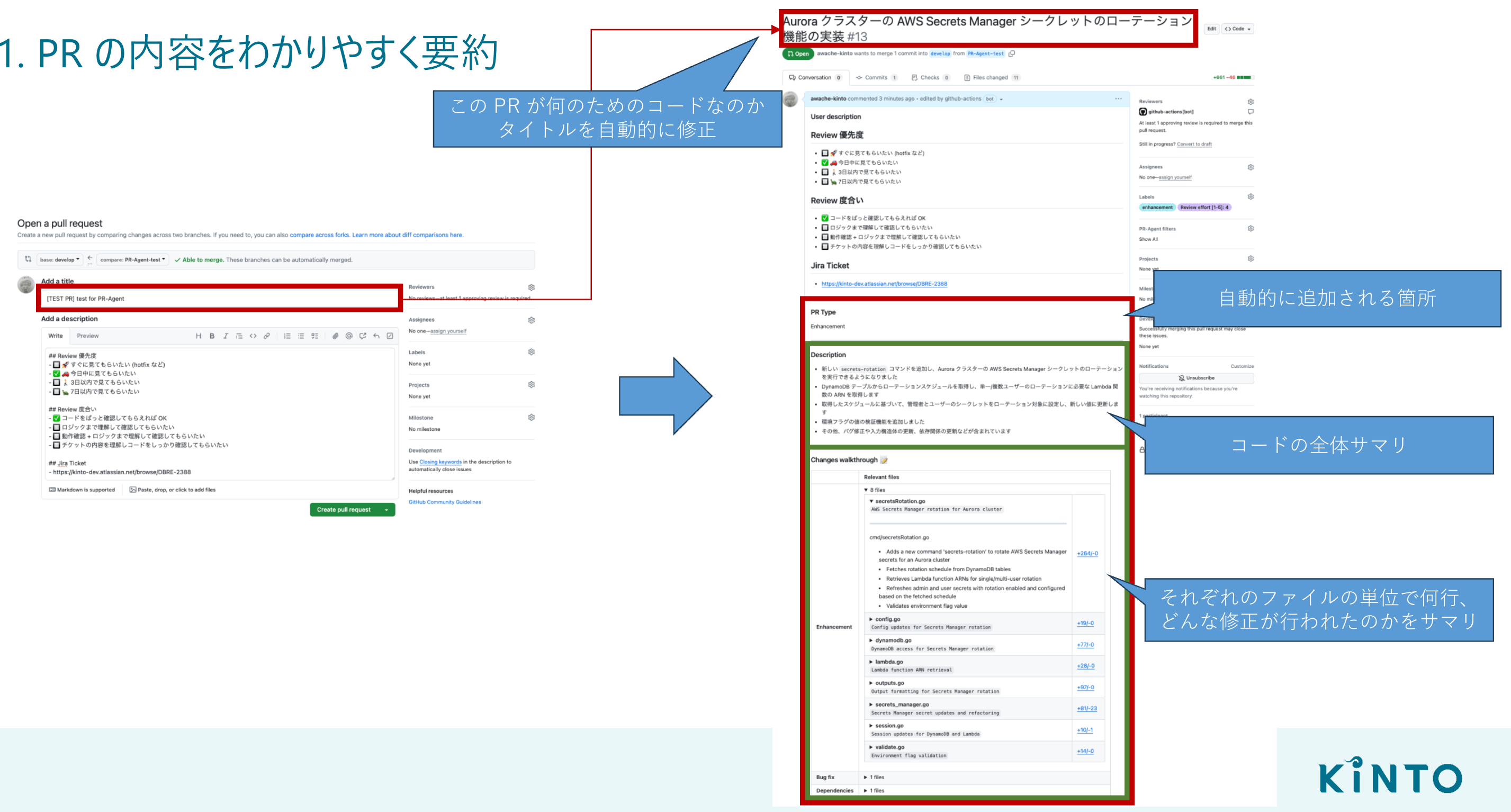

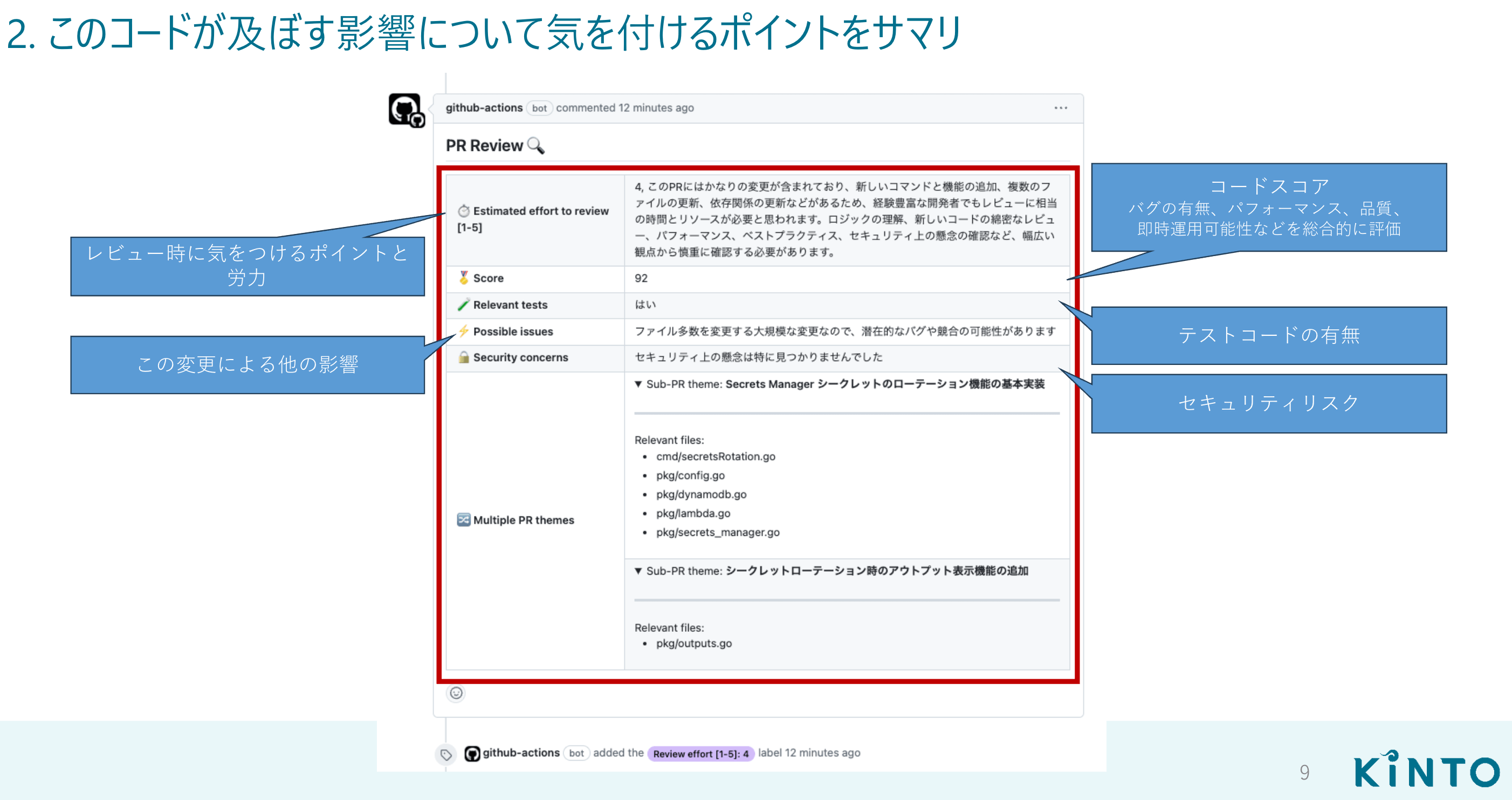

Pull request (PR) summary of a tech blog post by PR-Agent (Describe)

Pull request (PR) review of a tech blog post by PR-Agent (Review)

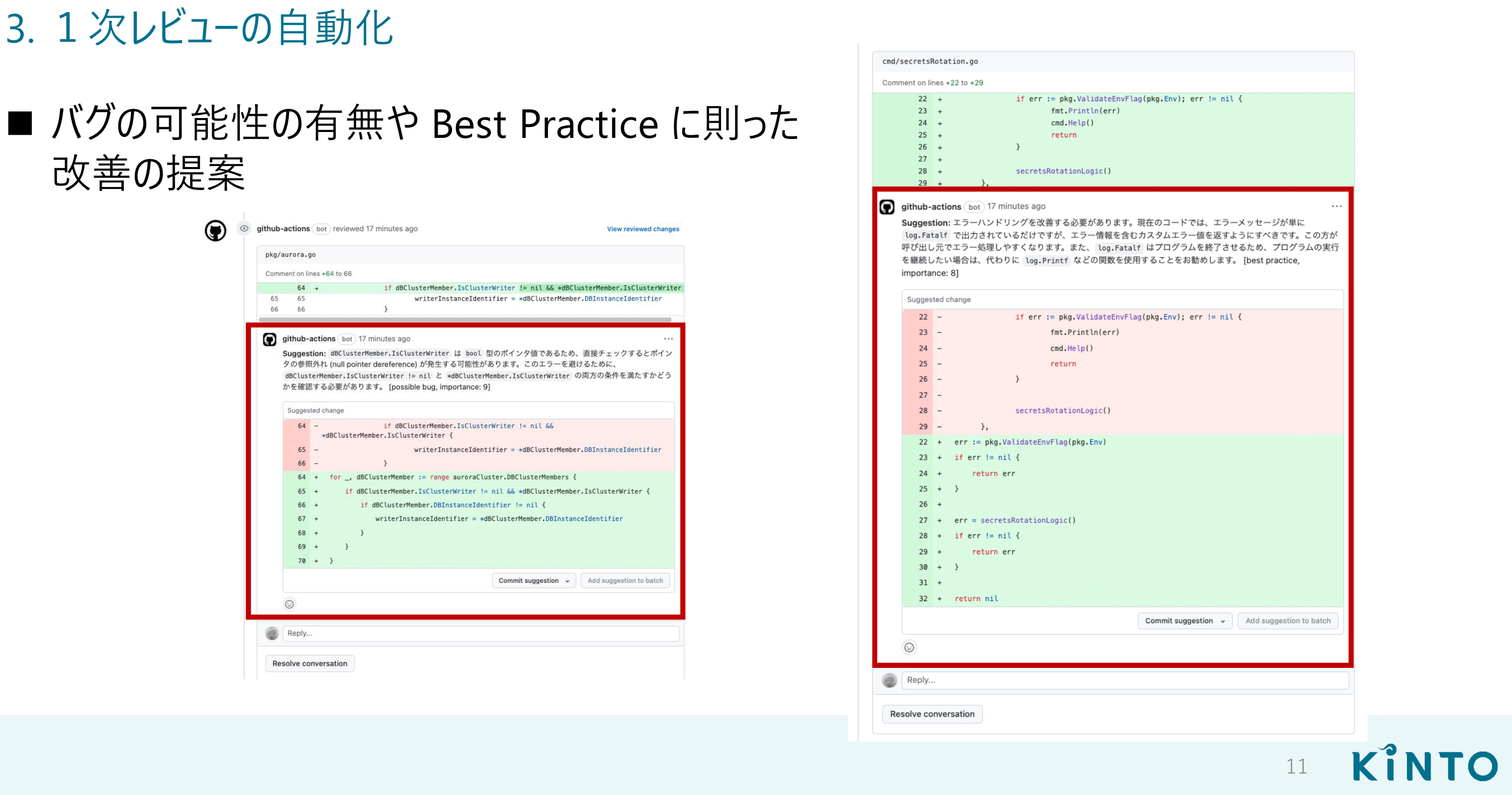

Suggested changes to a tech blog post by PR-Agent (Improve)

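Note, however, that final decisions should be made by humans, for the following reasons:

- PR-Agent's review results differ from run to run even for the exact same pull request (PR), so answer accuracy varies
- PR-Agent sometimes produces feedback that is only loosely relevant or entirely off the mark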

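Summary

This article described how introducing and customizing PR-Agent improved our work efficiency. Fully automated reviews are not yet within reach, but with the right configuration and customization, PR-Agent contributes to our development team's productivity in a supporting role.

We plan to keep using PR-Agent to further improve efficiency and productivity.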
