Supercharge Your Prompt Engineering with Prompty and VS Code!

Hello! I'm Wada (@cognac_n), a generative AI evangelist at KINTO Technologies.

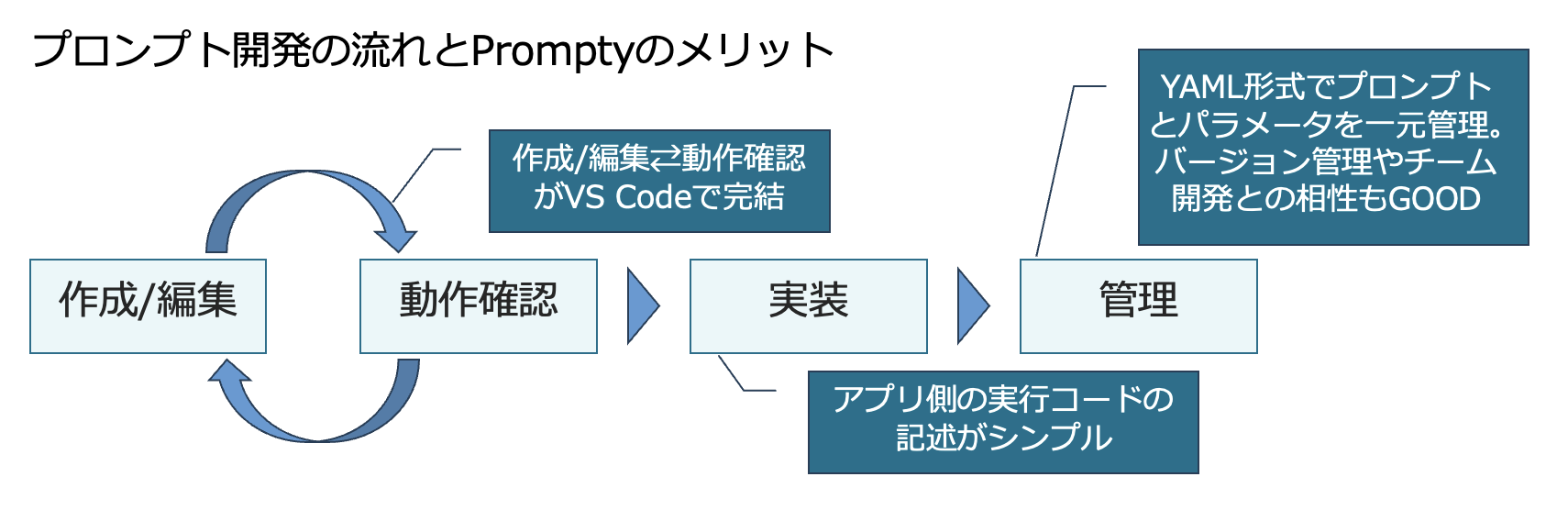

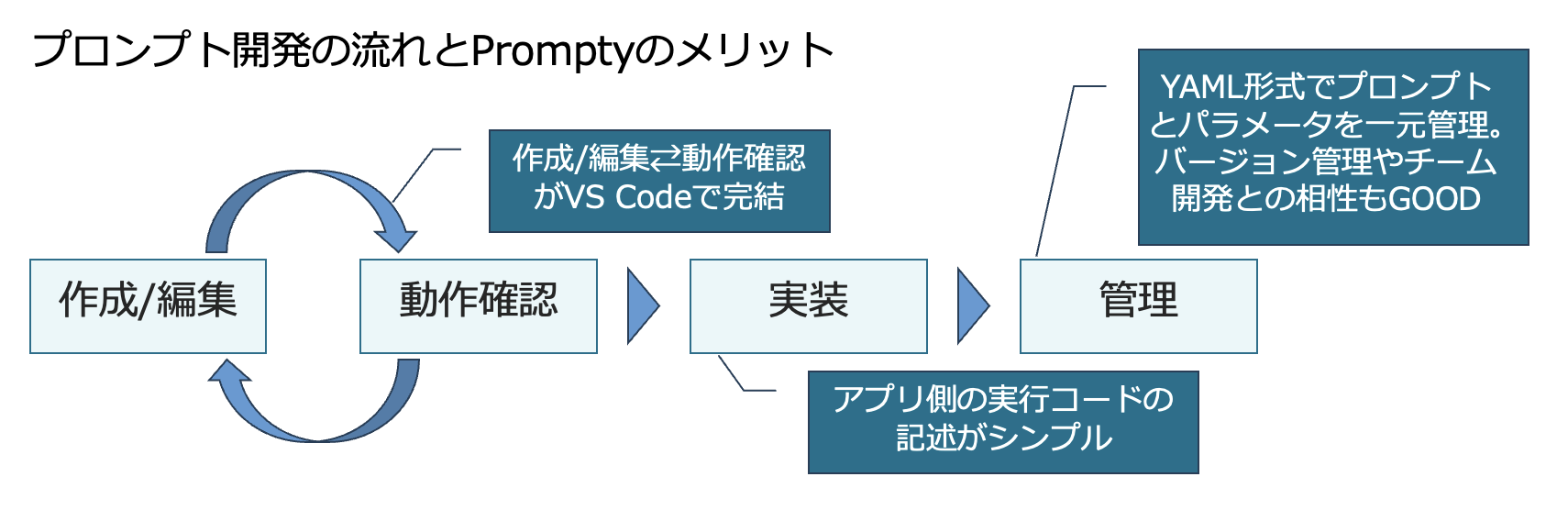

How do you manage your prompts? In this post, I'll introduce Prompty, a tool that makes it easy to create, edit, test, implement, and manage prompts!

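1. What is Prompty?

Prompty is a tool for efficiently developing the prompts you use with large language models (LLMs). Each .prompty file keeps the prompt and its parameters together in one place, with the parameters written as YAML, which makes it a great fit for versioning prompts with Git on platforms such as GitHub and for team development. The Visual Studio Code (hereafter VS Code) extension can greatly improve the efficiency of your prompt engineering work.

Benefits of adopting Prompty

Prompty's integrations with Azure AI Studio and Prompt Flow are also attractive, but this post focuses on the VS Code integration.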

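Prompty is recommended if you:

- want to speed up prompt development

- need version control for your prompts

- develop prompts as a team

- want to keep the application code that runs your prompts simple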

2. Prerequisites

Prerequisites (as of this writing)

- Python 3.9 or later

- VS Code (if you use the extension)

- An OpenAI API key or an Azure OpenAI endpoint (depending on the LLM you use)

Installation and initial setup

Install the Prompty extension for VS Code.

Then install the library with pip or a similar tool:

```shell
pip install prompty
```

3. Trying it out

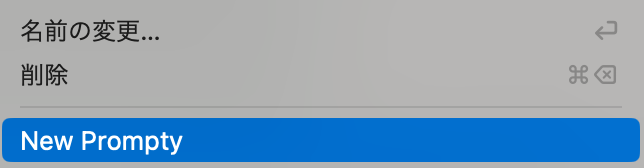

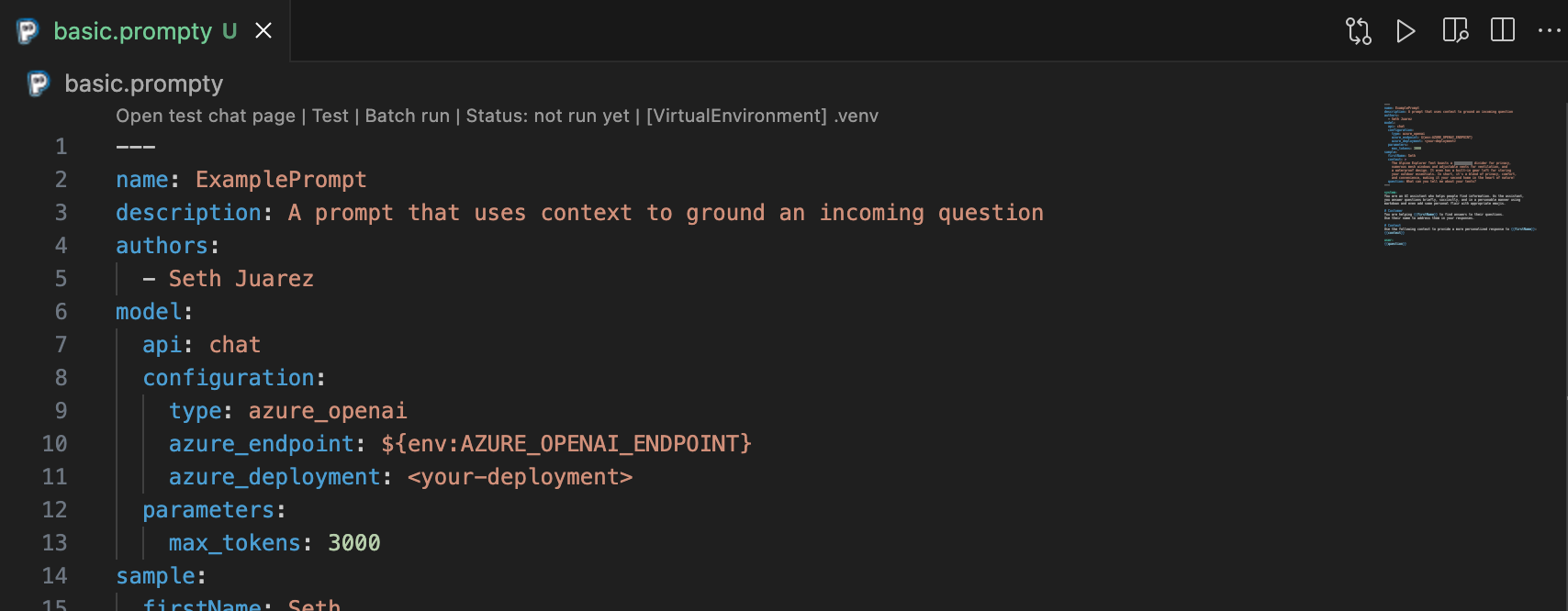

3-1. Creating a new Prompty file

In the Explorer tab, right-click and select "New Prompty" to generate a template file.

New Prompty

The generated template looks like this:

```yaml
---
name: ExamplePrompt
description: A prompt that uses context to ground an incoming question
authors:
  - Seth Juarez
model:
  api: chat
  configuration:
    type: azure_openai
    azure_endpoint: ${env:AZURE_OPENAI_ENDPOINT}
    azure_deployment: <your-deployment>
  parameters:
    max_tokens: 3000
sample:
  firstName: Seth
  context: >
    The Alpine Explorer Tent boasts a detachable divider for privacy,
    numerous mesh windows and adjustable vents for ventilation, and
    a waterproof design. It even has a built-in gear loft for storing
    your outdoor essentials. In short, it's a blend of privacy, comfort,
    and convenience, making it your second home in the heart of nature!
  question: What can you tell me about your tents?
---

system:
You are an AI assistant who helps people find information. As the assistant,
you answer questions briefly, succinctly, and in a personable manner using
markdown and even add some personal flair with appropriate emojis.

# Customer
You are helping {{firstName}} to find answers to their questions.
Use their name to address them in your responses.

# Context
Use the following context to provide a more personalized response to {{firstName}}:
{{context}}

user:
{{question}}
```

Parameters go in the region enclosed by the `---` lines, and the prompt body goes below it. Markers such as `system:` and `user:` define the role of each message.
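To make this structure concrete, here is a minimal Python sketch that splits a `.prompty` file into its front matter (the part between the `---` lines) and a list of role messages. It assumes each role marker such as `system:` or `user:` sits alone on its own line. `split_prompty` is a hypothetical helper for illustration only, not part of the Prompty library, whose real parser handles more cases.

```python
import re

# A role marker such as "system:" or "user:" alone on a line starts a new message.
ROLE_LINE = re.compile(r"^(system|user|assistant):\s*$")


def split_prompty(text):
    """Split .prompty text into (front_matter, [(role, content), ...])."""
    lines = text.strip().splitlines()
    stripped = [line.strip() for line in lines]
    if not stripped or stripped[0] != "---":
        raise ValueError("a .prompty file should start with a '---' line")
    try:
        end = stripped.index("---", 1)  # closing '---' of the front matter
    except ValueError:
        raise ValueError("no closing '---' line found") from None
    front_matter = "\n".join(lines[1:end])
    messages = []  # list of [role, [body lines]]
    for line in lines[end + 1:]:
        match = ROLE_LINE.match(line.strip())
        if match:
            messages.append([match.group(1), []])
        elif messages:  # text before the first role marker is ignored
            messages[-1][1].append(line)
    return front_matter, [(role, "\n".join(body).strip()) for role, body in messages]


EXAMPLE = """---
name: ExamplePrompt
---
system:
You are an AI assistant.

user:
{{question}}
"""

front_matter, messages = split_prompty(EXAMPLE)
print(front_matter)  # name: ExamplePrompt
print(messages)      # [('system', 'You are an AI assistant.'), ('user', '{{question}}')]
```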

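Basic parameters

| Parameter | Description |
|---|---|
| name | The name of the prompt |
| description | A description of the prompt |
| authors | Information about the prompt's authors |
| model | Information about the generative AI model the prompt uses |
| sample | If the prompt contains placeholders such as {{context}}, the values written here are substituted in when you test the prompt |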
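The `sample` substitution works roughly like the sketch below. Prompty itself renders the prompt body with a full template engine; the hypothetical `fill_placeholders` helper here is a simplified stand-in that only handles plain `{{name}}` placeholders (no loops or conditionals) and raises an error when a value is missing.

```python
import re

# Matches {{name}}, allowing optional spaces inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def fill_placeholders(template, values):
    """Replace each {{name}} with values[name]; raise KeyError if a value is missing."""
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value for placeholder '{key}'")
        return str(values[key])
    return PLACEHOLDER.sub(substitute, template)


SAMPLE = {"firstName": "Seth", "question": "What can you tell me about your tents?"}
filled = fill_placeholders("Hi {{firstName}}! Q: {{question}}", SAMPLE)
print(filled)  # Hi Seth! Q: What can you tell me about your tents?
```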

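3-2. Configuring API keys and parameters

There are several ways to provide the API key and endpoint needed to call the API, as well as the runtime parameters.

[Pattern 1] Writing settings in the .prompty file

Write the settings directly in the .prompty file.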

```yaml
model:
  api: chat
  configuration:
    type: azure_openai
    azure_endpoint: ${env:AZURE_OPENAI_ENDPOINT}
    azure_deployment: <your-deployment>
  parameters:
    max_tokens: 3000
```

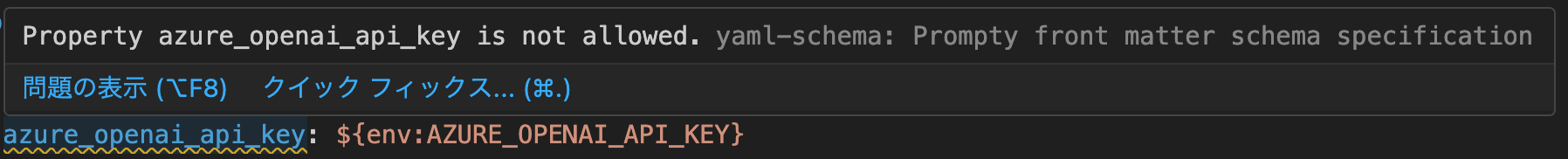

You can also reference environment variables, as in `${env:AZURE_OPENAI_ENDPOINT}`. However, azure_openai_api_key cannot be set this way.

azure_openai_api_key cannot be written in the .prompty file
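As a rough illustration of how a `${env:NAME}` reference can be resolved, here is a minimal Python sketch. The helper `resolve_env_refs` and the endpoint URL are hypothetical. How Prompty itself treats an unset variable is not covered here; this sketch simply raises `KeyError`.

```python
import os
import re

# Matches ${env:NAME} where NAME is a typical environment variable name.
ENV_REF = re.compile(r"\$\{env:([A-Za-z_][A-Za-z0-9_]*)\}")


def resolve_env_refs(value, environ=None):
    """Replace every ${env:NAME} in value with the environment variable NAME."""
    env = os.environ if environ is None else environ

    def lookup(match):
        name = match.group(1)
        if name not in env:
            raise KeyError(f"environment variable {name} is not set")
        return env[name]

    return ENV_REF.sub(lookup, value)


# Pass an explicit mapping so the example does not depend on the real environment.
resolved = resolve_env_refs(
    "${env:AZURE_OPENAI_ENDPOINT}",
    {"AZURE_OPENAI_ENDPOINT": "https://example.openai.azure.com/"},
)
print(resolved)  # https://example.openai.azure.com/
```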

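[Pattern 2] Configuring with settings.json

This approach uses VS Code's settings.json. If you click the play button at the top right while settings are missing, you are prompted to edit settings.json. Besides the default definition, you can create multiple configurations and switch between them while testing. If type is set to azure_openai and you run with api_key left empty, you are guided to authenticate with Microsoft Entra ID, described below.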

```json
{
  "prompty.modelConfigurations": [
    {
      "name": "default",
      "type": "azure_openai",
      "api_version": "2023-12-01-preview",
      "azure_endpoint": "${env:AZURE_OPENAI_ENDPOINT}",
      "azure_deployment": "",
      "api_key": "${env:AZURE_OPENAI_API_KEY}"
    },
    {
      "name": "gpt-3.5-turbo",
      "type": "openai",
      "api_key": "${env:OPENAI_API_KEY}",
      "organization": "${env:OPENAI_ORG_ID}",
      "base_url": "${env:OPENAI_BASE_URL}"
    }
  ]
}
```
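Conceptually, each entry in `prompty.modelConfigurations` is identified by its `name`, and switching configurations means picking a different entry. The sketch below models only that data shape; `pick_configuration` is a hypothetical helper, not how the VS Code extension is actually implemented.

```python
import json

# A trimmed-down version of the settings.json content above.
SETTINGS_JSON = """
{
  "prompty.modelConfigurations": [
    {"name": "default", "type": "azure_openai", "azure_deployment": ""},
    {"name": "gpt-3.5-turbo", "type": "openai"}
  ]
}
"""


def pick_configuration(settings, name="default"):
    """Return the entry in prompty.modelConfigurations whose "name" matches."""
    for config in settings.get("prompty.modelConfigurations", []):
        if config.get("name") == name:
            return config
    raise LookupError(f"no model configuration named {name!r}")


settings = json.loads(SETTINGS_JSON)
print(pick_configuration(settings)["type"])                   # azure_openai
print(pick_configuration(settings, "gpt-3.5-turbo")["type"])  # openai
```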

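[Pattern 3] Configuring with a .env file

If you create a .env file, environment variables are read from it. Note that the .env file must be placed in the same directory as the .prompty file you are using. This is a very convenient option when trying things out locally.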

```text
AZURE_OPENAI_API_KEY=YOUR_AZURE_OPENAI_API_KEY
AZURE_OPENAI_ENDPOINT=YOUR_AZURE_OPENAI_ENDPOINT
AZURE_OPENAI_API_VERSION=YOUR_AZURE_OPENAI_API_VERSION
```
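The sketch below shows the idea of reading a `.env` file that sits next to a `.prompty` file. `load_dotenv_next_to` is a hypothetical, deliberately minimal parser (plain `KEY=VALUE` lines, `#` comments, optional surrounding quotes) and the values are dummies; in real code, the python-dotenv package (`dotenv_values`) handles escaping and other edge cases more thoroughly.

```python
import tempfile
from pathlib import Path


def load_dotenv_next_to(prompty_path):
    """Read KEY=VALUE lines from a .env file in the same folder as the .prompty file."""
    env_file = Path(prompty_path).resolve().parent / ".env"
    values = {}
    if not env_file.is_file():
        return values
    for raw in env_file.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments, and malformed lines
        key, _, value = line.partition("=")
        value = value.strip()
        # Remove one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


# Demo: write a throwaway .env next to a (not yet existing) example.prompty.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / ".env").write_text(
        "# local settings\n"
        "AZURE_OPENAI_ENDPOINT=https://example.openai.azure.com/\n"
        'AZURE_OPENAI_API_KEY="dummy-key"\n',
        encoding="utf-8",
    )
    loaded = load_dotenv_next_to(Path(tmp) / "example.prompty")

print(loaded)
```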

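[Pattern 4] Configuring with Microsoft Entra ID

You can use the API by signing in with a Microsoft Entra ID account that has been assigned the appropriate permissions.

I haven't tried this one myself yet.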

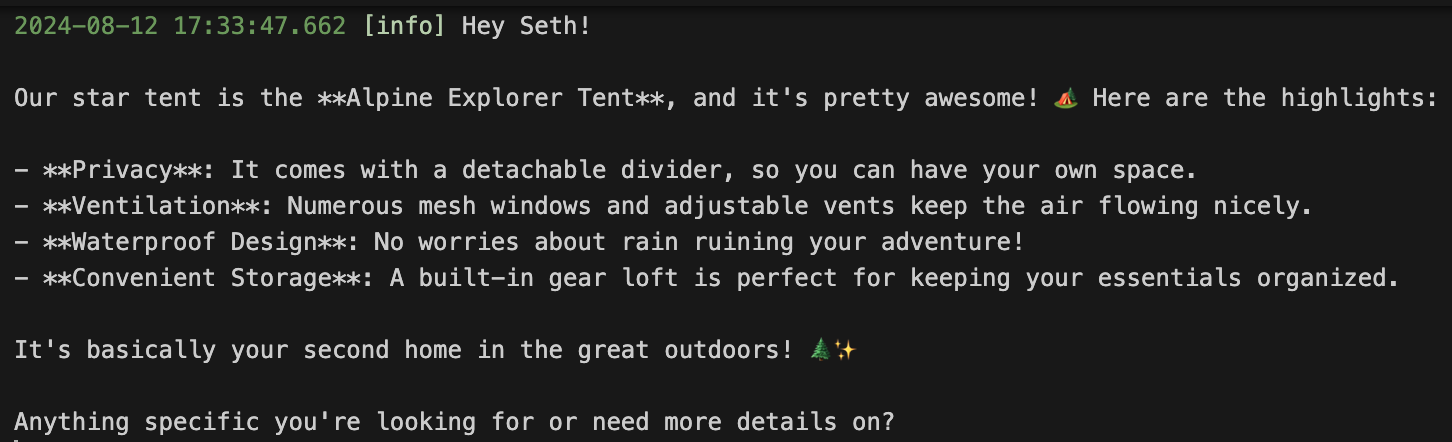

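3-3. Running prompts in VS Code

You can run a prompt easily with the play button at the top right. The result appears in the OUTPUT panel. Selecting "Prompty Output(Verbose)" from the dropdown in the OUTPUT panel shows the raw result data, which is handy when you want details such as how the placeholders were filled in and the token usage.

The play button at the top right runs the prompt

Check the result in the OUTPUT panel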

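3-4. Other parameters

Many more parameters are described on the following page.

Defining inputs, and outputs when you use JSON mode, makes a prompt much easier to understand at a glance, so I recommend defining them.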

```yaml
inputs:
  firstName:
    type: str
    description: The first name of the person asking the question.
  context:
    type: str
    description: The context or description of the item or topic being discussed.
  question:
    type: str
    description: The specific question being asked.
```
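Declared `inputs` also make it easy to catch mismatches before calling the model. The hypothetical `check_inputs` below compares the keyword arguments you plan to pass against declarations like the ones above. Only the `str`, `int`, and `float` type names are checked, and this is an illustration rather than Prompty's own validation.

```python
# The inputs declared above, as a Python dict (descriptions omitted).
INPUTS = {
    "firstName": {"type": "str"},
    "context": {"type": "str"},
    "question": {"type": "str"},
}

# Type names this sketch knows how to check; other names are not checked.
TYPE_MAP = {"str": str, "int": int, "float": float}


def check_inputs(declared, provided):
    """Return a list of problems: missing inputs, wrong types, or unexpected inputs."""
    problems = []
    for name, spec in declared.items():
        if name not in provided:
            problems.append(f"missing input: {name}")
            continue
        expected = TYPE_MAP.get(spec.get("type"))
        if expected is not None and not isinstance(provided[name], expected):
            problems.append(f"{name} should be {spec['type']}")
    for name in provided:
        if name not in declared:
            problems.append(f"unexpected input: {name}")
    return problems


problems = check_inputs(INPUTS, {"firstName": "Seth", "question": 42})
print(problems)  # ['missing input: context', 'question should be str']
```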

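3-5. Integrating into an application

The syntax depends on the library your application uses. Prompty itself is also being updated frequently, so always check the latest documentation. For reference, here is code I used in combination with Prompt Flow. It lets you run a prompt with very little code.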

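```python
from promptflow.core import AzureOpenAIModelConfiguration, Prompty

# Configuration used to load the Prompty file with Azure OpenAI
configuration = AzureOpenAIModelConfiguration(
    azure_deployment="gpt-4o",  # Azure OpenAI deployment name
    api_key="${env:AZURE_OPENAI_API_KEY}",  # API key read from an environment variable
    api_version="${env:AZURE_OPENAI_API_VERSION}",  # API version read from an environment variable
    azure_endpoint="${env:AZURE_OPENAI_ENDPOINT}",  # Azure endpoint read from an environment variable
)

# Override model settings; as a sample, max_tokens is overridden here.
# The keys mirror the model section of the .prompty file, so max_tokens goes under "parameters".
override_model = {"configuration": configuration, "parameters": {"max_tokens": 2048}}

# Load the Prompty file with the overridden model settings
prompty = Prompty.load(
    source="to_your_prompty_file_path",  # path to the Prompty file to use
    model=override_model,  # apply the overridden model settings
)

# Example input values (replace with your application's data)
first_name = "Seth"
context = "The Alpine Explorer Tent boasts a detachable divider for privacy and a waterproof design."
question = "What can you tell me about your tents?"

# Run the prompt with the given inputs and get the result
result = prompty(firstName=first_name, context=context, question=question)
print(result)
```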
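The `model` argument overrides values from the `model` section of the `.prompty` file. Whether promptflow merges nested sections recursively or replaces them wholesale is something to confirm in its documentation. The hypothetical `merge_model` below merely illustrates a recursive override with plain dicts, and shows that an override only lands on the intended key when it mirrors the file's nesting (for example, `max_tokens` under `parameters`).

```python
import copy


def merge_model(base, override):
    """Recursively merge override into a copy of base; override wins on conflicts."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_model(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


# The model section of the template, as a dict.
file_model = {
    "api": "chat",
    "configuration": {"type": "azure_openai", "azure_deployment": "<your-deployment>"},
    "parameters": {"max_tokens": 3000},
}

override = {"configuration": {"azure_deployment": "gpt-4o"}, "parameters": {"max_tokens": 2048}}
merged = merge_model(file_model, override)
print(merged["configuration"])  # {'type': 'azure_openai', 'azure_deployment': 'gpt-4o'}
print(merged["parameters"])     # {'max_tokens': 2048}

# A top-level max_tokens does not reach the nested parameters in this sketch.
flat = merge_model(file_model, {"max_tokens": 2048})
print(flat["parameters"])       # {'max_tokens': 3000}
```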

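4. Summary

Prompty turned out to be a powerful tool that can greatly streamline prompt engineering! The VS Code-based workflow is especially convenient, because it lets you move seamlessly from creating and testing prompts to implementing and managing them. I believe that mastering Prompty can significantly improve both the efficiency and the quality of your prompt engineering. Please give it a try!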

Benefits of adopting Prompty (repeated from above)

We Are Hiring!

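KINTO Technologies is looking for colleagues to help drive the use of generative AI across our business. We are happy to start with a casual interview. If you are even a little interested, please contact us through the link below or via DM on X. We look forward to hearing from you!! Our generative AI initiatives are introduced here.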

Thank you for reading to the end!


QAグループについて QAグループでは、自社サービスである『KINTO』サービスサイトをはじめ、提供する各種サービスにおいて、リリース前の品質保証、およびサービス品質の向上に向けたQA業務を行なっております。QAグループはまだ成⾧途中の組織ですが、テスト管理ツールの導入や自動化の一部導入など、QAプロセスの最適化に向けて、積極的な取り組みを行っています。