Exploring DeepSeek R1 with Azure AI Foundry

Hello!

I am Wada (@cognac_n), a Generative AI Evangelist at KINTO Technologies (KTC), part of the Generative AI Development Project.


1. Introduction

Large language models (LLMs) have advanced rapidly in recent years, with numerous companies announcing their own models. One that has attracted particular attention is DeepSeek R1, developed by the Chinese AI company DeepSeek.

On January 30, 2025, Microsoft made the DeepSeek R1 model available on Azure AI Foundry and GitHub. In this article, I’ll share a summary of the process I followed to try out DeepSeek R1 using Azure AI Foundry, along with my impressions.

2. What is Azure AI Foundry?

Azure AI Foundry is Microsoft's comprehensive platform for AI development. It offers a unified set of tools and services for developers to build, evaluate, and deploy generative AI solutions and custom copilots.

Main features

- Utilizing various AI models: Supports a wide range of cutting-edge and open-source models from Microsoft and from partners such as OpenAI, Hugging Face, Meta, and Mistral. DeepSeek R1 was also provided as part of this initiative.

- Integrated AI toolchain: An SDK, API, and portal are provided to accelerate the entire development lifecycle, offering a seamless experience from data preprocessing to model inference and continuous monitoring.

- Responsible AI practices: Built-in evaluation features, safety filters, and security controls provide mechanisms for improving the reliability and transparency of AI.

- Scalability for enterprises: Azure's managed infrastructure provides high availability and scalability, and supports enterprise-level monitoring and governance.

The Azure AI Foundry portal states that when using models through the model catalog, prompts and outputs are not shared with Microsoft or the model provider. This allows for secure use of a variety of models.

Microsoft provides and manages the hosting infrastructure and API endpoints. Models hosted in this "Model as a Service" (MaaS) scenario are subject to Azure's data, privacy, and security commitments. Check the details of the Azure compliance certifications applicable to Azure AI Foundry. Microsoft functions as a data processor for prompts sent to, and outputs generated by, models deployed for pay-as-you-go inference (MaaS). Microsoft does not share these prompts and outputs with the model provider. Additionally, Microsoft does not use these prompts and outputs to train or improve Microsoft, model provider, or third-party models.

3. Building a DeepSeek R1 Environment on Azure AI Foundry

From this point forward, I’ll walk through the steps to actually use DeepSeek R1 on Azure AI Foundry. Please note that the detailed screen transitions and settings are based on the specifications as of January 31, 2025. Since changes to the UI and operations occur frequently, I will quote the official documentation as much as possible.

Prerequisites and account setup

- An Azure account

- Access to Azure AI Foundry

Steps for deploying DeepSeek R1

- Creating a Project:

Create a new project on the Azure AI Foundry portal. DeepSeek-R1 is available in the following regions: eastus2, westus3, northcentralus, eastus, southcentralus, westus (as of January 31, 2025).

- Selecting the DeepSeek R1 model:

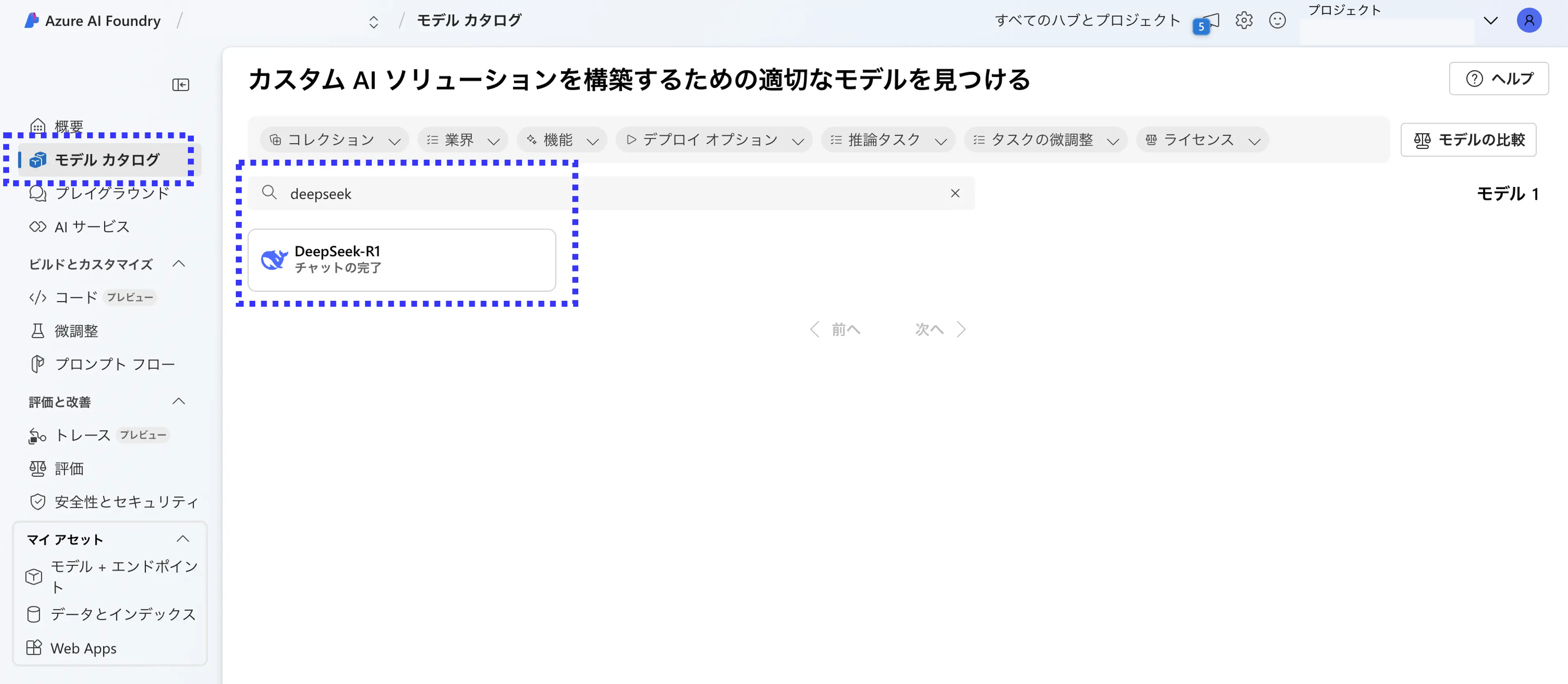

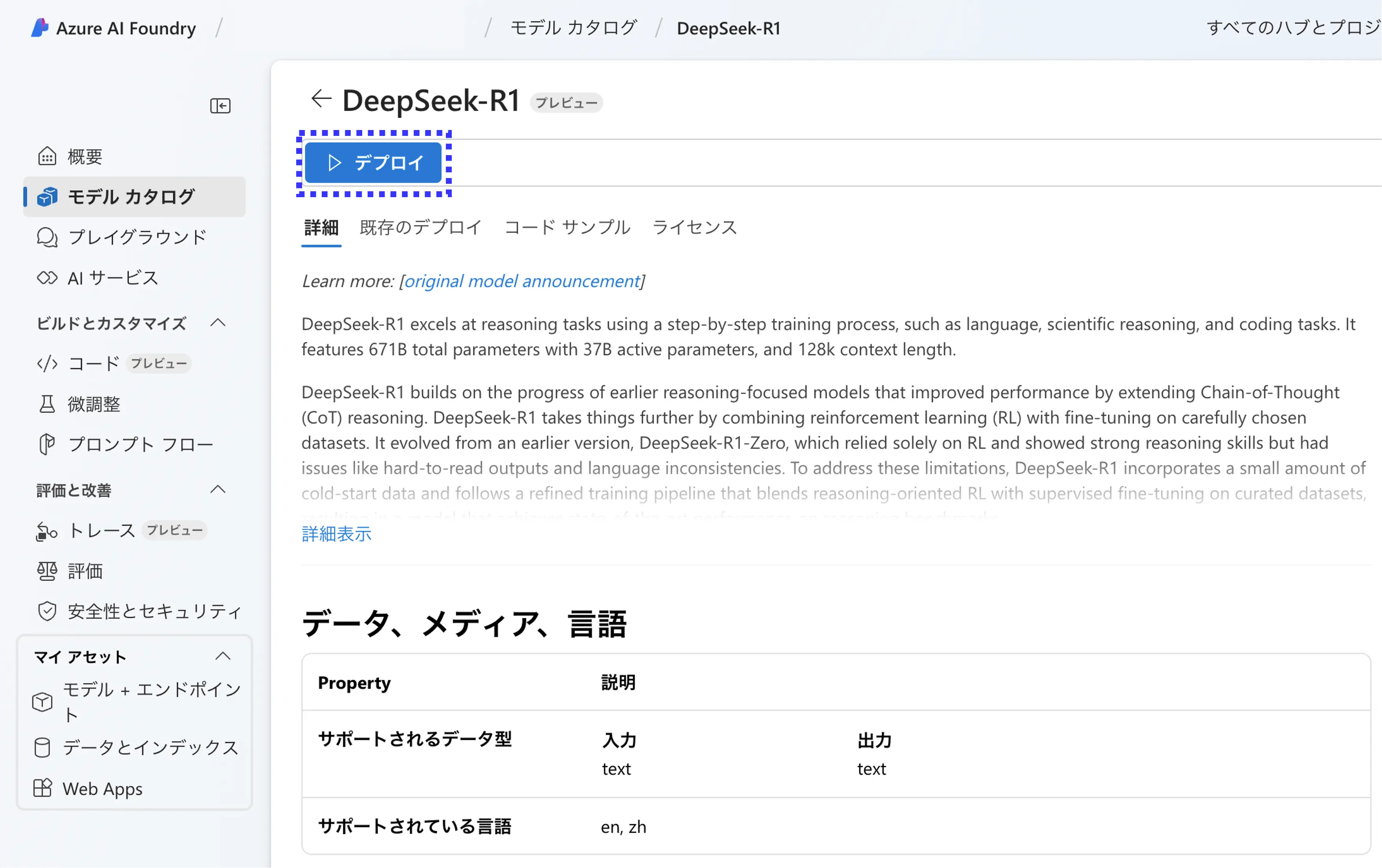

Search for DeepSeek R1 in the "Model Catalog" and open the model page.

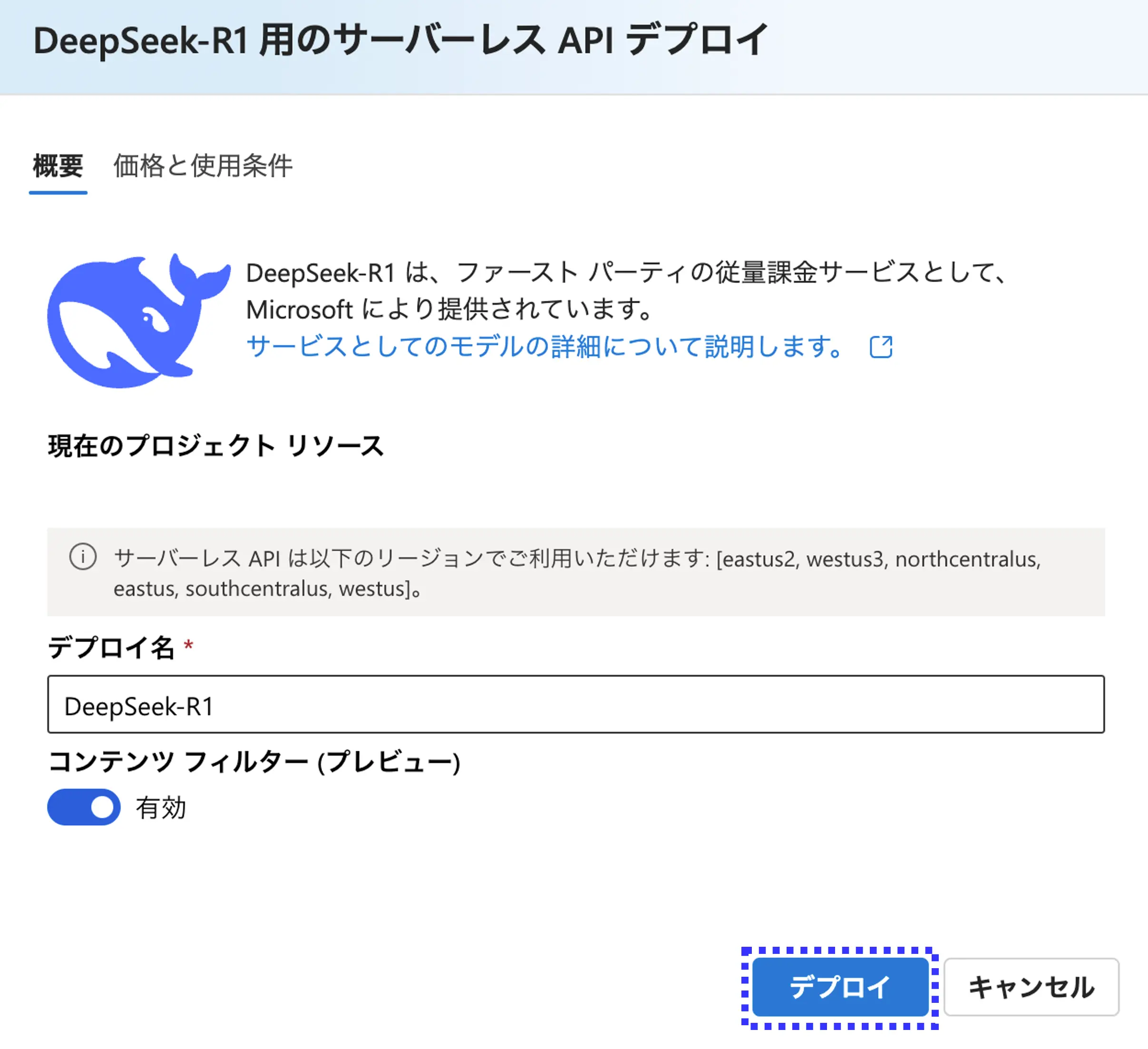

- Set deployment name and check the price:

- Set a deployment name of your choice.

- Set the content filter to ON/OFF.

- As of January 31, 2025, DeepSeek-R1 is provided free of charge. The price is expected to change in the future, so be sure to check back regularly for the latest information.

DeepSeek R1 is currently available at no cost, though usage is subject to rate limits that may change at any time. Pricing may be updated in the future, and continued use will be subject to the new rates. As the model is in preview, a new deployment may be required to maintain access.

- Deployment and endpoint creation:

An endpoint is automatically generated in the Azure AI Foundry workspace, and a URI and API key for calling the model are issued.
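Rather than pasting the issued URI and API key directly into source code, one option is to read them from environment variables. The following is a minimal sketch under that assumption. The variable names `AZURE_AI_ENDPOINT`, `AZURE_AI_KEY`, and `AZURE_AI_DEPLOYMENT`, as well as the `FoundryConfig` class and the `load_foundry_config` helper, are hypothetical names chosen for illustration and are not defined by Azure AI Foundry.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class FoundryConfig:
    endpoint: str    # target URI issued for the deployment
    api_key: str     # API key issued for the endpoint
    deployment: str  # deployment name chosen during setup


def load_foundry_config(env: Optional[Mapping[str, str]] = None) -> FoundryConfig:
    """Read connection settings from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    names = ("AZURE_AI_ENDPOINT", "AZURE_AI_KEY", "AZURE_AI_DEPLOYMENT")
    # Collect every missing or empty variable so the error lists them all at once.
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return FoundryConfig(*(env[name] for name in names))
```

The loaded values can then be passed to the client in place of hard-coded strings, which keeps the key out of version control.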

4. A simple demo using DeepSeek R1

Here is some sample code for trying out inference with DeepSeek R1. First, install the required library.

pip install azure-ai-inference

Below is sample code that calls the API.

Azure AI Foundry also provides code samples, which makes it very easy to get started.

from azure.ai.inference import ChatCompletionsClient
from azure.ai.inference.models import SystemMessage, UserMessage
from azure.core.credentials import AzureKeyCredential

# Create a client for the deployed endpoint (target URI and key issued at deployment)
client = ChatCompletionsClient(
    endpoint="<YOUR_TARGET_URI>",
    credential=AzureKeyCredential("<YOUR_KEY>"),
)

deploy_name = "<YOUR_DEPLOY_NAME>"

# Send a chat completion request to the DeepSeek R1 deployment
response = client.complete(
    messages=[
        SystemMessage(content="You are a helpful assistant."),
        UserMessage(
            content="Please think about ways to promote the use of generative AI in companies. Answer in Japanese."
        ),
    ],
    max_tokens=2048,
    model=deploy_name,
)

# Print the generated text, including the <think> block
print(response.choices[0].message.content)
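Reasoning models can take a while to respond, so it may be useful to record how long each call takes. The sketch below wraps any callable in a timer using only the standard library. `timed_call` is a hypothetical helper name, and the commented usage assumes the `client` and `deploy_name` from the code above.

```python
import time
from typing import Any, Callable, Tuple


def timed_call(fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Tuple[Any, float]:
    """Call fn with the given arguments and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


# Example usage with the client defined above (not executed here):
# response, seconds = timed_call(
#     client.complete,
#     messages=[UserMessage(content="Hello")],
#     model=deploy_name,
# )
# print(f"Response took {seconds:.1f} s")
```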

Example of response

<think>

The first step is to clearly understand what the problem is. You need to think about how to promote the use of generative AI in the company.

Generative AI has a wide range of potential applications, so it's important to first assess and understand your company’s current situation. It's essential to identify which departments could benefit from AI adoption and what potential challenges they may face. For example, marketing, customer support, and business process automation are some potential areas.

Next, education and training are important. If employees are not provided with opportunities to learn how to use AI, it will be difficult to actually utilize it. Especially, to alleviate concerns and lack of understanding about AI, it might be a good idea to conduct workshops and training programs.

Selecting the right tools is also necessary. It is important to select a generative AI platform or tools that suit your company's needs. Depending on the situation, customization may be necessary, and addressing security and data privacy issues is also required.

Support from the management is also essential. Without support from management, it will be challenging to secure the necessary budget and resources. Clearly communicating the benefits of AI implementation is essential to gaining management’s understanding and support.

Furthermore, making successful cases is effective. If certain departments successfully pilot AI and demonstrate tangible results, it becomes easier to scale adoption across other departments. Sharing the results company-wide and expanding on them can be an effective strategy.

It is also important to develop ethical guidelines and policies. Clear rules will need to be established to prevent the misuse of AI and encourage its responsible use.

Continuous evaluation and improvement also should not be overlooked. Even after implementing AI, it's crucial to evaluate its effectiveness and make improvements based on feedback to ensure ongoing progress.

It seems important to consolidate these points and consider concrete steps to move forward.

</think>

To promote the utilization of generative AI within a company, it is effective to systematically follow the steps below:

---

### **1. Current situation analysis and goal setting**

- **Identifying needs**: Identify the business challenges of each department (planning, sales, development, support, etc.) and clarify the areas where generative AI can provide solutions (document creation, data analysis, customer support, etc.).

- **Prioritization**: Prioritize the implementation of use cases with high impact and feasibility (e.g., automatic response to inquiries and automated report generation).

---

### **2. Building a system and changing awareness**

- **Launching a promotion team**: Establish a promotion team consisting of representatives from management, the IT department, and each department to collaborate to secure the budget and resources.

- **Implementation of educational programs**:

- **Basic understanding**: Share the mechanisms, benefits, and ethical risks (e.g., data leakage and bias) of generative AI through training.

- **Hands-on training**: Provide hands-on training to promote learning of prompt design and output verification methods.

---

### **3. Tool selection and test operation**

- **Choosing the right AI for your company**:

- Compare open sources (e.g., Llama 2) and cloud services (ChatGPT Enterprise, Google Vertex AI, etc.).

- Select based on security standards (e.g., data encryption and whether the pre-trained model can be customized).

- **PoC (Proof of Concept)**: Conduct test operations in a limited scope to verify accuracy and business efficiency improvement.

---

### **4. Establishment of governance**

- **Development of usage guidelines**:

- Set clear rules to prevent the input of confidential information and implement a system for double-checking output content.

- Address legal risks regarding copyright and privacy.

- **Monitoring System**: Regularly monitor the output quality and ethical aspects of AI and continuously improve them.

---

### **5. Cultivating a company culture**

- **Sharing successful cases**: Boost motivation by sharing success stories from departments that have achieved positive results, through internal presentations or reports.

- **Incentive design**: Recognize and reward teams that have improved business efficiency through the use of AI.

---

### **6. Collaboration with external entities and adaptation to evolution**

- **Collaboration with vendors and experts**: Seek support for staying up to date with the latest technological trends and for implementing necessary customizations.

- **Continuous AI Updates**: Regularly review AI usage methods in response to advancements in model accuracy and changes in regulations.

---

### **Key points**

- **Management commitment**: Demonstrating return on investment (ROI) and securing management support with a long-term perspective is crucial.

- **Improvement driven by the field**: Utilize feedback from employees (i.e., users) to optimize tools and processes.

Generative AI is a "tool," and the success or failure of its utilization depends on "how humans use it." In addition to introducing technology, improving digital literacy throughout the organization and ensuring psychological safety are keys.
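As the example response shows, the reasoning is wrapped in `<think>` tags and the final answer follows. If only the answer is needed downstream, the two parts can be separated with a regular expression. This is a minimal sketch that assumes at most one `<think>...</think>` block per response; `split_reasoning` is a hypothetical helper name and not part of the azure-ai-inference SDK.

```python
import re
from typing import Tuple

# Non-greedy match across newlines for a single <think>...</think> block.
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> Tuple[str, str]:
    """Return (reasoning, answer); reasoning is "" if no <think> block is found."""
    match = _THINK_RE.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the matched block is treated as the answer.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer
```

For example, `reasoning, answer = split_reasoning(response.choices[0].message.content)` would let the application log the reasoning separately and show only the answer to users.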

5. Impressions from actual use

- Response:

  - It took about 4 minutes to get a response this time. The latency was likely caused by both the model's own inference time and heavy load on the service.

- Output quality:

  - Since it is a reasoning model designed to "think," it provides responses that convey a sense of deep insight, even when handling highly abstract or complex prompts.

  - When called via the API, the thinking process is output enclosed in <think> tags, which makes it easy to handle.

  - Looking at the contents inside the <think> tags reveals a thinking process that reads as if a discussion were taking place among multiple people.

- Conclusion:

  - Azure AI Foundry provides a convenient way to experiment with the latest models developed by third parties.

  - In the generative AI space, where "trying it out first" is key, this ease of experimentation is extremely valuable.

We Are Hiring!

KINTO Technologies is looking for passionate individuals to help drive AI adoption in our business. We’re happy to start with a casual interview. If you’re even slightly interested, feel free to reach out via the link below or through X DMs. We look forward to hearing from you!

Thank you for reading this far!
