Load balancing on a global scale

Introduction

My name is Hoang. I am a backend engineer responsible for Europe and South America on the Global KINTO ID Platform (GKIDP) team in the Global Group at KINTO Technologies (KTC). We work on authenticating users all over the world, so providing a fast, reliable, and highly available Identity and Access Management (IAM) system is a must for GKIDP. In this article, I would like to share my thoughts on how to load-balance and route traffic in any kind of cross-border system.

We know that HTTP (like UDP and DNS) is a stateless protocol, which means that a request from a client to a server does not retain any information from previous requests. But why should it be stateless, and what is that good for?

The underlying reason is that a stateless protocol is easy to load-balance in a scalable architecture: any request can be routed to any web server without worrying about the state of earlier requests. That lets web servers scale horizontally, with servers spread across the globe to make the system resilient and performant.

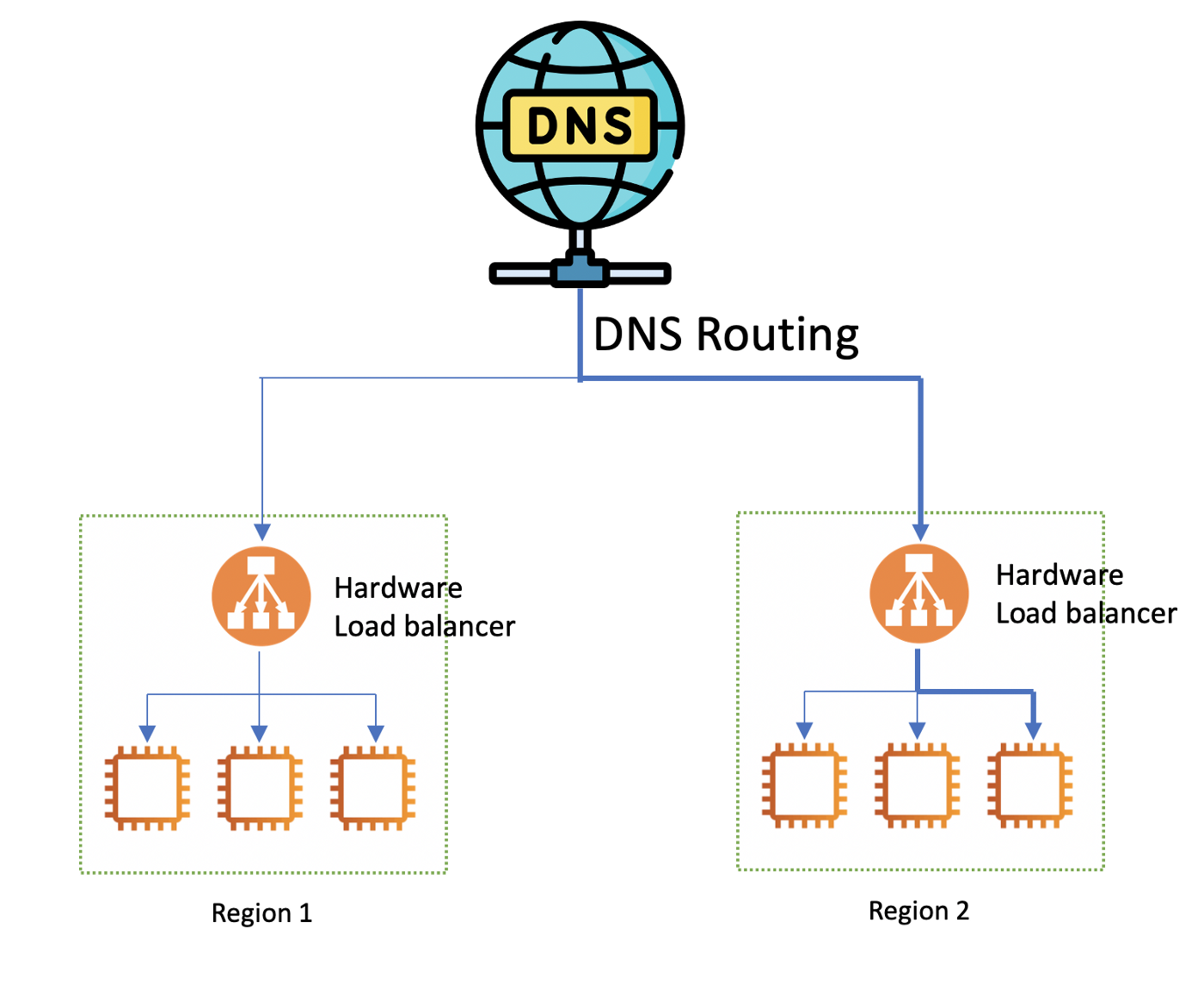

In this article, I would like to introduce two main types of load balancing: DNS routing and the hardware load balancer (often just called a load balancer). These two methods are independent of each other but can be combined to strengthen global systems such as the KINTO vehicle subscription services.

DNS Routing

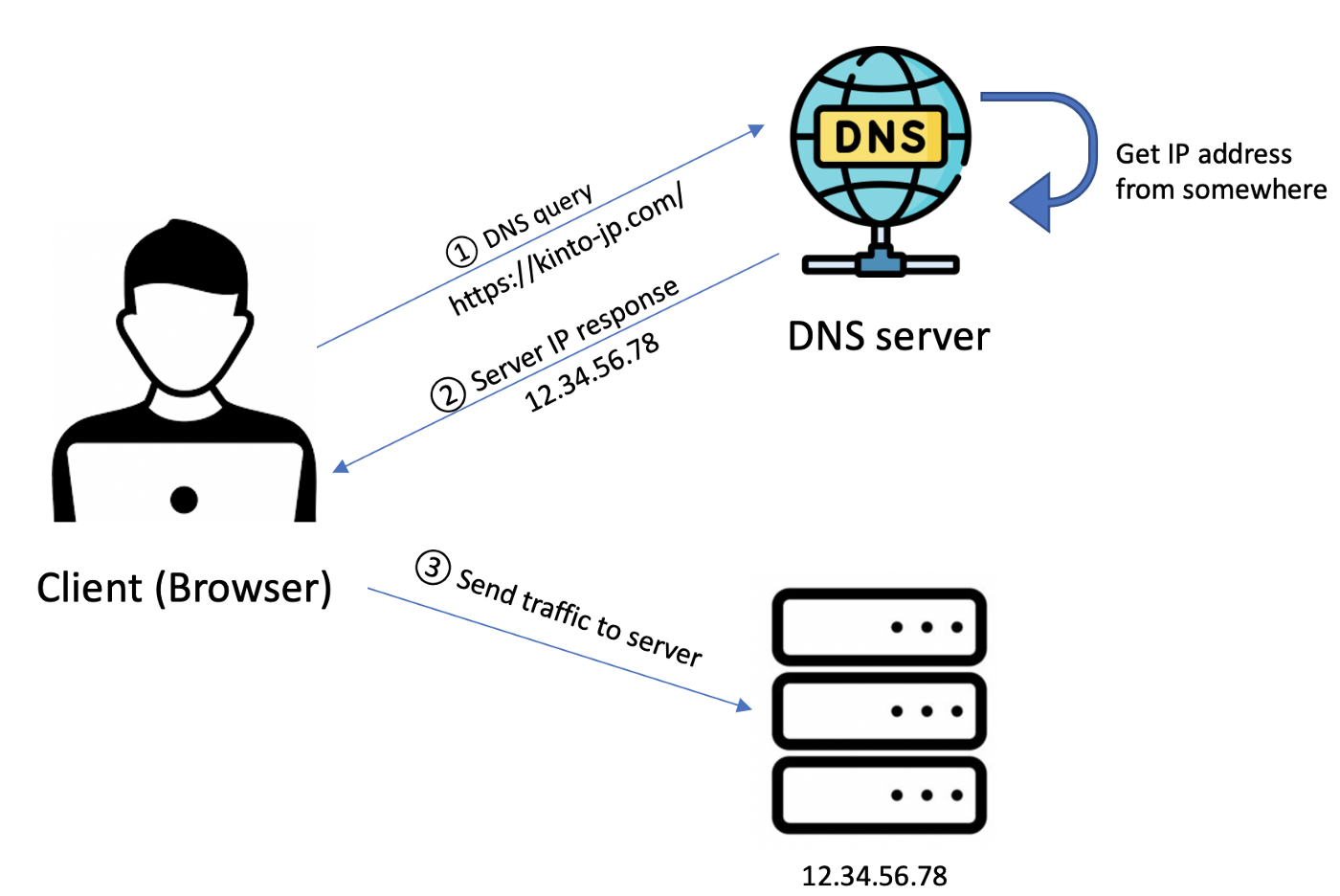

When the user types a URL into the browser, the browser sends a DNS query to a DNS server to get the IP address associated with the website's hostname. The browser then connects to the server using that IP address, not the domain name. The simplest flow looks like this:

Figure 2. DNS Routing

When the system contains multiple servers across regions, the DNS server's responsibility is to direct the client to the most appropriate server to improve performance and availability. The way DNS decides which server's address to return is called DNS routing.

DNS routing is easy to configure and highly scalable because it never touches the user's requests. It is frequently used to route users among data centers or regions. Common DNS routing methods include simple Round Robin and dynamic methods such as geo-location-based, latency-based, and health-based routing.
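As a rough illustration of how a DNS server might combine these methods, here is a minimal sketch that picks an address by client region, skips unhealthy servers, and rotates among the rest. The region table, the IP addresses (documentation-only ranges), and the function name `resolve` are assumptions made for this example, not part of any real DNS service.

```python
from typing import Dict, List, Set

# Hypothetical region -> server IP table (addresses are documentation-only examples).
REGION_SERVERS: Dict[str, List[str]] = {
    "EU": ["192.0.2.10", "192.0.2.11"],
    "SA": ["198.51.100.10"],
}
DEFAULT_REGION = "EU"  # assumed fallback region for this sketch


def resolve(client_region: str, healthy: Set[str], counters: Dict[str, int]) -> str:
    """Return one IP for the client's region, rotating among healthy servers."""
    # Geo-location-based: start from the servers in the client's own region.
    candidates = [ip for ip in REGION_SERVERS.get(client_region, []) if ip in healthy]
    if not candidates:
        # Health-based: fall back to the default region if nothing local is healthy.
        candidates = [ip for ip in REGION_SERVERS[DEFAULT_REGION] if ip in healthy]
    if not candidates:
        raise LookupError("no healthy server available")
    # Round Robin: rotate through the candidates on successive queries.
    i = counters.get(client_region, 0)
    counters[client_region] = i + 1
    return candidates[i % len(candidates)]
```

Note that the function only returns an address. It never sees the user's HTTP request, which is why this kind of routing scales so easily.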

DNS routing has drawbacks, such as stale DNS caches: resolvers and clients cache an answer for its TTL (time-to-live), so they keep using the same IP address for a domain until the TTL expires, even if that server has gone down.
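The stale-cache problem can be sketched with a small caching resolver. This is a simplified model under stated assumptions: the class `CachingResolver`, its `lookup` callback, and the explicit `now` argument are hypothetical names chosen for illustration, and real resolvers are considerably more involved.

```python
from typing import Callable, Dict, Tuple


class CachingResolver:
    """Toy resolver that caches each answer until its TTL expires."""

    def __init__(self, lookup: Callable[[str], str], ttl_seconds: float) -> None:
        self._lookup = lookup          # stands in for the authoritative DNS server
        self._ttl = ttl_seconds
        self._cache: Dict[str, Tuple[str, float]] = {}  # hostname -> (ip, expiry time)

    def resolve(self, hostname: str, now: float) -> str:
        cached = self._cache.get(hostname)
        if cached is not None and now < cached[1]:
            # Still inside the TTL: return the cached IP, even if it is outdated.
            return cached[0]
        ip = self._lookup(hostname)
        self._cache[hostname] = (ip, now + self._ttl)
        return ip
```

If the authoritative answer changes 30 seconds into a 60-second TTL, this resolver keeps returning the old address for the remaining 30 seconds. A shorter TTL narrows that window at the cost of more DNS queries.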

One point of confusion must be cleared up here: DNS does not route any traffic. It only answers DNS queries with an IP address, which is the location where the user should send traffic. In Figure 2, from step 3 onward, the client has the server's IP address and actually sends traffic (such as HTTP requests) to the target server, where the hardware load balancer takes over and distributes traffic among the backend servers behind it. I will explain the hardware load balancer in detail in the next part.

Hardware Load balancer

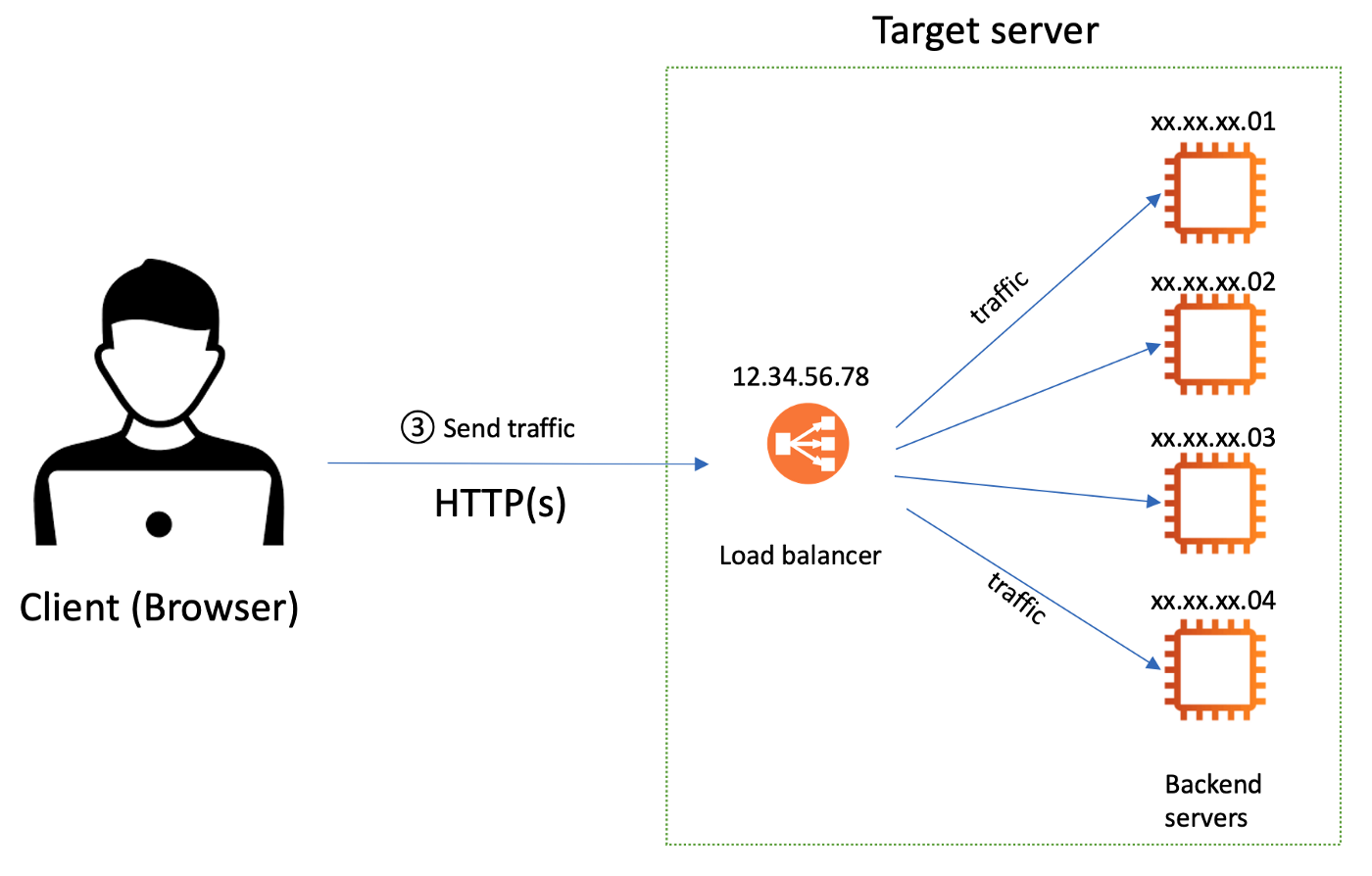

After receiving the IP address that the domain resolves to, the client sends traffic to the target server. From this point on, the hardware load balancer stands in front of a fleet of backend servers and distributes traffic to these web servers. In effect, the hardware load balancer is a reverse proxy: a physical device that acts as the coordinator of the system.

Figure 3. Load balancer as reverse proxy

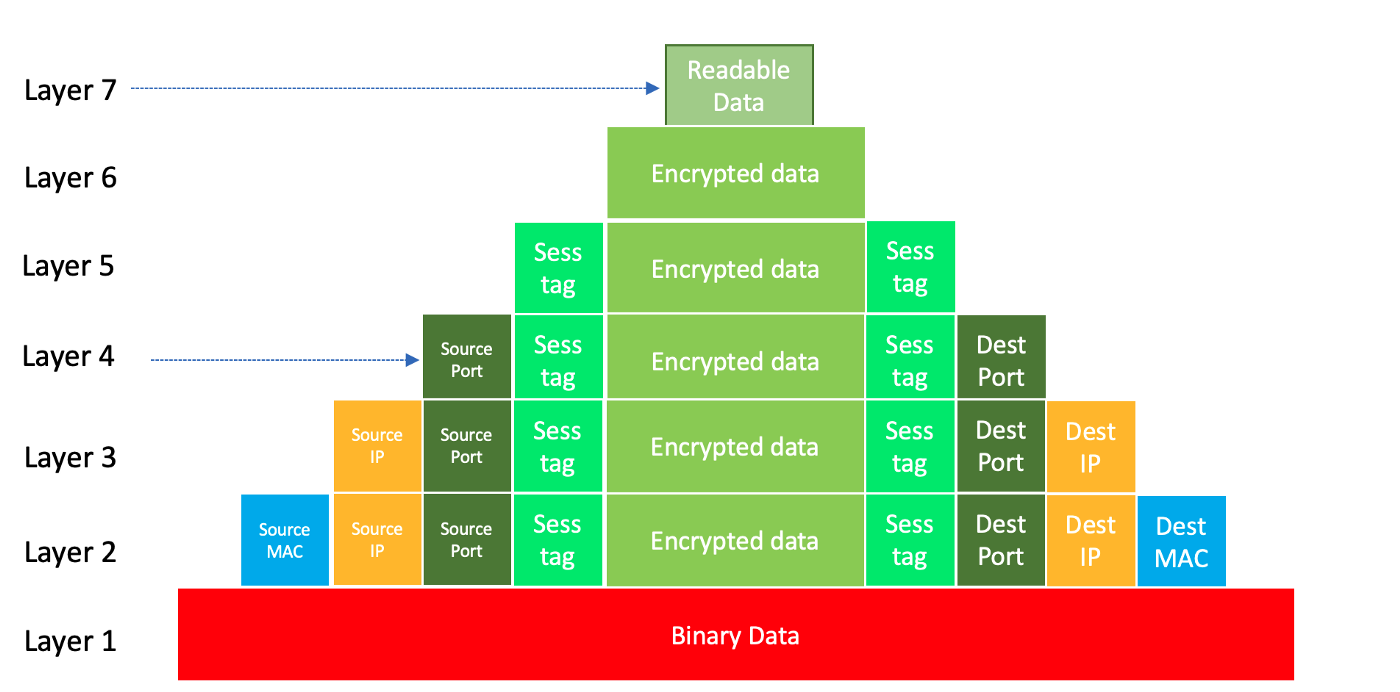

There are two main kinds of hardware load balancing: layer 4 load balancing and layer 7 load balancing, which operate at the Transport layer and the Application layer of the OSI model, respectively. To explain the OSI model briefly, a request is wrapped in 7 layers, like Matryoshka dolls. The deeper the data is unwrapped (from layer 1 to layer 7), the more information is revealed. This means that a layer 7 load balancer has more information about incoming requests than a layer 4 load balancer.

Take a look at the data on each layer of the OSI model below:

Figure 4. Data on each layer of the OSI Model

Layer 4 and layer 7 load balancers differ in how deeply they inspect the incoming request.

Layer 4 load balancing

At layer 4, the load balancer knows little about incoming requests: only the client IP address and ports. The payload is typically encrypted and a layer 4 load balancer does not decrypt it, so it cannot understand anything about the content of the request. That makes load balancing at layer 4 less smart than layer 7 load balancing.

Advantages:

- Simple load balancing

- No decryption or inspection of data => faster, more efficient, and more secure

Disadvantages:

- Only a few load balancing methods (not smart load balancing)

- No caching, because the load balancer cannot read the data.
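To make the layer 4 limitation concrete, here is a minimal sketch of a backend choice that uses only addresses and ports and never looks at the payload. The function name `pick_backend_l4` and the hashing scheme are assumptions for illustration; real layer 4 load balancers use their own algorithms.

```python
import hashlib
from typing import List, Tuple

# (source IP, source port, destination IP, destination port)
Connection = Tuple[str, int, str, int]


def pick_backend_l4(conn: Connection, backends: List[str]) -> str:
    """Choose a backend from connection metadata only (no payload inspection)."""
    src_ip, src_port, dst_ip, dst_port = conn
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    # A stable hash keeps every packet of one connection on the same backend.
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(backends)
    return backends[index]
```

Because the decision depends only on the connection tuple, the same connection always maps to the same backend, but the balancer has no way to route by URL or cookie.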

Layer 7 load balancing

At layer 7, the load balancer can access the readable data of the incoming request (for example, HTTP request headers, the URL, cookies, etc.). A layer 7 load balancer can therefore distribute traffic much more intelligently than a layer 4 one; a very convenient strategy, for example, is path-based routing.

Advantages:

- Smart load balancing

- Caching enabled

Disadvantages:

- Decrypts data in the middle (TLS termination) => slower, and less secure because the load balancer is able to read the data.
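Path-based routing at layer 7 can be sketched as a prefix lookup on the request path. The route table, the backend names, and the default backend below are hypothetical values chosen for this example.

```python
from typing import Dict

# Hypothetical path prefix -> backend pool mapping.
ROUTES: Dict[str, str] = {
    "/processing": "processing-server",
    "/image": "image-server",
}
DEFAULT_BACKEND = "web-server"  # assumed catch-all backend


def route_by_path(path: str) -> str:
    """Return the backend for a request path, preferring the longest matching prefix."""
    for prefix in sorted(ROUTES, key=len, reverse=True):
        # Match the prefix itself or anything below it, but not "/images" for "/image".
        if path == prefix or path.startswith(prefix + "/"):
            return ROUTES[prefix]
    return DEFAULT_BACKEND
```

This decision is only possible because the load balancer has already decrypted and parsed the HTTP request, which is exactly the trade-off listed above.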

Routing/Load balancing methods list

There are several routing/load balancing methods, each suited to a different purpose.

- Round Robin algorithm: The simplest method to implement. The server address is returned in a rotating sequential (or sometimes random) order.

- Weighted algorithm: Controls the percentage of requests that go to each server. For example, to introduce a Canary release to a small group of users, you can set up one small server for the Canary release to gather user feedback and route only 5% of users to it. The remaining 95% of users still go to the stable application version.

- Latency-based policy (usually on DNS routing): Routes to the server with the lowest latency to the client. This policy is suitable when low latency is a priority.

- Least connections (usually on load balancer): Traffic is directed to the server with the fewest active connections. This algorithm improves performance during peak hours by preventing heavy requests from converging on one particular server.

- Health checks (heartbeats): Also known as failover. Monitors the health of each server by maintaining a live session. The load balancer checks the heartbeat of each server registered with it; if a server is unhealthy, it stops routing requests to that server and forwards them to another healthy server.

- IP Hash (usually on load balancer): Maps each client IP address to a fixed server, which helps performance (for example, through caching).

- Geo-location-based routing (usually on DNS routing): Returns the appropriate server for the user's location, by continent or country.

- Multi-Value (only for DNS routing): Returns several IP addresses instead of one.

- Path-based routing (only for layer 7 load balancer): Decides which server should handle the request according to its path. For example, the LB forwards requests for /processing to the processing server and requests for /image to the image server.
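Three of the methods in this list (Round Robin, weighted, and least connections) are simple enough to sketch in a few lines. The function names and the server names used with them are hypothetical, and the weighted version is a random approximation of the target percentages rather than an exact split.

```python
import itertools
import random
from typing import Dict, Iterator, List


def round_robin(servers: List[str]) -> Iterator[str]:
    """Yield servers in a rotating sequential order, forever."""
    return itertools.cycle(servers)


def weighted_choice(weights: Dict[str, int], rng: random.Random) -> str:
    """Pick a server with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def least_connections(active: Dict[str, int]) -> str:
    """Pick the server that currently has the fewest active connections."""
    return min(active, key=lambda name: active[name])
```

For the Canary example above, `weighted_choice({"stable": 95, "canary": 5}, random.Random())` would send roughly 5% of requests to the canary server over a large number of calls.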

Before we leave

The hardware load balancer and DNS routing are easily confused with each other. They are not substitutes for each other but are usually combined. DNS routing is typically used at large scale, such as between data centers or large regions, because it is much cheaper and faster than a hardware load balancer. Hardware load balancers then distribute traffic inside that data center or region. DNS routing deals with DNS queries, while the hardware load balancer deals with traffic.

Understanding these two concepts is essential for scaling a global system with high performance and availability.
