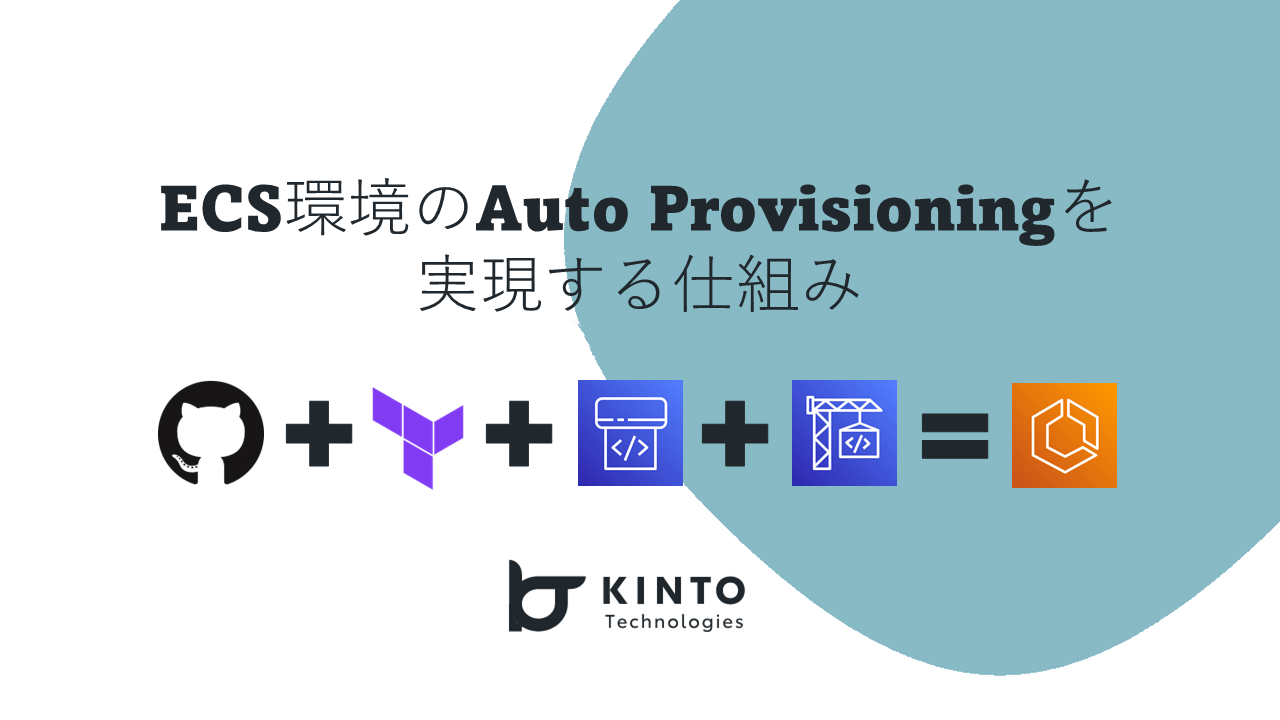

Achieving Auto Provisioning in ECS Environments

Introduction

Hello. My name is Shimamura, and I used to be a DevOps engineer in the Platform Group, but now I'm on the Operation Tool Manager team within the same group, where I'm responsible for Platform Engineering and tool-related development and operations.

KINTO Technologies' Platform Group promotes IaC using Terraform. We define design patterns that are frequently used within the company and provide them as reference architectures, and each environment is built based on those patterns. For the sake of control, each environment from development to production is built upon ticket-based requests. Before building the development environment, we prepare a sandbox environment (AWS account) for the application department's verification. However, the sandbox is often built manually, and it differs in many ways from the environments built by Platform Group.

If a design pattern were available, the environment could be automatically built upon developer request, which would eliminate the waiting time between the request and the creation of the environment, and improve development efficiency. I think this kind of request-based automated building is a common requirement in DevOps, but it seems that Kubernetes is still the most commonly used application execution platform.

KINTO Technologies uses Amazon ECS + Fargate as its application execution platform, so I would like to introduce this as a (probably) rare example of automated environment building for ECS.

Background

Challenges

- No environment is available when application developers need one (during verification/launch).
- As part of our DevOps activities, I researched AutoProvisioning (automated environment building) and felt that it was common practice, but we did not have it within our company.
- There is a large difference between an environment built in a sandbox with a relatively high degree of freedom and an environment built according to the design patterns provided by Platform Group.
  - IAM permissions and security
  - Presence of common components such as VPC/Subnet/NAT Gateway
  - etc.
- As a result, communication costs become higher for both parties when requesting a build.

Solution

Why not create an automated building mechanism?

Because the build follows a design pattern, some AWS services may be missing, but that is tolerable, and presumably they will be added manually.

As a first step, it's worthwhile to automatically build an environment on AWS in about an hour so that you can check the operation of your application and prepare for CI/CD.

Let's Make It

Thankfully, our Terraform code is becoming more modular, so we can build environments in a variety of patterns by simply writing a single file (locals.tf). With that as a base, I set the following requirements:

- Use in-house modules (must)
- Build with in-house design patterns as a base (must)
- Configure DNS automatically so that communication is possible via HTTPS
- Generate locals.tf automatically
  - I prototyped an application to see whether locals.tf could be structured and generated using Golang's hclwrite package.
  - After prototyping, I found that structuring was difficult, so I eventually gave up on automatic generation.
  - Instead, I handled it by replacing some parameters in a template file.
  - Because the process is a simple replacement, detailed settings for each component are not possible.

The Final Result

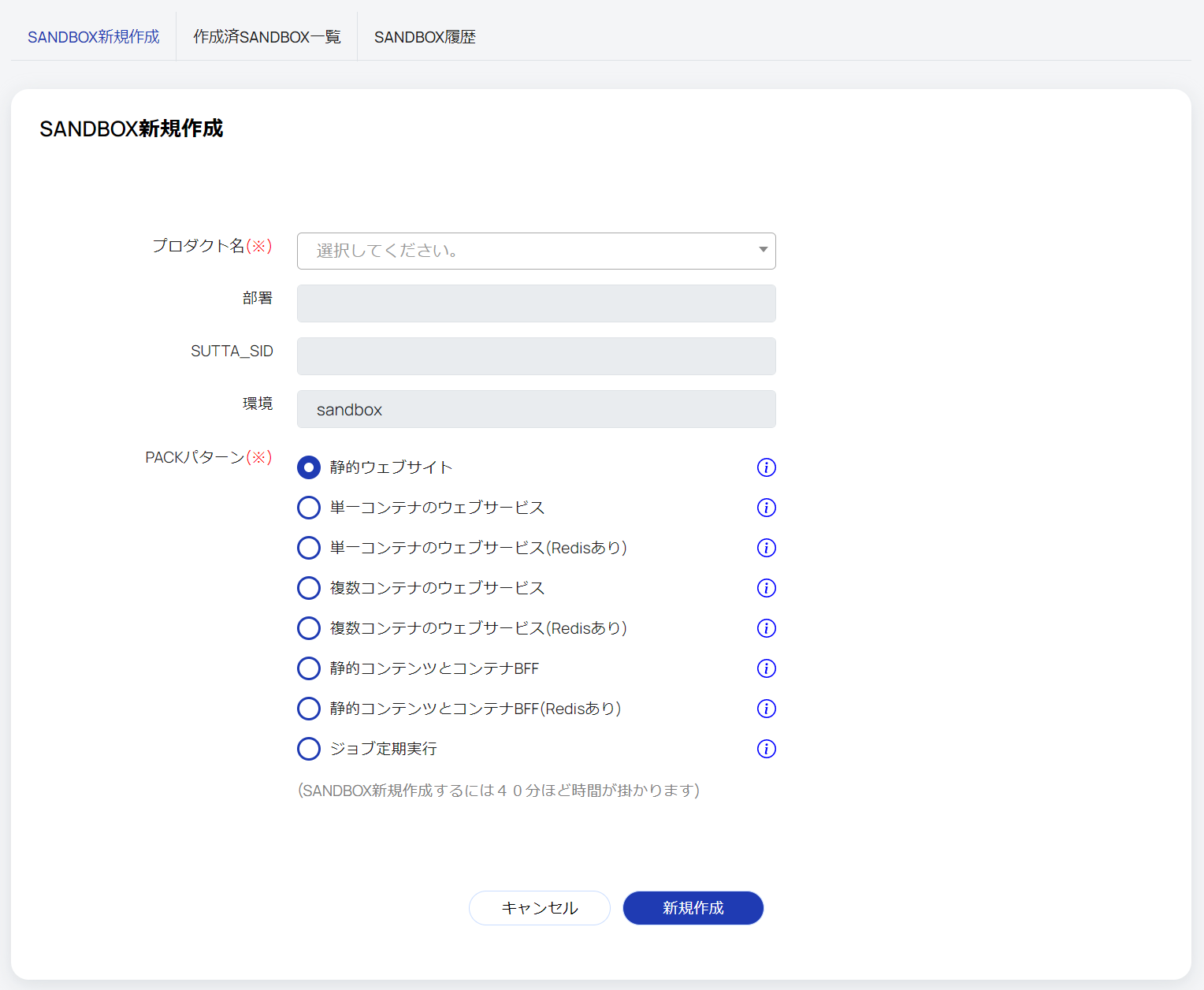

From the GUI on the CMDB, select:

- Product
- Design pattern

When you select these and click Create New, the specified configuration is built in the sandbox environment of the department associated with the product in 10 to 40 minutes (depending on the configuration).

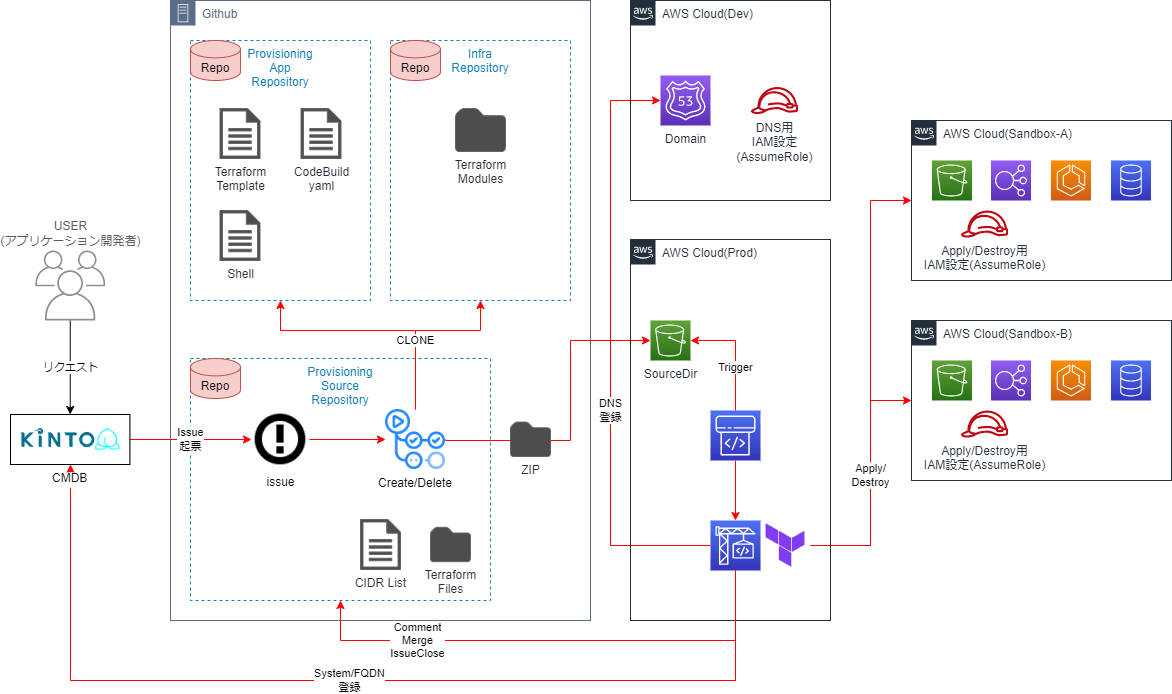

Overall Configuration

Individual Explanation

I separated the part that creates the Terraform code from the part that actually builds it in the sandbox environment so that they could be tested separately.

Terraform Code Generation Parts

- ProvisioningSourceRepo
  - Issue management
  - GitHub Actions execution
  - Terraform code for each created sandbox environment
  - CIDR list for each sandbox environment
- ProvisioningAppRepo
  - Templates for the design patterns
  - YAML (buildspec.yml) for CodeBuild
  - Various shell scripts that run on CodeBuild
- InfraRepo
  - Terraform modules

AWS Environment Building Part

- S3
  - Source and Artifact for CodePipeline
- EventBridge
  - CodePipeline trigger
- CodePipeline/CodeBuild
  - The environment that runs the actual build
- Route53 (Dev)
  - Authority is delegated from the production DNS so that Route53 in the Dev environment can be used

Terratest (Apply)

The Terratest sample looks like this. The steps are nested so that if any of Init, Plan, or Apply fails, the test ends there. If the Apply step fails midway, the test destroys whatever was applied up to that point. I think you will be able to write it more neatly if you have knowledge of Golang.

    package test

    import (
        "testing"

        "github.com/gruntwork-io/terratest/modules/terraform"
    )

    func TestTerraformInitPlanApply(t *testing.T) {
        t.Parallel()

        awsRegion := "ap-northeast-1"

        terraformOptions := &terraform.Options{
            // Placeholder: the path that contains the Terraform files, followed by
            // the UUID of the build. data.uuid is not defined in this sample.
            TerraformDir: "PATH containing the Terraform files" + data.uuid,
            EnvVars: map[string]string{
                "AWS_DEFAULT_REGION": awsRegion,
            },
        }

        // Run Plan only if Init succeeds, and Apply only if Plan succeeds.
        // The steps are nested with if statements; written side by side,
        // every step would still run as a test even after Init fails.
        if _, err := terraform.InitE(t, terraformOptions); err != nil {
            t.Errorf("Terraform Init Error: %v", err)
        } else {
            if _, err := terraform.PlanE(t, terraformOptions); err != nil {
                t.Errorf("Terraform Plan Error: %v", err)
            } else {
                if _, err := terraform.ApplyE(t, terraformOptions); err != nil {
                    t.Errorf("Terraform Apply Error: %v", err)
                    // Clean up whatever was created before Apply failed.
                    terraform.Destroy(t, terraformOptions)
                } else {
                    // Completed successfully.
                }
            }
        }
    }

Elements

| Name | Overview |
|---|---|
| CMDB (developed in-house) | A configuration management database. Since rich functions were unnecessary, KINTO Technologies developed its own CMDB. On top of it, we have created a request form for automatic building. After a build, the FQDN and other information are automatically registered in the CMDB. |
| Terraform | An IaC product for defining various services, AWS among them, as code. Our in-house design patterns and modules are written in Terraform. |
| GitHub | A version control system for storing source code. Build requests are logged by raising an Issue. Since the Terraform code is also required for deletion and similar operations, we store the code for each sandbox environment here as well. |
| GitHub Actions | The CI/CD tool included in GitHub. At KINTO Technologies, we use GitHub Actions for tasks such as building and releasing applications. In this case, the filing of an issue is the trigger: the workflow determines whether to Create or Delete, selects the necessary group of code, compresses it, and hands it over to AWS. |
| CodePipeline/CodeBuild | CI/CD tools provided by AWS. We use them to run the Terraform code. We could run Terraform/Terratest on GitHub Actions, but since we use GitHub Actions daily for application builds, we chose these tools to avoid affecting each product team through usage limits and the like. |
| Terratest | A Go library for testing infrastructure code. It can also test modules, but in this case we use it to recover from failures in the middle of Terraform Apply. |
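To make the Create/Delete decision in the GitHub Actions row more concrete, here is a rough sketch of that branching written in Go. The article does not show how the issues are actually formatted, so the title convention `[create] product/pattern`, the `Request` type, and `ParseIssueTitle` are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"strings"
)

// Request is what the workflow needs to know. The fields are hypothetical.
type Request struct {
	Action  string // "create" or "delete"
	Product string
	Pattern string
}

// ParseIssueTitle parses titles of the assumed form "[create] product/pattern".
func ParseIssueTitle(title string) (Request, error) {
	title = strings.TrimSpace(title)
	if !strings.HasPrefix(title, "[") {
		return Request{}, fmt.Errorf("missing action tag in %q", title)
	}
	tag, rest, found := strings.Cut(title[1:], "]")
	if !found {
		return Request{}, fmt.Errorf("unterminated action tag in %q", title)
	}
	action := strings.ToLower(strings.TrimSpace(tag))
	if action != "create" && action != "delete" {
		return Request{}, fmt.Errorf("unknown action %q", action)
	}
	product, pattern, found := strings.Cut(strings.TrimSpace(rest), "/")
	if !found || product == "" || pattern == "" {
		return Request{}, fmt.Errorf("expected product/pattern in %q", title)
	}
	return Request{Action: action, Product: product, Pattern: pattern}, nil
}

func main() {
	for _, title := range []string{"[create] shop/ecs-alb", "[remove] shop/ecs-alb"} {
		req, err := ParseIssueTitle(title)
		if err != nil {
			fmt.Println("rejected:", err)
			continue
		}
		fmt.Printf("%s %s using pattern %s\n", req.Action, req.Product, req.Pattern)
	}
}
```

Rejecting anything that is not an explicit create or delete keeps a mistyped issue from starting a build or a teardown by accident.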

Restrictions

- We target multiple sandbox environments (AWS accounts) associated with each development team, but only one can be created at a time (exclusive).
  - This is because CodePipeline/CodeBuild run in the same environment due to DNS.
- We also create parts that the application does not use.
  - It may seem like there is a lot of waste, but this is due to the design pattern being built.
  - It is built as a seamless line from FQDN to DB.
- You need to set the VPC, etc. in the Module beforehand.
  - You need to build a set of common components such as VPC beforehand.

What to Do if There Are No Modules

KINTO Technologies has been working on design patterns for some time, so we have the advantage of being able to easily use Terraform to build everything from CloudFront to RDS. What can you do if you haven't progressed that far but still want to implement AutoProvisioning using ECS?

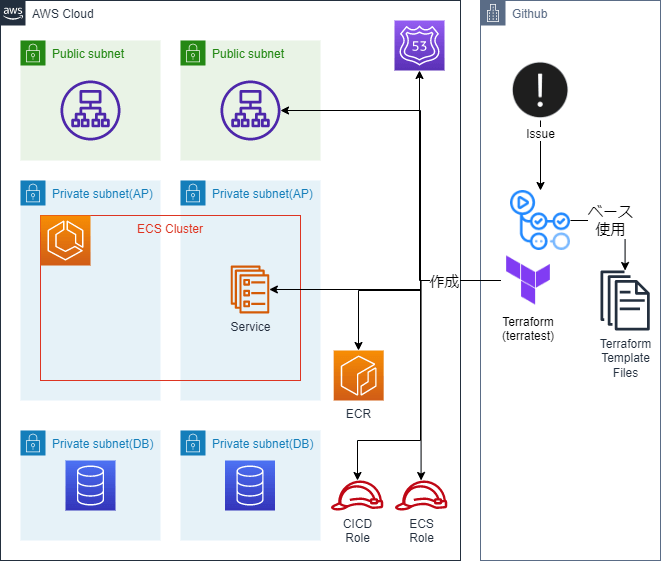

I Thought About It

Create everything up to the ECS Cluster in advance.

- ECS Service

- ECR Repository

- ALB TargetGroup

- ALB ListenerRule

- IAM Role

- Route53

I think it would be easier to prepare a Terraform file with the above, and then build it. TaskDefinition can be created if you have permission, so it's up to the user.

Configuration Proposal

I think CodePipeline/CodeBuild would be fine instead of GitHub Actions, but when you consider the need to prepare a GUI like CodeCommit, wouldn't it be easier to just put it all together on GitHub? So, here is the configuration. I haven't used AWS Proton yet, so I haven't considered it.

I think it would be possible to separate the Parameter parts such as locals.tf and create them using the sed command or Golang's HCL library. Once you have confirmed the build using Terratest, etc., add any FQDN to the ALB alias and match it with the ListenerRule.
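As a rough illustration of the sed-style option, the sketch below rewrites the value of a single `key = "value"` line in a locals.tf-like text using Go's standard `regexp` package. `SetLocal`, the keys, and the sample file contents are hypothetical; a real file with nested blocks or multi-line values would need a proper HCL library instead.

```go
package main

import (
	"fmt"
	"regexp"
	"strconv"
)

// SetLocal replaces the quoted value on every line of the form `key = "..."`.
// It is roughly what a sed substitution would do and knows nothing about
// HCL structure. Assumption: values are plain strings, for which Go's
// quoting rules produce valid HCL.
func SetLocal(src, key, value string) string {
	re := regexp.MustCompile(`(?m)^([ \t]*` + regexp.QuoteMeta(key) + `[ \t]*=[ \t]*)"[^"]*"`)
	return re.ReplaceAllStringFunc(src, func(match string) string {
		// Keep the indentation and the `key = ` prefix, swap only the value.
		prefix := re.FindStringSubmatch(match)[1]
		return prefix + strconv.Quote(value)
	})
}

func main() {
	src := "locals {\n  product = \"TEMPLATE\"\n  fqdn    = \"TEMPLATE\"\n}\n"
	out := SetLocal(src, "product", "shop")
	out = SetLocal(out, "fqdn", "shop.sandbox.example.com")
	fmt.Print(out)
}
```

A key that does not appear in the text leaves it unchanged, so it is worth checking afterwards that every expected parameter was actually replaced.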

Next Steps

Originally, we had hoped to offer it in advance to get feedback, but at present it hasn't been used much. We have provided a GUI for this purpose, and we plan to start by having a variety of people use it and receive feedback.

However, I think there are many things we can do, such as increasing the number of compatible design patterns and simplifying the associated CI/CD settings. I would really like to introduce Kubernetes and then move on to AutoProvisioning, which has many applications.

|・ω・`) Is that not possible?

Impressions

To be honest, I tried hard to automatically generate templates using Golang, but gave up because the HCL structure of our in-house design patterns was difficult to analyze and reconstruct. There was some talk internally about this being a reinvention of the console, but if we could get that far, I think we might be able to automate not only the sandbox environment but also the STG environment. For Platform Group, the environment can be created simply by tapping and selecting a few items on the GUI. It's really simple.

To be honest, I wanted to reach that level, but I think it was good that I was able to take even the first step.

In Kubernetes, I think it might be possible to create something similar by preparing a Helm chart as a template. I would like to consider alternative methods and try various things.

Summary

The Operation Tool Manager Team oversees and develops tools used internally throughout the organization. As I wrote in my previous O11y article, we organize the mechanisms and present them to application developers so that they can use them on a self-service basis, supporting the creation of value by these developers.

A Platform Engineering meetup was held a little while ago, and it's reassuring to know that this is in line with the direction we're moving forward in. The Operation Tool Manager team also has an in-house tool building department, allowing developers to quickly and intensively create value for their applications.

Please feel free to contact us if you are interested in any of these activities or would like to hear from us.
