A Guide to Building a Local S3 Development Environment with MinIO (AWS SDK for Java 2.x)

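Updates and Corrections

The first version of this post included Java code written with AWS SDK for Java V1. Because V1 has since entered maintenance mode, Spring Boot now prints a warning message at startup.

For that reason, I migrated to AWS SDK for Java V2 and rewrote the source code for V2. I also added code snippets for the operations you are likely to need, so that you can copy and paste them.

In addition, when I set up MinIO with Docker Compose on GitHub Actions, mc (MinIO Client) ran before MinIO had finished starting, and the commands failed.

To solve this, I added a health check so that mc runs only after MinIO has finished starting.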

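Introduction and Summary

Hello. I'm Miyashita, an engineer responsible for membership management in the Common Services Development Group[1][2][3][4] at KINTO Technologies.

Today I'll talk about how we solved a problem we faced in our development work: building an S3-compatible local storage environment.

Specifically, I'll share a practical approach to emulating AWS S3 with the open-source tool MinIO.

I hope this article is useful to engineers facing the same problem.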

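What Is MinIO?

MinIO is an open-source, S3-compatible object storage server. Like a NAS, it lets you upload and download files.

LocalStack is a similar tool in this space. It specializes in emulating AWS and can run S3 as well as services such as Lambda, SQS, and DynamoDB locally.

The two tools serve different purposes, but both meet the requirement of providing an S3-compatible environment on a local machine.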

MinIO website

LocalStack website

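Choosing Between MinIO and LocalStack

Development Requirements

Our requirement was that simply running docker-compose would automatically create the S3 buckets we need and populate them with files such as email templates and CSV files.

Registering files by command or through the GUI after the containers have started is tedious.

Automated S3 connection tests on a local machine also need the buckets and files to be ready as soon as the containers start.

Tool Comparison

We compared how easily each tool could meet these requirements. With LocalStack, you create buckets and manipulate files through aws-cli, whereas MinIO provides mc (MinIO Client), a dedicated command-line tool. This made the setup simpler to build with MinIO.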

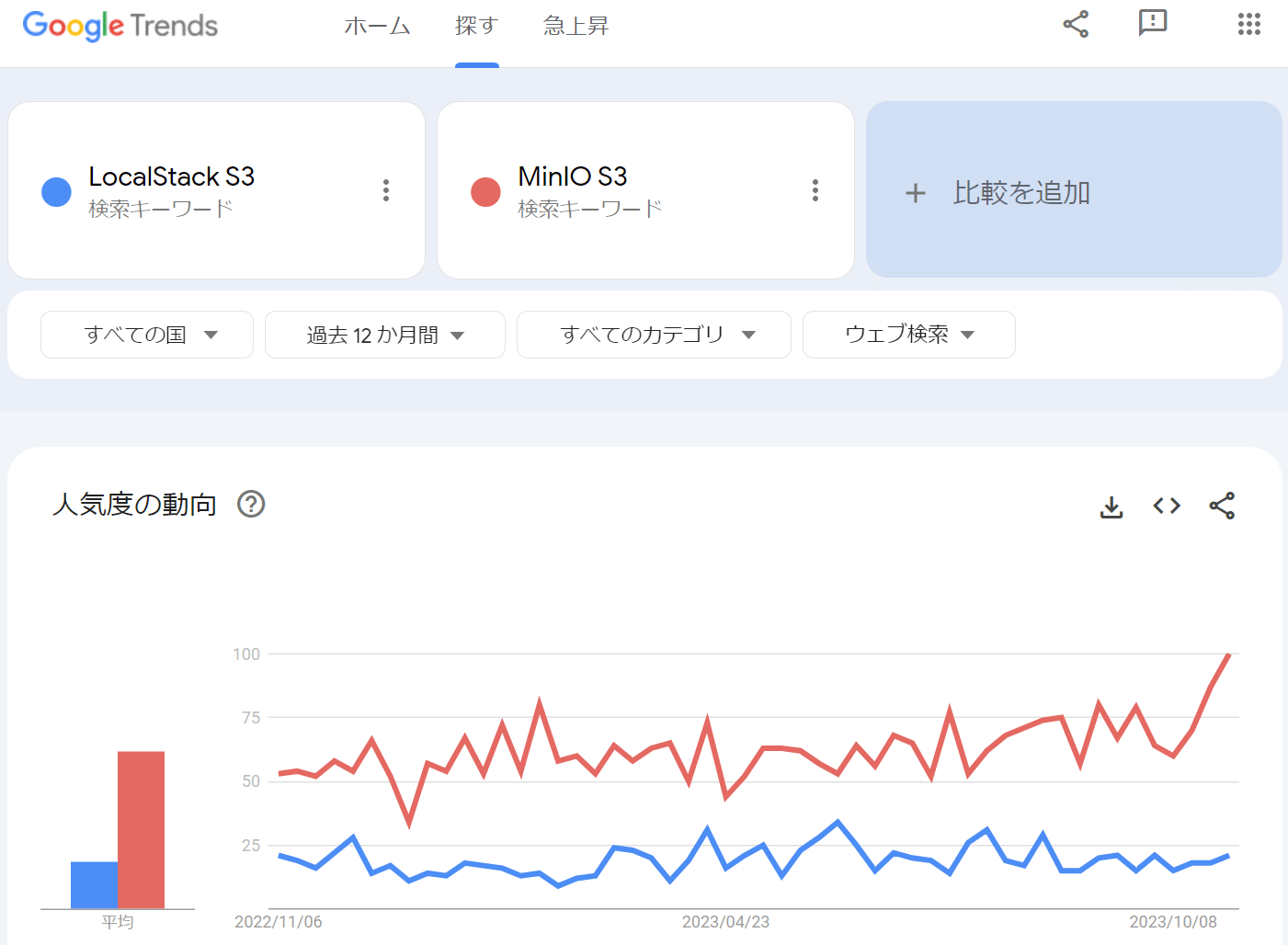

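In addition, I found MinIO's GUI management console to be more polished. A Google Trends comparison also shows that MinIO is the more popular of the two. For these reasons, we decided to adopt MinIO.

The Compose File

To set up a local MinIO environment, first prepare a compose.yaml file.

Follow these steps.

- Create a directory of your choice.

- In that directory, create a text file named compose.yaml.

- Copy and paste the contents of the compose.yaml below and save the file.

Note: docker-compose.yml is no longer the recommended file name. See the Compose file specification.

Note: docker-compose.yml still works through backward compatibility. Details are available at the linked page.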

services:
  # MinIO server container
  minio:
    container_name: minio_test
    image: minio/minio:latest
    # Start the MinIO server and set the access port for the management console (GUI)
    command: ['server', '/data', '--console-address', ':9001']
    ports:
      - "9000:9000" # API access
      - "9001:9001" # Management console (GUI)
    # USER and PASSWORD can be omitted.
    # In that case they are set to minioadmin | minioadmin automatically.
    environment:
      - "MINIO_ROOT_USER=minio"
      - "MINIO_ROOT_PASSWORD=minio123"
    # Health check to confirm that MinIO has finished starting
    healthcheck:
      test: [ "CMD", "curl", "-f", "http://localhost:9000/minio/health/live" ]
      interval: 1s
      timeout: 20s
      retries: 20
    # Mount a local directory if you want to browse MinIO's configuration files
    # and uploaded files locally, or if you want uploaded files to persist.
    # volumes:
    #   - ./minio/data:/data
    # Enable this if the minio container should come back up automatically,
    # for example after the PC restarts or whenever the container has stopped.
    # restart: unless-stopped

  # MinIO Client (mc) container
  mc:
    image: minio/mc:latest
    container_name: mc_test
    depends_on:
      minio:
        # Run mc only after MinIO has been confirmed as started
        condition: service_healthy
    environment:
      - "MINIO_ROOT_USER=minio" # Same user name as above
      - "MINIO_ROOT_PASSWORD=minio123" # Same password as above
    # Use mc commands to create the buckets and place files in them.
    # First set an alias so that later commands can refer to the MinIO server easily.
    # This example uses the alias name myminio.
    # mb creates a new bucket (short for "make bucket").
    # cp copies local files to MinIO.
    entrypoint: >
      /bin/sh -c "
      mc alias set myminio http://minio:9000 minio minio123;
      mc mb myminio/mail-template;
      mc mb myminio/image;
      mc mb myminio/csv;
      mc cp init_data/mail-template/* myminio/mail-template/;
      mc cp init_data/image/* myminio/image/;
      mc cp init_data/csv/* myminio/csv/;
      "
    # Mount the directory that contains the files to upload to MinIO.
    volumes:
      - ./myData/init_data:/init_data
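The health check above is a retry loop: probe the liveness endpoint, wait, and try again up to a fixed number of times. As a rough sketch of the same idea from the Java side (for example, before running local tests), the class below polls `/minio/health/live` with the JDK's `HttpClient`. The class and method names are hypothetical, and treating only 2xx responses as healthy is an assumption of this sketch, not something taken from the compose file.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.function.BooleanSupplier;

public class MinioHealthWaiter {

    /** Runs the probe up to maxRetries times, sleeping between attempts; true as soon as it passes. */
    public static boolean waitUntilHealthy(BooleanSupplier probe, int maxRetries, Duration interval) {
        for (int attempt = 1; attempt <= maxRetries; attempt++) {
            if (probe.getAsBoolean()) {
                return true;
            }
            if (attempt < maxRetries) {
                try {
                    Thread.sleep(interval.toMillis());
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    return false;
                }
            }
        }
        return false;
    }

    /** Probe for the liveness URL used in the compose health check (assumption: 2xx means healthy). */
    public static BooleanSupplier livenessProbe(String baseUrl) {
        HttpClient client = HttpClient.newBuilder().connectTimeout(Duration.ofSeconds(2)).build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/minio/health/live"))
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
        return () -> {
            try {
                int status = client.send(request, HttpResponse.BodyHandlers.discarding()).statusCode();
                return status >= 200 && status < 300;
            } catch (IOException e) {
                return false; // server is not accepting connections yet
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                return false;
            }
        };
    }

    public static void main(String[] args) {
        // Same numbers as the compose file: 20 retries, 1 second apart.
        boolean ready = waitUntilHealthy(livenessProbe("http://127.0.0.1:9000"), 20, Duration.ofSeconds(1));
        System.out.println(ready ? "MinIO is ready" : "MinIO did not become healthy in time");
    }
}
```

Keeping the probe as a `BooleanSupplier` separates the retry logic from the HTTP call, so the loop can be exercised without a running container.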

Directory and File Layout

Create some dummy files and start the environment with the following directory and file layout.

minio_test# tree .
.
├── compose.yaml
└── myData
    └── init_data
        ├── csv
        │   └── example.csv
        ├── image
        │   ├── slide_01.jpg
        │   └── slide_04.jpg
        └── mail-template
            └── mail.vm
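If you would rather generate the dummy files than create them by hand, a small Java program can lay out the same tree. This is only a sketch: the class name is hypothetical, the image files are empty placeholders rather than real JPEGs, and the mail template body is made up for illustration. The CSV content follows the example shown later in this article.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class InitDataScaffold {

    /** Creates myData/init_data with the csv, image, and mail-template directories under root. */
    public static List<Path> create(Path root) {
        Path initData = root.resolve("myData").resolve("init_data");

        // Relative path -> file content (placeholder content, for illustration only)
        Map<String, String> files = new LinkedHashMap<>();
        files.put("csv/example.csv",
                "name,age,job\ntanaka,30,engineer\nsuzuki,25,designer\nsatou,40,manager\n");
        files.put("image/slide_01.jpg", "");
        files.put("image/slide_04.jpg", "");
        files.put("mail-template/mail.vm", "Hello, ${name}!\n");

        List<Path> created = new ArrayList<>();
        try {
            for (Map.Entry<String, String> entry : files.entrySet()) {
                Path target = initData.resolve(entry.getKey());
                Files.createDirectories(target.getParent());
                Files.writeString(target, entry.getValue());
                created.add(target);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return created;
    }

    public static void main(String[] args) {
        // Run from the directory that contains compose.yaml.
        create(Path.of(".")).forEach(path -> System.out.println("created " + path));
    }
}
```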

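Startup and Verification

This section walks through running MinIO and its client on Docker and then checking the result.

Start the Docker containers in the background with the following command (using the -d flag).

If you have installed Docker Desktop (for Windows), you can create the containers from a command line such as Command Prompt or PowerShell.

Note: Docker Desktop can be downloaded from the linked page.

docker compose up -d

Note: the hyphen in the middle of docker-compose is no longer used. Details are available at the linked page.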

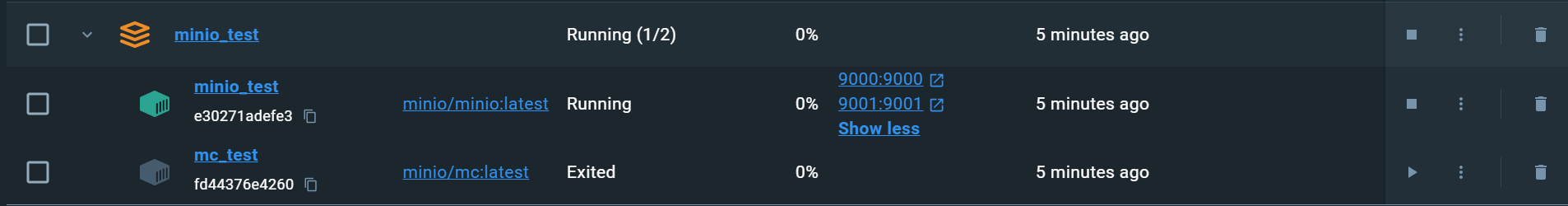

Docker Desktop

Docker Desktopを開いて、コンテナの状態をチェックします。

minio_test コンテナは起動していますが、mc_test コンテナが停止していることが確認できます。

mc_test コンテナの実行ログを確認してみましょう。

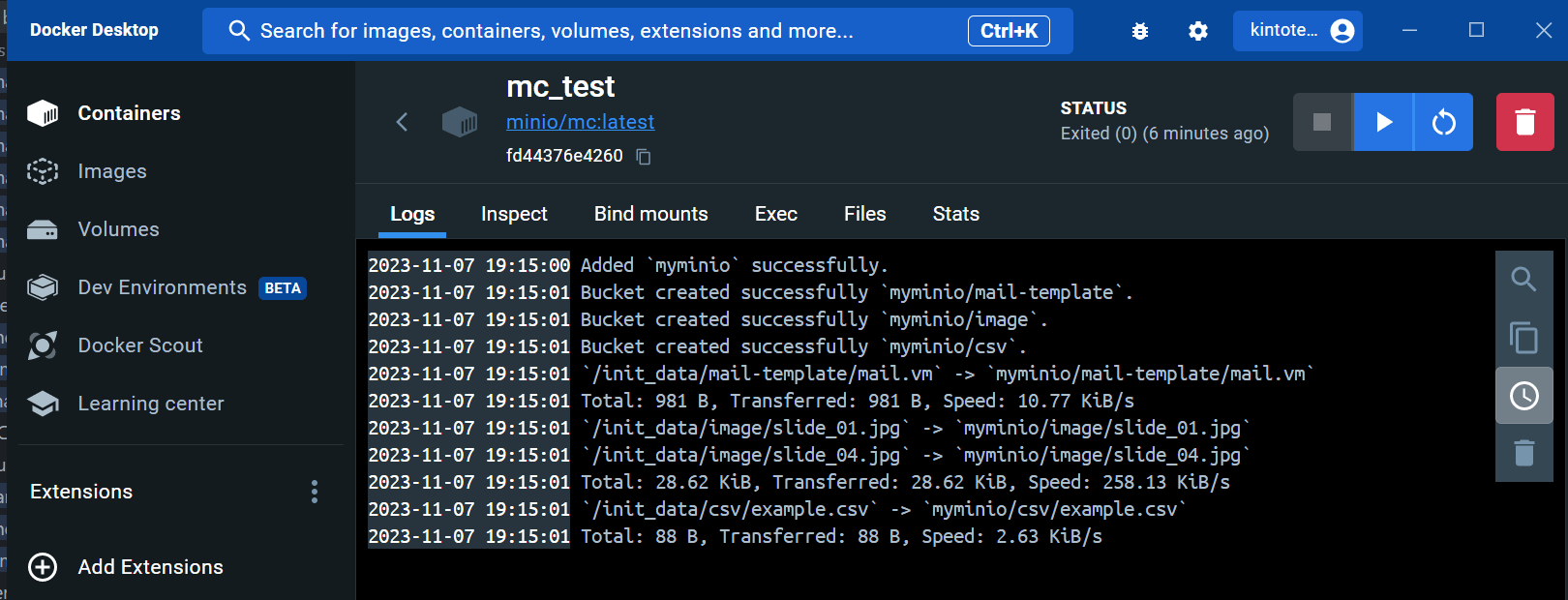

mcの実行ログ

MinIOクライアント(mc)が実行され、すべてのコマンドが正常に終了したことがログから分かります。

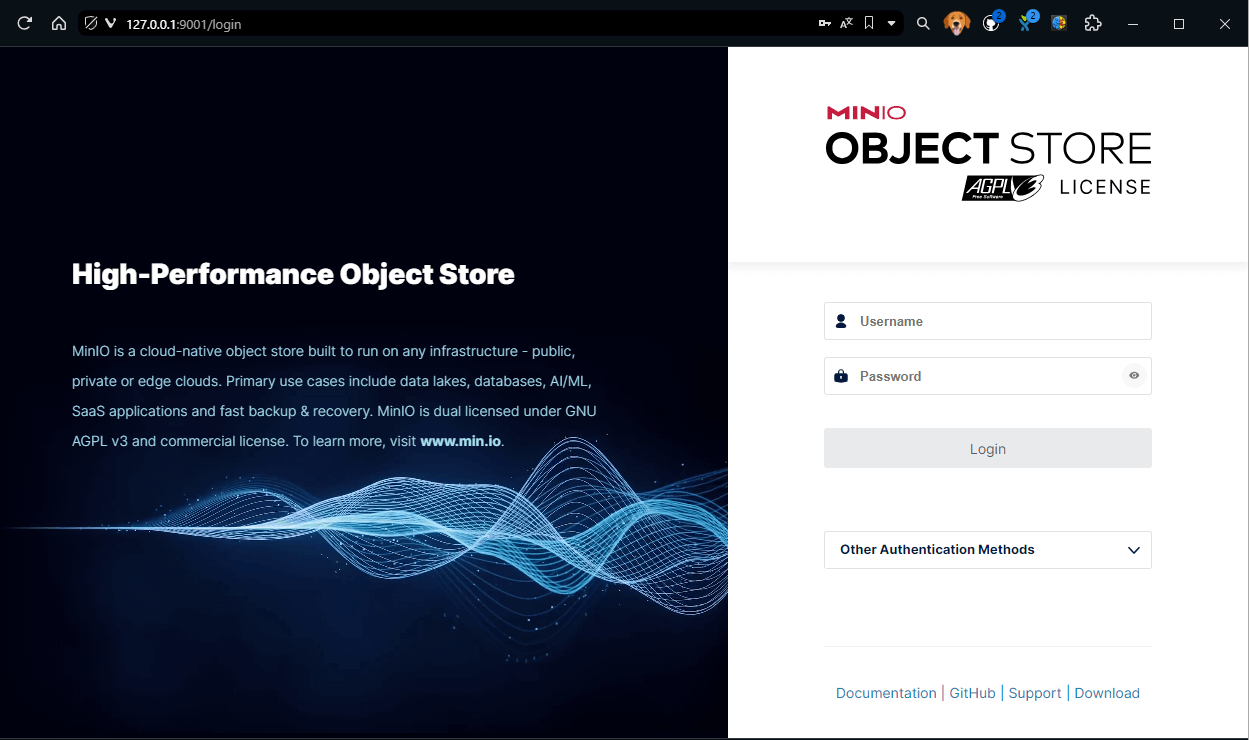

管理コンソール

次に、MinIOのGUI管理コンソールを見てみます。ブラウザで localhost の 9001 ポートにアクセスします。

http://127.0.0.1:9001

ログイン画面が表示されたら、compose.yaml で設定したユーザー名とパスワード

(この例では minio と minio123)を入力します。

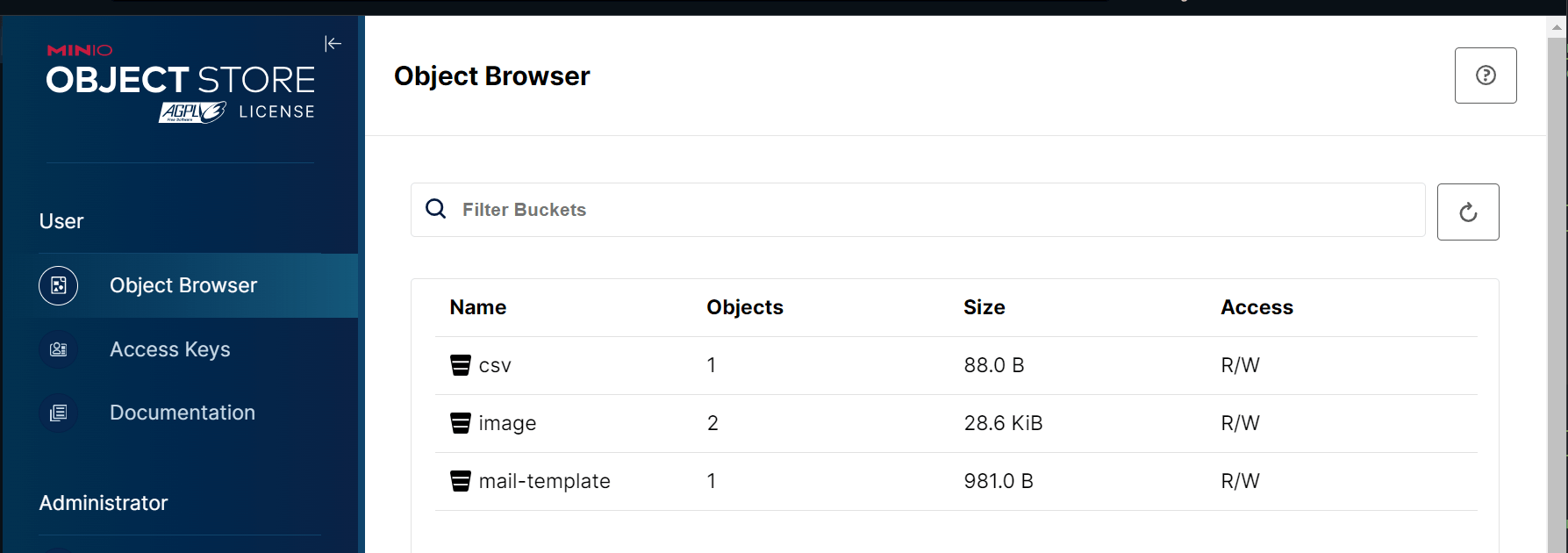

バケット一覧

左側のメニューから「Object Browser」を選択すると、

作成したバケットとそこに保存されているファイルの数が一覧で表示されます。

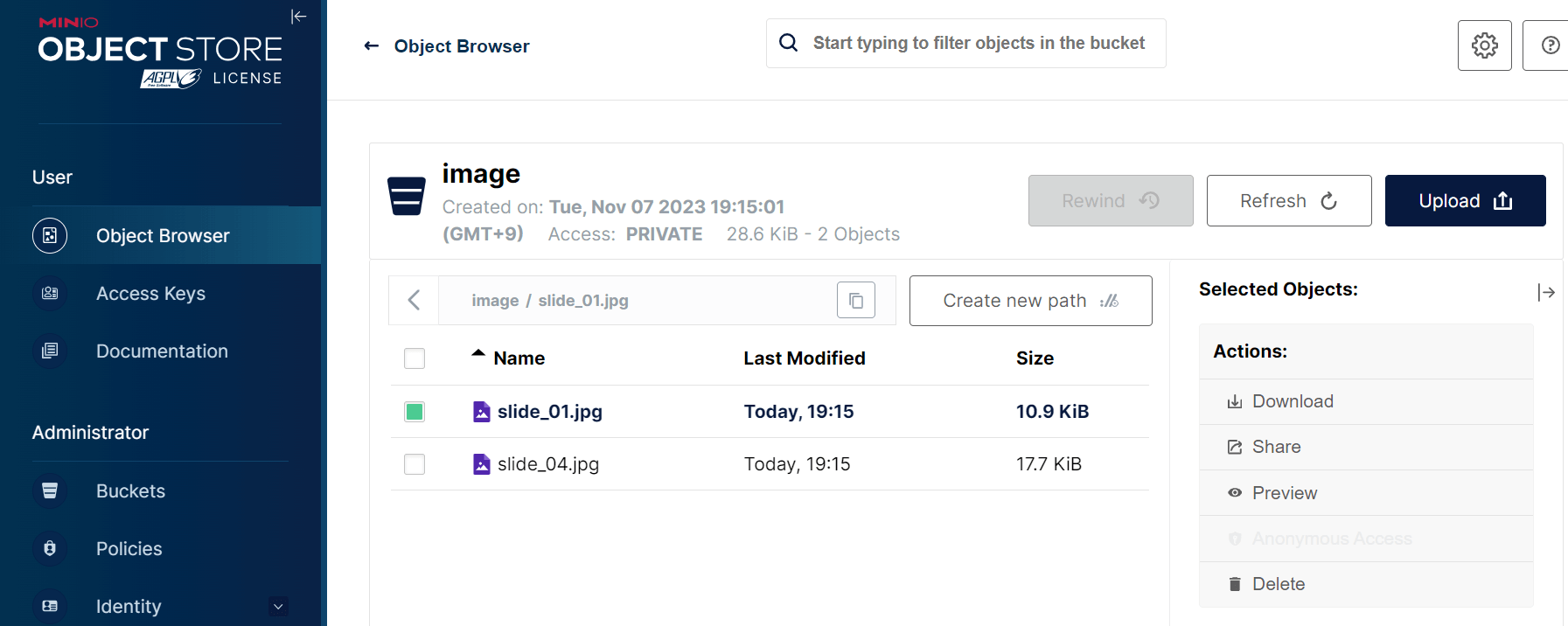

ファイル一覧

例として「image」バケットを選んで中を見てみます。

予めアップロードされているファイルが見えます。

ファイルの隣にあるアクションメニューから「プレビュー」を選ぶと、ファイルを直接確認できます。

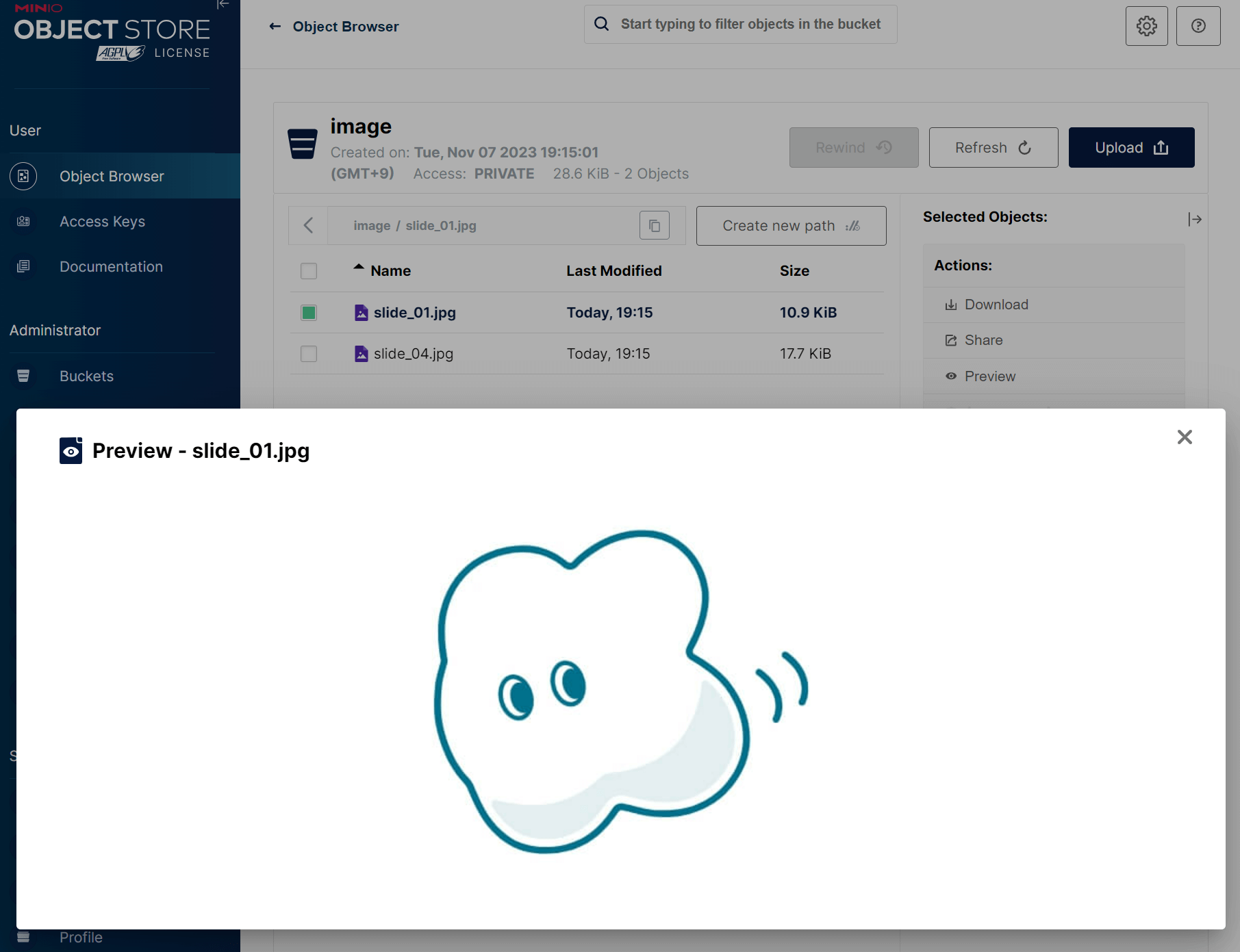

ファイルプレビュー機能

弊社のマスコットキャラクター くもびぃ がプレビューされました。

MinIOの管理コンソールで画像を直接プレビューできる機能は非常に便利です。

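Installing mc (MinIO Client)

For bulk file operations, the command line can be more efficient than the GUI.

The command line is also very handy for checking file paths when an error occurs while accessing MinIO from source code during development.

This section explains how to install MinIO Client and shows its basic operations.

Note: if the GUI management console is all you need, skip this section.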

# Download mc with the following command. The executable is saved to a directory of your choice.
minio_test/mc# curl https://dl.min.io/client/mc/release/linux-amd64/mc \
  --create-dirs \
  -o ./minio-binaries/mc

# Make the downloaded binary executable.
minio_test/mc# chmod +x ./minio-binaries/mc

# Verification
# Check that the installed mc is the latest version, then print the version to confirm it was installed correctly.
# Whether to add mc to your PATH is up to you. This example does not.
minio_test/mc# ./minio-binaries/mc update
> You are already running the most recent version of ‘mc’.

minio_test/mc# ./minio-binaries/mc -version
> mc version RELEASE.2023-10-30T18-43-32Z (commit-id=9f2fb2b6a9f86684cbea0628c5926dafcff7de28)
> Runtime: go1.21.3 linux/amd64
> Copyright (c) 2015-2023 MinIO, Inc.
> License GNU AGPLv3 <https://www.gnu.org/licenses/agpl-3.0.html>

# Setting the alias
# Set the alias needed to access the MinIO server.
minio_test/mc# ./minio-binaries/mc alias set myminio http://localhost:9000 minio minio123;
> Added `myminio` successfully.

# File operation examples
# List the files in a bucket
minio_test/mc# ./minio-binaries/mc ls myminio/image
> [2023-11-07 21:18:54 JST] 11KiB STANDARD slide_01.jpg
> [2023-11-07 21:18:54 JST] 18KiB STANDARD slide_04.jpg

minio_test/mc# ./minio-binaries/mc ls myminio/csv
> [2023-11-07 21:18:54 JST] 71B STANDARD example.csv

# Print the contents of a file
minio_test/mc# ./minio-binaries/mc cat myminio/csv/example.csv
> name,age,job
> tanaka,30,engineer
> suzuki,25,designer
> satou,40,manager

# Upload files in bulk
minio_test/mc# ./minio-binaries/mc cp ../myData/init_data/image/* myminio/image/;
> ...t_data/image/slide_04.jpg: 28.62 KiB / 28.62 KiB

# Delete a file
minio_test/mc# ./minio-binaries/mc ls myminio/mail-template
> [2023-11-15 11:46:25 JST] 340B STANDARD mail.txt

minio_test/mc# ./minio-binaries/mc rm myminio/mail-template/mail.txt
> Removed `myminio/mail-template/mail.txt`.
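The mc commands above address objects as ALIAS/BUCKET/KEY. When an application logs a bucket name and key after a failed access, it can help to print the matching mc command so the path can be checked on the command line. The helper below is a hypothetical sketch of that idea; the class and method names are not part of mc or of the AWS SDK.

```java
public class McTargets {

    /** Joins alias, bucket, and key into the ALIAS/BUCKET/KEY form used by the mc commands. */
    public static String toMcTarget(String alias, String bucket, String key) {
        if (key == null || key.isEmpty()) {
            return alias + "/" + bucket; // refers to the bucket itself
        }
        return alias + "/" + bucket + "/" + key;
    }

    /** Builds an "mc ls" command line for the given object or bucket. */
    public static String lsCommand(String alias, String bucket, String key) {
        return "mc ls " + toMcTarget(alias, bucket, key);
    }

    public static void main(String[] args) {
        // Uses the alias and bucket names from this article.
        System.out.println(lsCommand("myminio", "csv", "example.csv"));
        System.out.println(lsCommand("myminio", "image", ""));
    }
}
```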

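mc Command Reference

If you need detailed documentation on MinIO Client, see the official manual.

The official MinIO Client manual is available at the linked page.

Accessing MinIO from Java Source Code (AWS SDK for Java 2.x)

Now that the S3-compatible development environment is running locally with MinIO, this section shows how to access MinIO from an actual Java application.

First, configure Gradle.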

plugins {
    id 'java'
}

java {
    sourceCompatibility = '17'
}

repositories {
    mavenCentral()
}

dependencies {
    // The first version of this post used 1.x; updated to AWS SDK for Java 2.x
    // https://mvnrepository.com/artifact/software.amazon.awssdk/s3
    implementation 'software.amazon.awssdk:s3:2.28.28'
}

Next, create a Java class for accessing MinIO.

I wrote as many of the likely operations as I could think of, so that you can copy and paste them.

import java.io.*;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import software.amazon.awssdk.auth.credentials.AwsBasicCredentials;
import software.amazon.awssdk.auth.credentials.InstanceProfileCredentialsProvider;
import software.amazon.awssdk.auth.credentials.StaticCredentialsProvider;
import software.amazon.awssdk.core.ResponseInputStream;
import software.amazon.awssdk.core.sync.RequestBody;
import software.amazon.awssdk.regions.Region;
import software.amazon.awssdk.services.s3.S3Client;
import software.amazon.awssdk.services.s3.model.*;
import software.amazon.awssdk.services.s3.paginators.ListObjectsV2Iterable;

public class Main {

  public static void main(String[] args) {
    try {
      new Main().execute();
    } catch (Exception e) {
      System.out.println("ERROR: " + e.getMessage());
    }
  }

  /** S3 client */
  private S3Client s3Client;

  /**
   * Intended to be switched by a Spring Boot profile.
   * For a batch job, switch it with a startup argument.
   */
  private final boolean isLocal = true;

  /** MinIO S3 compatibility test */
  private void execute() throws IOException {
    System.out.println("----- Start -----");

    // Initialize the S3 client: connect to MinIO when local, to AWS on a server
    if (isLocal) {
      s3Client = getS3ClientForLocal();
    } else {
      s3Client = getS3ClientForAwsS3();
    }

    final String bucketName = "csv";

    // 1. List the files in the bucket
    List<String> fileList = getFileList(bucketName);
    System.out.println("Files in bucket " + bucketName + ":");
    fileList.forEach(file -> System.out.println(" - " + file));

    // 2. Read file contents as a stream (without downloading the file)
    for (String fileKey : fileList) {
      System.out.println("\nReading line by line as a stream. File name: " + fileKey);
      try (InputStream s3is =
              s3Client.getObject(
                  GetObjectRequest.builder().bucket(bucketName).key(fileKey).build());
          BufferedReader reader =
              new BufferedReader(new InputStreamReader(s3is, StandardCharsets.UTF_8))) {
        String line;
        while ((line = reader.readLine()) != null) {
          System.out.println(line);
        }
      }
    }

    // 3. Read the whole file into a String
    final String sourceKey = "example.csv";
    System.out.println("\nReading all at once. File name: " + sourceKey);
    String fileContents = getStringFromS3File(bucketName, sourceKey);
    System.out.println(fileContents);

    // 4. Copy a file within the same bucket
    final String destinationKey = "example_copy.csv";
    copyObject(bucketName, sourceKey, destinationKey);
    System.out.println(
        "\nFile copy succeeded: "
            + bucketName
            + "/"
            + sourceKey
            + " -> "
            + bucketName
            + "/"
            + destinationKey);

    // 5. Create a bucket
    final String tmpBucketName = "tmp-bucket";
    createBucketIfNotExists(tmpBucketName);
    System.out.println(
        "\nBucket creation succeeded: " + tmpBucketName + ": " + doesBucketExist(tmpBucketName));

    // 6. Move a file between buckets
    moveObjectBetweenBuckets(bucketName, destinationKey, tmpBucketName, destinationKey);
    System.out.println(
        "\nMove succeeded: "
            + tmpBucketName
            + "/"
            + destinationKey
            + ": "
            + doesFileExistInS3(tmpBucketName, destinationKey));

    // 7. Upload a file from a String
    String newFileName = "string.txt";
    String fileContent = "memo memo memo";
    putObject(tmpBucketName, newFileName, fileContent);
    System.out.println("\nFile upload succeeded (String): " + tmpBucketName + "/" + newFileName);

    // 8. Upload a file from a File object
    Path filePath = Files.writeString(Paths.get("file.txt"), "This is a sample file content.");
    putFileObject(tmpBucketName, filePath.toFile());
    System.out.println(
        "\nFile upload succeeded (File): " + tmpBucketName + "/" + filePath.toFile().getName());

    // 9. Rename a file
    String renameFileName = "renamed.txt";
    renameObject(tmpBucketName, filePath.toFile().getName(), renameFileName);
    System.out.println(
        "\nFile rename succeeded: "
            + renameFileName
            + ": "
            + doesFileExistInS3(tmpBucketName, renameFileName));

    // 10. Download a file to the local machine
    String downloadFileName = "download.csv";
    downloadObject(bucketName, sourceKey, downloadFileName);
    System.out.println(
        "\nFile download succeeded: " + downloadFileName + ": " + new File(downloadFileName).exists());

    // 11. Create a directory for today's date and back up the file there
    String backupFilePath =
        LocalDateTime.now().format(DateTimeFormatter.ofPattern("/yyyy/MM/dd/"))
            + filePath.toFile().getName();
    putFileObjectWithKey(tmpBucketName, backupFilePath, filePath.toFile());
    System.out.println("\nBackup succeeded: " + doesFileExistInS3(tmpBucketName, backupFilePath));

    // 12. Delete a file (renamed.txt lives in tmp-bucket, so delete it from there)
    deleteObject(tmpBucketName, renameFileName);
    System.out.println("\nFile deletion succeeded: " + renameFileName);

    System.out.println("----- End -----");
  }

  /** S3 client configuration (for local use) */
  private S3Client getS3ClientForLocal() {
    final String id = "minio";
    final String pass = "minio123";
    final String endpoint = "http://127.0.0.1:9000";

    return S3Client.builder()
        .credentialsProvider(StaticCredentialsProvider.create(AwsBasicCredentials.create(id, pass)))
        .endpointOverride(URI.create(endpoint))
        .region(Region.AP_NORTHEAST_1)
        .forcePathStyle(true)
        .build();
  }

  /** S3 client configuration (for AWS) */
  private S3Client getS3ClientForAwsS3() {
    return S3Client.builder()
        .credentialsProvider(InstanceProfileCredentialsProvider.builder().build())
        .region(Region.AP_NORTHEAST_1)
        .build();
  }

  /** Lists the files in the given bucket */
  public List<String> getFileList(String bucketName) throws S3Exception {
    List<String> fileNameList = new ArrayList<>();
    ListObjectsV2Request request = ListObjectsV2Request.builder().bucket(bucketName).build();
    ListObjectsV2Iterable response = s3Client.listObjectsV2Paginator(request);
    response.stream()
        .forEach(result -> result.contents().forEach(s3Object -> fileNameList.add(s3Object.key())));
    return fileNameList;
  }

  /** Reads the contents of an S3 file into a String */
  public String getStringFromS3File(String bucketName, String s3key) throws IOException {
    String fileContentsString;
    GetObjectRequest getObjectRequest =
        GetObjectRequest.builder().bucket(bucketName).key(s3key).build();
    try (ResponseInputStream<GetObjectResponse> s3InputStream =
            s3Client.getObject(getObjectRequest);
        BufferedReader reader =
            new BufferedReader(new InputStreamReader(s3InputStream, StandardCharsets.UTF_8))) {
      fileContentsString = reader.lines().collect(Collectors.joining("\n"));
    }
    return fileContentsString;
  }

  /** Creates a bucket (only if it does not exist yet) */
  public void createBucketIfNotExists(String bucketName) {
    if (doesBucketExist(bucketName)) {
      System.out.println("Bucket already exists: " + bucketName);
    } else {
      createBucket(bucketName);
    }
  }

  /** Checks whether a bucket exists */
  private boolean doesBucketExist(String bucketName) {
    try {
      s3Client.headBucket(HeadBucketRequest.builder().bucket(bucketName).build());
      return true;
    } catch (NoSuchBucketException e) {
      return false;
    }
  }

  /** Creates a bucket */
  private void createBucket(String bucketName) {
    CreateBucketRequest createBucketRequest =
        CreateBucketRequest.builder()
            .bucket(bucketName)
            .createBucketConfiguration(
                CreateBucketConfiguration.builder()
                    .locationConstraint(Region.AP_NORTHEAST_1.id())
                    .build())
            .build();
    s3Client.createBucket(createBucketRequest);
  }

  /** Moves a file between buckets */
  public void moveObjectBetweenBuckets(
      String sourceBucket, String sourceKey, String targetBucket, String targetKey) {
    copyObjectBetweenBuckets(sourceBucket, sourceKey, targetBucket, targetKey);
    deleteObject(sourceBucket, sourceKey);
  }

  /** Copies a file between buckets */
  public void copyObjectBetweenBuckets(
      String sourceBucket, String sourceKey, String destinationBucket, String destinationKey)
      throws S3Exception {
    CopyObjectRequest copyRequest =
        CopyObjectRequest.builder()
            .sourceBucket(sourceBucket)
            .sourceKey(sourceKey)
            .destinationBucket(destinationBucket)
            .destinationKey(destinationKey)
            .build();
    s3Client.copyObject(copyRequest);
  }

  /** Copies a file within a bucket */
  public void copyObject(String sourceBucket, String sourceKey, String destinationKey)
      throws S3Exception {
    CopyObjectRequest copyRequest =
        CopyObjectRequest.builder()
            .sourceBucket(sourceBucket)
            .sourceKey(sourceKey)
            .destinationBucket(sourceBucket)
            .destinationKey(destinationKey)
            .build();
    s3Client.copyObject(copyRequest);
  }

  /** Uploads a file to S3 from a String */
  public void putObject(String bucketName, String s3key, String content) {
    PutObjectRequest putObjectRequest =
        PutObjectRequest.builder().bucket(bucketName).key(s3key).build();
    s3Client.putObject(putObjectRequest, RequestBody.fromString(content));
  }

  /** Uploads a File to S3 */
  public void putFileObject(String bucketName, File file) {
    PutObjectRequest putObjectRequest =
        PutObjectRequest.builder().bucket(bucketName).key(file.getName()).build();
    s3Client.putObject(putObjectRequest, RequestBody.fromFile(file));
  }

  /** Uploads a File object to S3 under the given key */
  public void putFileObjectWithKey(String bucketName, String key, File file) {
    PutObjectRequest putObjectRequest =
        PutObjectRequest.builder().bucket(bucketName).key(key).build();
    s3Client.putObject(putObjectRequest, RequestBody.fromFile(file));
  }

  /** Downloads a file from S3 to the local machine */
  public void downloadObject(String bucketName, String s3key, String localFilePath)
      throws IOException {
    GetObjectRequest getObjectRequest =
        GetObjectRequest.builder().bucket(bucketName).key(s3key).build();
    try (ResponseInputStream<GetObjectResponse> s3InputStream =
        s3Client.getObject(getObjectRequest)) {
      Files.copy(s3InputStream, Path.of(localFilePath), StandardCopyOption.REPLACE_EXISTING);
    }
  }

  /** Renames a file */
  public void renameObject(String bucketName, String oldKey, String newKey) {
    copyObject(bucketName, oldKey, newKey);
    deleteObject(bucketName, oldKey);
  }

  /** Checks whether a file exists on S3 */
  public String doesFileExistInS3(String bucketName, String key) {
    try {
      s3Client.headObject(HeadObjectRequest.builder().bucket(bucketName).key(key).build());
      return "exists";
    } catch (NoSuchKeyException e) {
      return "not exists";
    }
  }

  /** Deletes a file */
  public void deleteObject(String bucketName, String s3key) throws S3Exception {
    DeleteObjectRequest deleteObjectRequest =
        DeleteObjectRequest.builder().bucket(bucketName).key(s3key).build();
    s3Client.deleteObject(deleteObjectRequest);
  }
}
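The local client above calls `forcePathStyle(true)`. As background, S3 requests can address a bucket in two ways: path style keeps the bucket name in the URL path, while virtual-hosted style moves it into the host name. A local MinIO endpoint usually has no DNS entry for a bucket-prefixed host name, which is a common reason to use path style locally. The sketch below only builds the two URL shapes with plain string handling to make the difference visible. It is not how the SDK constructs requests, the class name is hypothetical, and `localhost` is used purely as an illustrative endpoint.

```java
import java.net.URI;

public class S3AddressingStyles {

    /** Path style: the bucket is the first path segment, e.g. http://host:port/bucket/key */
    public static String pathStyle(URI endpoint, String bucket, String key) {
        return endpoint.getScheme() + "://" + hostAndPort(endpoint) + "/" + bucket + "/" + key;
    }

    /** Virtual-hosted style: the bucket is a host name prefix, e.g. http://bucket.host:port/key */
    public static String virtualHostedStyle(URI endpoint, String bucket, String key) {
        return endpoint.getScheme() + "://" + bucket + "." + hostAndPort(endpoint) + "/" + key;
    }

    private static String hostAndPort(URI endpoint) {
        // getPort() returns -1 when the URI has no explicit port
        return endpoint.getPort() == -1
                ? endpoint.getHost()
                : endpoint.getHost() + ":" + endpoint.getPort();
    }

    public static void main(String[] args) {
        URI endpoint = URI.create("http://localhost:9000");
        System.out.println(pathStyle(endpoint, "csv", "example.csv"));
        System.out.println(virtualHostedStyle(endpoint, "csv", "example.csv"));
    }
}
```

With path style, every bucket is reachable through the single host and port that the compose file publishes.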

Execution Result

----- Start -----
Files in bucket csv:
 - example.csv

Reading line by line as a stream. File name: example.csv
name,age,job
tanaka,30,engineer
suzuki,25,designer
satou,40,manager

Reading all at once. File name: example.csv
name,age,job
tanaka,30,engineer
suzuki,25,designer
satou,40,manager

File copy succeeded: csv/example.csv -> csv/example_copy.csv

Bucket creation succeeded: tmp-bucket: true
File copy completed: csv/example_copy.csv → tmp-bucket/example_copy.csv
Source file deletion completed: example_copy.csv

Move succeeded: tmp-bucket/example_copy.csv: exists

File upload succeeded (String): tmp-bucket/string.txt

File upload succeeded (File): tmp-bucket/file.txt

File rename succeeded: renamed.txt: exists

File download succeeded: download.csv: true

Backup succeeded: exists

File deletion succeeded: renamed.txt
----- End -----
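The backup step in the run above stores the file under a key that starts with the current date. S3 has no real directories, so the "directory" is simply a prefix inside the object key. The sketch below isolates that key construction so it can be checked with a fixed date. It is a variation rather than the article's exact code: the class name is hypothetical, and it omits the leading slash used in the listing, because in S3 a leading slash becomes part of the key itself.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;

public class BackupKeys {

    // '/' is not a pattern letter, so it is emitted literally between the date fields.
    private static final DateTimeFormatter DATE_PREFIX = DateTimeFormatter.ofPattern("yyyy/MM/dd");

    /** Builds a key such as 2024/01/05/file.txt for the given date and file name. */
    public static String datedKey(LocalDate date, String fileName) {
        return date.format(DATE_PREFIX) + "/" + fileName;
    }

    public static void main(String[] args) {
        System.out.println(datedKey(LocalDate.now(), "file.txt"));
    }
}
```

Passing the date in as a parameter, instead of calling `now()` inside the method, keeps the key predictable in tests.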

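Source Code Walkthrough

The notable point of this code is that AWS SDK for Java 2.x works with both MinIO and AWS S3.

To connect to the local MinIO instance, the client is initialized with the getS3ClientForLocal method. To connect to AWS S3, it is initialized with the getS3ClientForAwsS3 method.

This approach lets you use the same SDK across different backend environments and operate on them through the same interface.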
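The listing hard-codes `isLocal = true` and notes that the flag is meant to come from a Spring Boot profile or a startup argument. As one possible shape for the startup-argument case, the sketch below decides between the two clients from a command-line flag, with an environment variable as a fallback. The flag name `--s3-target`, the variable name `S3_TARGET`, and the class name are all hypothetical. Defaulting to local mirrors the listing, but it is a design choice you may want to reverse for production builds.

```java
import java.util.Map;

public class S3TargetResolver {

    private static final String FLAG_PREFIX = "--s3-target=";

    /**
     * Returns true when the application should connect to the local MinIO.
     * Precedence: command-line flag, then environment variable, then the default (local).
     */
    public static boolean isLocal(String[] args, Map<String, String> env) {
        for (String arg : args) {
            if (arg.startsWith(FLAG_PREFIX)) {
                return !"aws".equalsIgnoreCase(arg.substring(FLAG_PREFIX.length()));
            }
        }
        String fromEnv = env.get("S3_TARGET");
        if (fromEnv != null) {
            return !"aws".equalsIgnoreCase(fromEnv);
        }
        return true; // same default as the isLocal field in the listing
    }

    public static void main(String[] args) {
        boolean local = isLocal(args, System.getenv());
        System.out.println(local ? "Connecting to local MinIO" : "Connecting to AWS S3");
    }
}
```

The result could then drive the same `if (isLocal)` branch that chooses between getS3ClientForLocal and getS3ClientForAwsS3.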

Being able to test an application easily before deploying it to the real AWS environment, at no extra cost, is a real advantage.

I hope this guide is of some help.

Thank you for reading to the end. 🙇

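[1] Post by a Common Services Development Group member: "Adopting Domain-Driven Design (DDD) in a Payment Platform Built with Global Expansion in Mind"

[2] Post by a Common Services Development Group member: "How a Team Made Up Entirely of Members with Less Than One Year at the Company Succeeded in Developing a New System Through Remote Mob Programming"

[3] Post by a Common Services Development Group member: "Improving Deployment Traceability Across Multiple Environments with JIRA and GitHub Actions"

[4] Post by a Common Services Development Group member: "Building a Development Environment with VSCode Dev Container"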
