Getting Started with Bedrock Knowledge Bases Using Terraform

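Introduction

Hello 🎄 I'm Shimakawa from the Cloud Infrastructure Group. Our group handles everything from design to operation of company-wide infrastructure, centered on AWS. Generative AI is being adopted across many of our products, and the Cloud Infrastructure Group provides various kinds of support for that work.

This article shares what I learned while building an Amazon Bedrock Knowledge Base with Terraform. It also touches on the RAG evaluation feature announced at re:Invent 2024.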

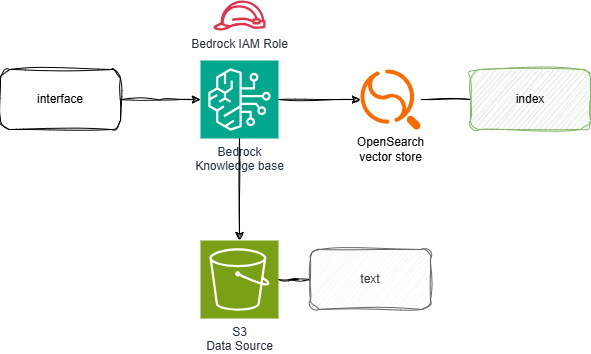

Architecture

This is the architecture we will build.

OpenSearch Serverless serves as the vector store for the Bedrock Knowledge Base, and S3 is the data source.

Building with Terraform

The directory structure is shown below. Each file is explained in turn.

The Terraform version used here is 1.7.5.

$ tree
.
├── aoss.tf       # OpenSearch Serverless
├── bedrock.tf    # Bedrock resources
├── iam.tf        # IAM
├── s3.tf         # S3 bucket for Bedrock
├── locals.tf     # Variable definitions
├── provider.tf   # Provider definitions
└── terraform.tf  # Backend and other settings

locals.tf defines the variables.

locals {
  env = {
    environment = "dev"
    region_name = "us-west-2"
    sid         = "test"
  }

  aoss = {
    vector_index     = "vector_index"
    vector_field     = "vector_field"
    text_field       = "text_field"
    metadata_field   = "metadata_field"
    vector_dimension = 1024
  }
}

terraform.tf pins the versions of the AWS and OpenSearch providers and configures the S3 backend that stores the tfstate. The S3 bucket used here was created manually beforehand, so it is not part of this Terraform code.

terraform {
  required_providers {
    # https://registry.terraform.io/providers/hashicorp/aws/
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
    opensearch = {
      source  = "opensearch-project/opensearch"
      version = "2.2.0"
    }
  }

  backend "s3" {
    bucket  = "***-common-bucket"
    region  = "ap-northeast-1"
    key     = "hogehoge-terraform.tfstate"
    encrypt = true
  }
}

provider.tf defines the AWS and OpenSearch providers. The OpenSearch provider is used to create the index.

provider "aws" {
  region = local.env.region_name

  default_tags {
    tags = {
      SID         = local.env.sid
      Environment = local.env.environment
    }
  }
}

provider "opensearch" {
  url         = aws_opensearchserverless_collection.collection.collection_endpoint
  aws_region  = local.env.region_name
  healthcheck = false
}

aoss.tf creates the OpenSearch Serverless resources and the index.

I used "Deploy Amazon OpenSearch Serverless with Terraform" as a reference.

The network policy here allows public access, but ideally access should be restricted with a VPC endpoint.

data "aws_caller_identity" "current" {}

# Creates a collection
resource "aws_opensearchserverless_collection" "collection" {
  name             = "${local.env.sid}-collection"
  type             = "VECTORSEARCH"
  standby_replicas = "DISABLED"

  depends_on = [aws_opensearchserverless_security_policy.encryption_policy]
}

# Creates an encryption security policy
resource "aws_opensearchserverless_security_policy" "encryption_policy" {
  name        = "${local.env.sid}-encryption-policy"
  type        = "encryption"
  description = "encryption policy for ${local.env.sid}-collection"
  policy = jsonencode({
    Rules = [
      {
        Resource = [
          "collection/${local.env.sid}-collection"
        ],
        ResourceType = "collection"
      }
    ],
    AWSOwnedKey = true
  })
}

# Creates a network security policy
resource "aws_opensearchserverless_security_policy" "network_policy" {
  name        = "${local.env.sid}-network-policy"
  type        = "network"
  description = "public access for dashboard, VPC access for collection endpoint"
  policy = jsonencode([
    ### Reference for when using a VPC endpoint
    # {
    #   Description = "VPC access for collection endpoint",
    #   Rules = [
    #     {
    #       ResourceType = "collection",
    #       Resource = [
    #         "collection/${local.env.sid}-collection"
    #       ]
    #     }
    #   ],
    #   AllowFromPublic = false,
    #   SourceVPCEs = [
    #     aws_opensearchserverless_vpc_endpoint.vpc_endpoint.id
    #   ]
    # },
    {
      Description = "Public access for dashboards and collection",
      Rules = [
        {
          ResourceType = "collection",
          Resource = [
            "collection/${local.env.sid}-collection"
          ]
        },
        {
          ResourceType = "dashboard"
          Resource = [
            "collection/${local.env.sid}-collection"
          ]
        }
      ],
      AllowFromPublic = true
    }
  ])
}

# Creates a data access policy
resource "aws_opensearchserverless_access_policy" "data_access_policy" {
  name        = "${local.env.sid}-data-access-policy"
  type        = "data"
  description = "allow index and collection access"
  policy = jsonencode([
    {
      Rules = [
        {
          ResourceType = "index",
          Resource = [
            "index/${local.env.sid}-collection/*"
          ],
          Permission = [
            "aoss:*"
          ]
        },
        {
          ResourceType = "collection",
          Resource = [
            "collection/${local.env.sid}-collection"
          ],
          Permission = [
            "aoss:*"
          ]
        }
      ],
      Principal = [
        data.aws_caller_identity.current.arn,
        aws_iam_role.bedrock.arn,
      ]
    }
  ])
}

resource "opensearch_index" "vector_index" {
  name = local.aoss.vector_index
  mappings = jsonencode({
    properties = {
      "${local.aoss.metadata_field}" = {
        type  = "text"
        index = false
      }
      "${local.aoss.text_field}" = {
        type  = "text"
        index = true
      }
      "${local.aoss.vector_field}" = {
        type      = "knn_vector"
        dimension = local.aoss.vector_dimension
        method = {
          engine = "faiss"
          name   = "hnsw"
        }
      }
    }
  })

  depends_on = [aws_opensearchserverless_collection.collection]
}
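The commented-out rule in the network policy refers to aws_opensearchserverless_vpc_endpoint.vpc_endpoint, which is not defined anywhere in this article. As a minimal sketch of what that resource could look like, assuming you already have a VPC: the three variables below are hypothetical inputs of my own, not part of the original code.

```hcl
# Hypothetical inputs: replace with your own VPC, subnets, and security groups.
variable "vpc_id" {
  type = string
}

variable "subnet_ids" {
  type = list(string)
}

variable "security_group_ids" {
  type = list(string)
}

# VPC endpoint that the network policy can list under SourceVPCEs.
resource "aws_opensearchserverless_vpc_endpoint" "vpc_endpoint" {
  name               = "${local.env.sid}-vpce"
  vpc_id             = var.vpc_id
  subnet_ids         = var.subnet_ids
  security_group_ids = var.security_group_ids
}
```

Note that once public access is turned off, the machine running Terraform also needs network access to the collection endpoint, because the OpenSearch provider creates the index over that endpoint.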

bedrock.tf creates the Knowledge Base and its data source.

data "aws_bedrock_foundation_model" "embedding" {
  model_id = "amazon.titan-embed-text-v2:0"
}

resource "aws_bedrockagent_knowledge_base" "this" {
  name     = "test-kb"
  role_arn = aws_iam_role.bedrock.arn

  knowledge_base_configuration {
    type = "VECTOR"
    vector_knowledge_base_configuration {
      embedding_model_arn = data.aws_bedrock_foundation_model.embedding.model_arn
    }
  }

  storage_configuration {
    type = "OPENSEARCH_SERVERLESS"
    opensearch_serverless_configuration {
      collection_arn    = aws_opensearchserverless_collection.collection.arn
      vector_index_name = local.aoss.vector_index
      field_mapping {
        vector_field   = local.aoss.vector_field
        text_field     = local.aoss.text_field
        metadata_field = local.aoss.metadata_field
      }
    }
  }

  # The vector index must exist before the Knowledge Base is created.
  depends_on = [aws_iam_role.bedrock, opensearch_index.vector_index]
}

resource "aws_bedrockagent_data_source" "this" {
  knowledge_base_id = aws_bedrockagent_knowledge_base.this.id
  name              = "test-s3-001"

  data_source_configuration {
    type = "S3"
    s3_configuration {
      bucket_arn = "arn:aws:s3:::****-dev-test-***" ### bucket name masked
    }
  }

  depends_on = [aws_bedrockagent_knowledge_base.this]
}

iam.tf defines the service role that Bedrock uses.

resource "aws_iam_role" "bedrock" {
  name                = "bedrock-role"
  managed_policy_arns = [aws_iam_policy.bedrock.arn]
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          Service = "bedrock.amazonaws.com"
        }
      },
    ]
  })
}

resource "aws_iam_policy" "bedrock" {
  name = "bedrock-policy"
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action   = ["bedrock:InvokeModel"]
        Effect   = "Allow"
        Resource = "*"
      },
      {
        Action = [
          "s3:GetObject",
          "s3:ListBucket",
        ]
        Effect   = "Allow"
        Resource = "***-dev-test-***" ### ARN of the S3 bucket created for Bedrock (masked)
      },
      {
        Action = [
          "aoss:APIAccessAll",
        ]
        Effect   = "Allow"
        Resource = "arn:aws:aoss:us-west-2:12345678910:collection/*"
      },
    ]
  })
}
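The policy above hard-codes masked ARNs. One alternative is to derive them from the resources already defined in this configuration. The following is a minimal sketch rather than the code I actually applied: the data source name and the statement sids are my own choices, and it assumes the bucket, collection, and embedding model are declared as shown in this article. Note that s3:ListBucket applies to the bucket ARN, while s3:GetObject applies to the object ARNs under it.

```hcl
# Sketch: build the Bedrock service-role policy from resource references.
data "aws_iam_policy_document" "bedrock" {
  statement {
    sid       = "InvokeEmbeddingModel"
    effect    = "Allow"
    actions   = ["bedrock:InvokeModel"]
    resources = [data.aws_bedrock_foundation_model.embedding.model_arn]
  }

  statement {
    sid       = "ListSourceBucket"
    effect    = "Allow"
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.bedrock.arn]
  }

  statement {
    sid       = "ReadSourceObjects"
    effect    = "Allow"
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.bedrock.arn}/*"]
  }

  statement {
    sid       = "AccessVectorCollection"
    effect    = "Allow"
    actions   = ["aoss:APIAccessAll"]
    resources = [aws_opensearchserverless_collection.collection.arn]
  }
}
```

To use it, the policy argument of aws_iam_policy.bedrock would be set to data.aws_iam_policy_document.bedrock.json instead of the jsonencode call.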

s3.tf creates the S3 bucket used by Bedrock. It also configures CORS, to avoid an error I ran into without it.

resource "aws_s3_bucket" "bedrock" {
  bucket = "***-dev-test-***" ### masked
}

resource "aws_s3_bucket_cors_configuration" "this" {
  bucket = aws_s3_bucket.bedrock.id

  cors_rule {
    allowed_headers = ["*"]
    allowed_methods = [
      "GET",
      "PUT",
      "POST",
      "DELETE"
    ]
    allowed_origins = ["*"]
  }
}

Running it

Create everything at once with terraform apply.

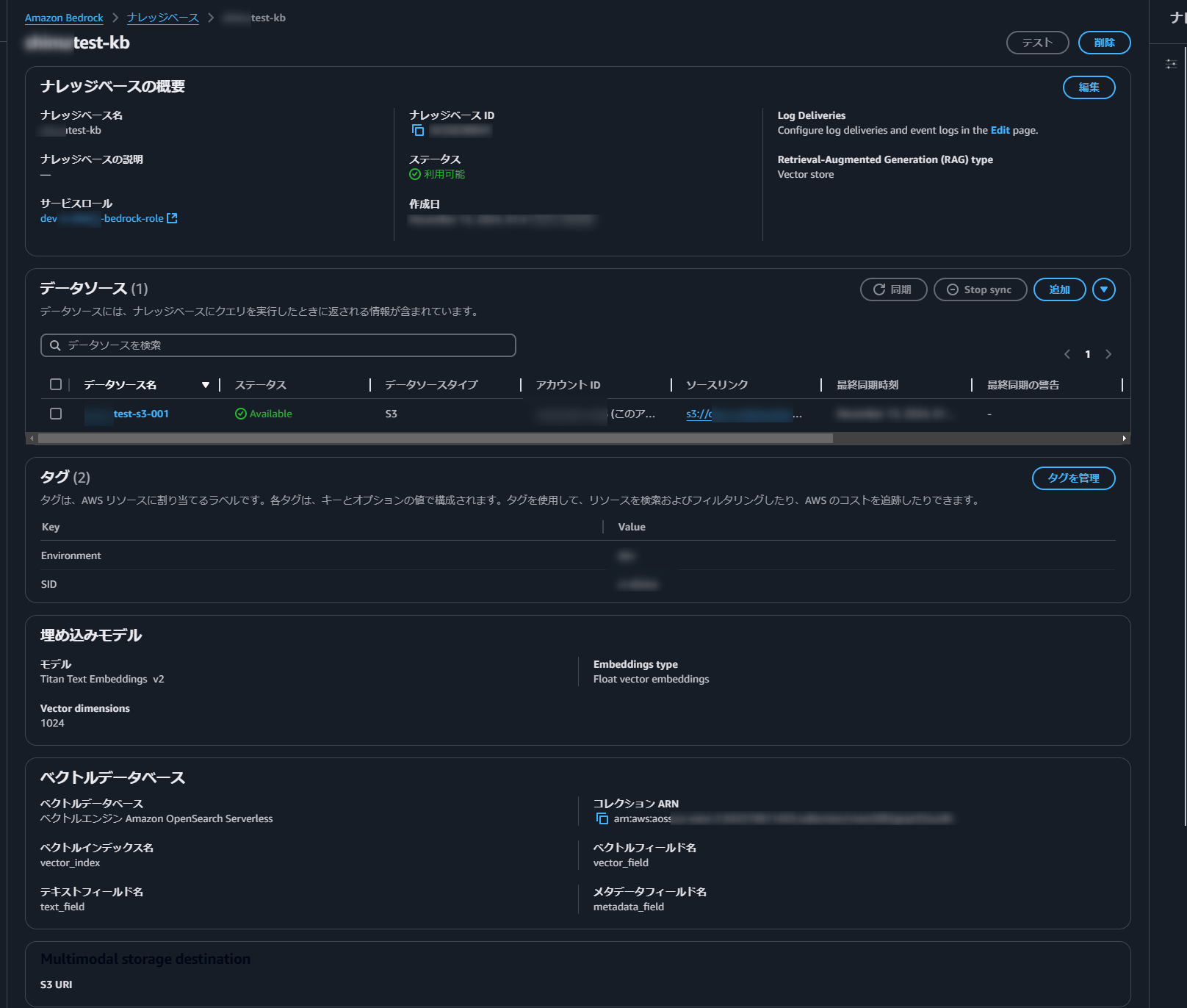
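The later steps (syncing the data source, running the evaluation) need the IDs of what was just created. A small sketch of outputs that would print them after apply; the file name outputs.tf and the output names are hypothetical and not part of the original directory layout.

```hcl
# outputs.tf (hypothetical file): expose the IDs needed for later CLI calls.
output "knowledge_base_id" {
  value = aws_bedrockagent_knowledge_base.this.id
}

output "data_source_id" {
  value = aws_bedrockagent_data_source.this.data_source_id
}

output "collection_endpoint" {
  value = aws_opensearchserverless_collection.collection.collection_endpoint
}
```

After apply, terraform output knowledge_base_id prints the value for use in scripts.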

Checking the created resources

Confirm in Bedrock that the Knowledge Base has been created and that it has a data source.

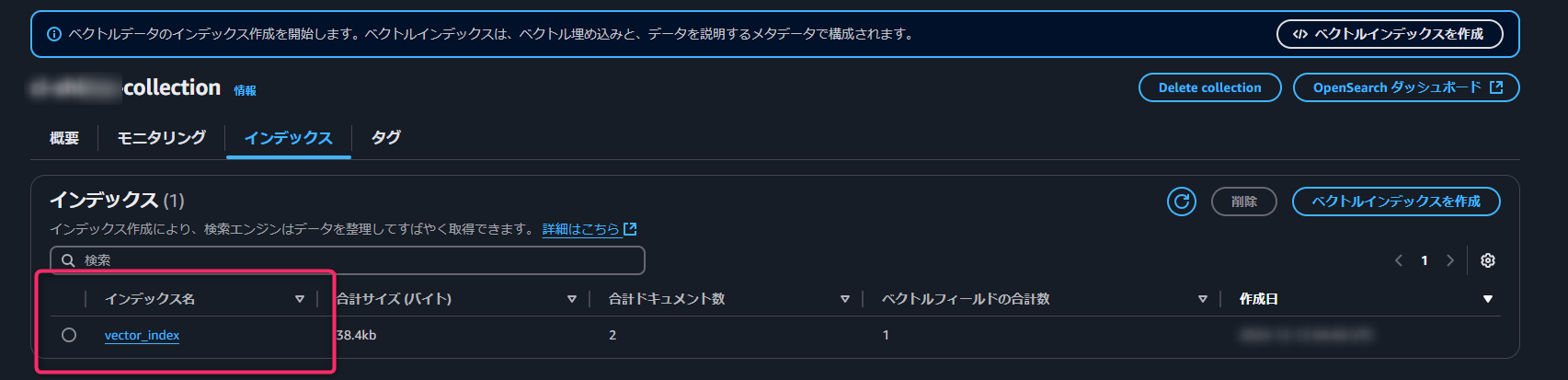

Next, confirm that the OpenSearch collection has been created and that the index is configured.

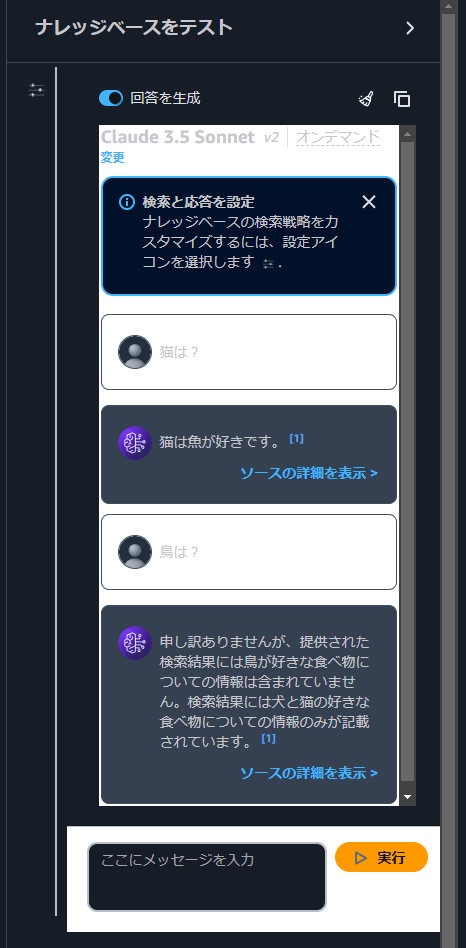

Testing the Knowledge Base

Upload some sample text to S3 to use as the data source.

Dogs like meat
Cats like fish

aws s3 cp ./test001.txt s3://[S3 Bucket Name]/test001.txt
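I uploaded the file with the CLI, but the same object could also be managed by Terraform. A minimal sketch, assuming the bucket resource aws_s3_bucket.bedrock from s3.tf; the resource name test_doc is my own.

```hcl
# Sketch: manage the sample document as a Terraform resource
# instead of copying it with the AWS CLI.
resource "aws_s3_object" "test_doc" {
  bucket       = aws_s3_bucket.bedrock.id
  key          = "test001.txt"
  content_type = "text/plain; charset=utf-8"
  content      = <<-EOT
    Dogs like meat
    Cats like fish
  EOT
}
```

The trade-off is that document contents then live in the tfstate, so this suits small fixtures like this one better than a real corpus.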

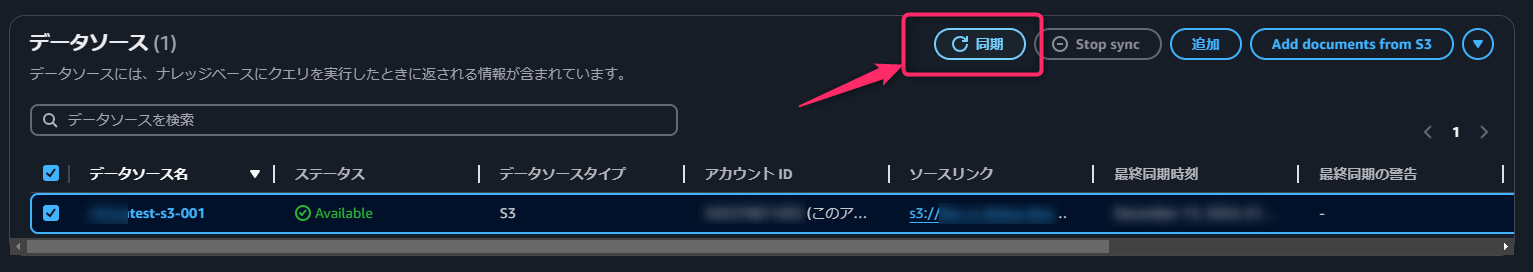

Next, sync the data source.
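I ran the sync by hand. If you want Terraform to start it, one option is to call the AWS CLI from a terraform_data resource. This is only a sketch under assumptions: the variable sync_token is a hypothetical knob I made up to re-trigger the sync, the AWS CLI must be installed where Terraform runs, and the command only starts the ingestion job without waiting for it to finish.

```hcl
variable "sync_token" {
  description = "Hypothetical knob: change this value to trigger another sync."
  type        = string
  default     = "1"
}

# Starts an ingestion job (data source sync) whenever sync_token changes.
resource "terraform_data" "sync_data_source" {
  triggers_replace = [var.sync_token]

  provisioner "local-exec" {
    command = join(" ", [
      "aws bedrock-agent start-ingestion-job",
      "--region ${local.env.region_name}",
      "--knowledge-base-id ${aws_bedrockagent_knowledge_base.this.id}",
      "--data-source-id ${aws_bedrockagent_data_source.this.data_source_id}",
    ])
  }
}
```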

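Now let's actually ask a question. (Claude 3.5 Sonnet is the model used to answer the prompt.)

It answers from what is written in the text and declines to answer about things that are not in it.

That is a quick walkthrough of building a Knowledge Base and OpenSearch Serverless with Terraform.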

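Trying out RAG evaluation

Next, let's try the RAG evaluation announced at re:Invent 2024 against the Knowledge Base created above.

Preparation

Prepare a dataset file (JSONL) for the evaluation. Each line contains a prompt and the answer expected for it.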

{"conversationTurns":[{"referenceResponses":[{"content":[{"text":"Cats' favorite food is fish"}]}],"prompt":{"content":[{"text":"What do cats like?"}]}}]}
{"conversationTurns":[{"referenceResponses":[{"content":[{"text":"Dogs' favorite food is meat"}]}],"prompt":{"content":[{"text":"What do dogs like?"}]}}]}

The evaluation job reads the dataset from S3, so copy the file to S3.

aws s3 cp ./dataset001.txt s3://[S3 Bucket Name]/datasets/dataset001.txt
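Writing JSONL by hand gets error-prone as the number of questions grows. As a sketch of an alternative, the dataset could be generated and uploaded with Terraform. The local eval_dataset and the resource name are hypothetical, and jsonencode emits keys in sorted order, which does not change the meaning of the JSON.

```hcl
locals {
  # Hypothetical local holding the prompt / expected-answer pairs.
  eval_dataset = [
    { prompt = "What do cats like?", reference = "Cats' favorite food is fish" },
    { prompt = "What do dogs like?", reference = "Dogs' favorite food is meat" },
  ]
}

# One JSON object per line, in the conversationTurns shape shown above.
resource "aws_s3_object" "eval_dataset" {
  bucket = aws_s3_bucket.bedrock.id
  key    = "datasets/dataset001.txt"
  content = join("\n", [
    for row in local.eval_dataset : jsonencode({
      conversationTurns = [{
        referenceResponses = [{ content = [{ text = row.reference }] }]
        prompt             = { content = [{ text = row.prompt }] }
      }]
    })
  ])
}
```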

Creating the job

Next, create the job.

The Management Console works too, but I used the CLI this time.

aws bedrock create-evaluation-job \
  --job-name "rag-evaluation-complete-stereotype-docs-app" \
  --job-description "Evaluates Completeness and Stereotyping of RAG for docs application" \
  --role-arn "arn:aws:iam::<account-id>:role/AmazonBedrock-KnowledgeBases" \
  --application-type "RagEvaluation" \
  --evaluation-config file://knowledge-base-evaluation-config.json \
  --inference-config file://knowledge-base-evaluation-inference-config.json \
  --output-data-config '{"s3Uri":"s3://docs/kbevalresults/"}'

knowledge-base-evaluation-config.json must specify the location of the JSONL dataset file. The location where results are saved is given with --output-data-config.

Checking the job

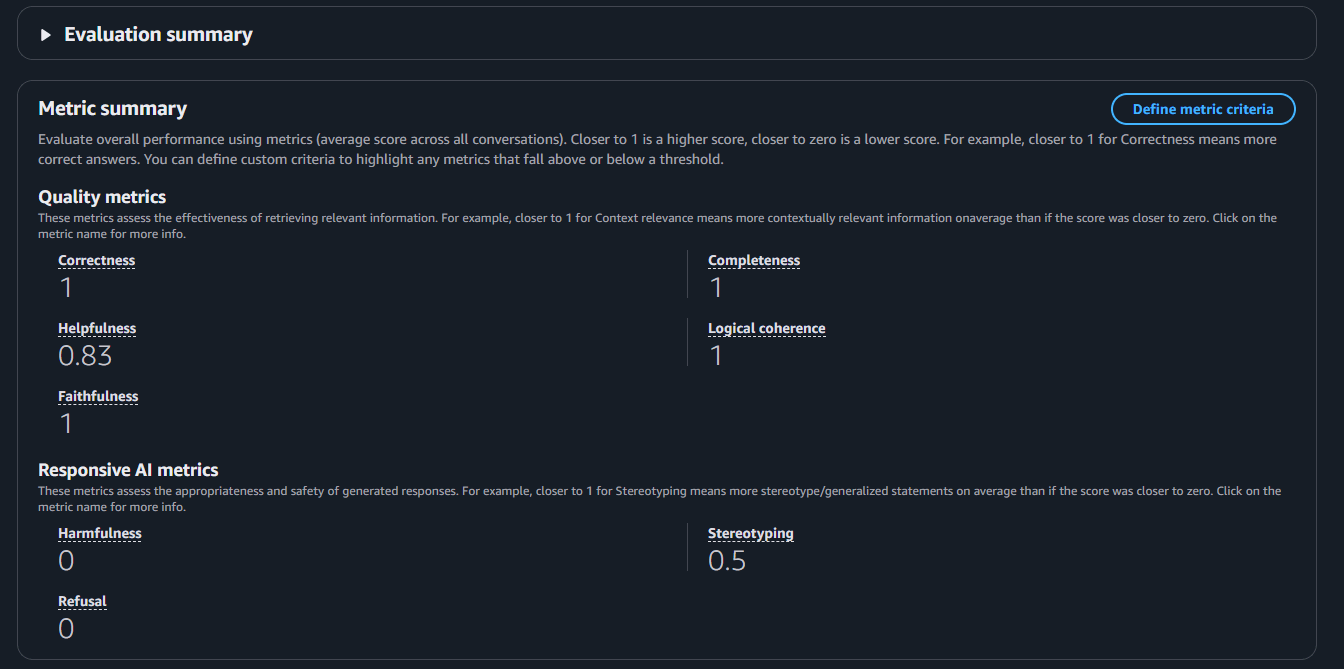

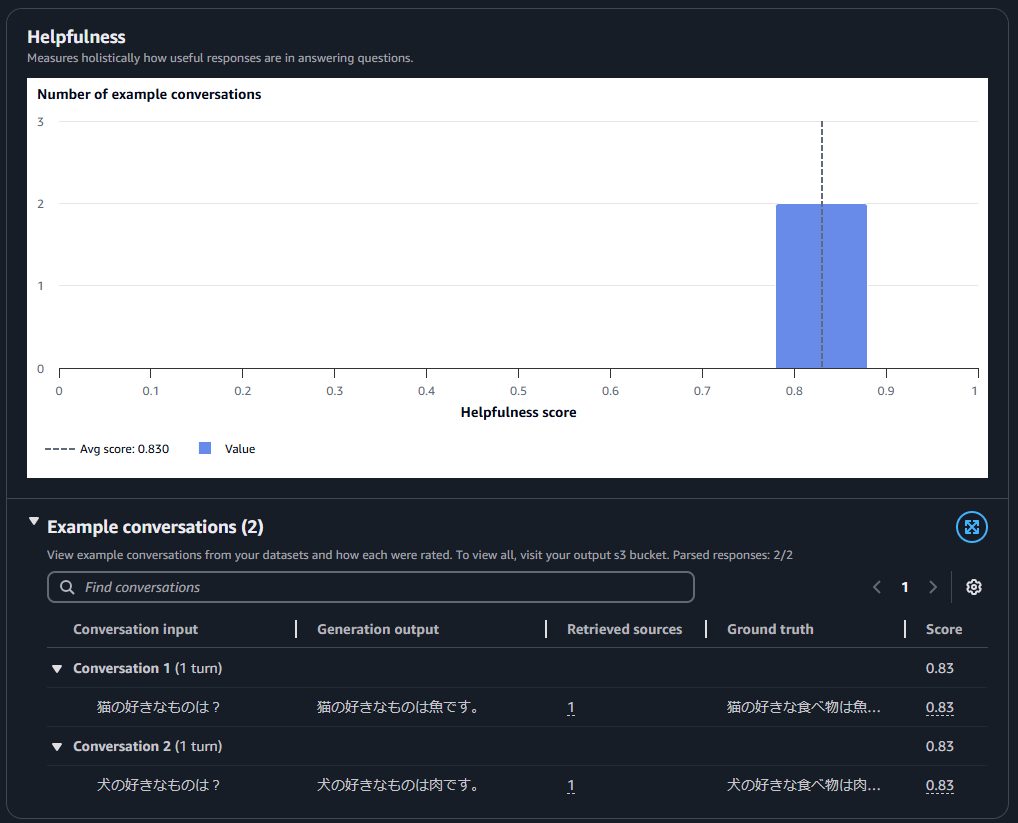

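The job finished after about 15 to 20 minutes, so let's look at the results.

Start with the summary. The answers are written almost verbatim in the source text, so the result is not very exciting, but Correctness and Completeness are both 1, which shows that it behaves as expected.

Only Helpfulness is lower, at 0.83. The evaluator's comment says:

The answer is not particularly interesting or unexpected, but it does not need to be in this context.

I suspect this remark is the reason the score came out lower.

Conclusion

Within our company, the use of generative AI, including Bedrock, is growing, and real use cases are increasing. I want to keep trying out new features so that we are ready to meet project requirements. I hope this blog post is of some help.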
