Rebuilding the Broker Architecture

Hello, I'm Xu Huang from the KINTO ID Platform team. For the past few years now, we have been providing user authentication and authorization systems (known internally as UserPool) across multiple countries. To support this, we adopted a broker model that connects Userpools across multiple regions, building and operating an architecture that allows them to share authentication and authorization information with each other. Last year, as part of our cost optimization efforts, we redesigned and migrated this architecture. In this post, I’ll walk you through what changed and why.

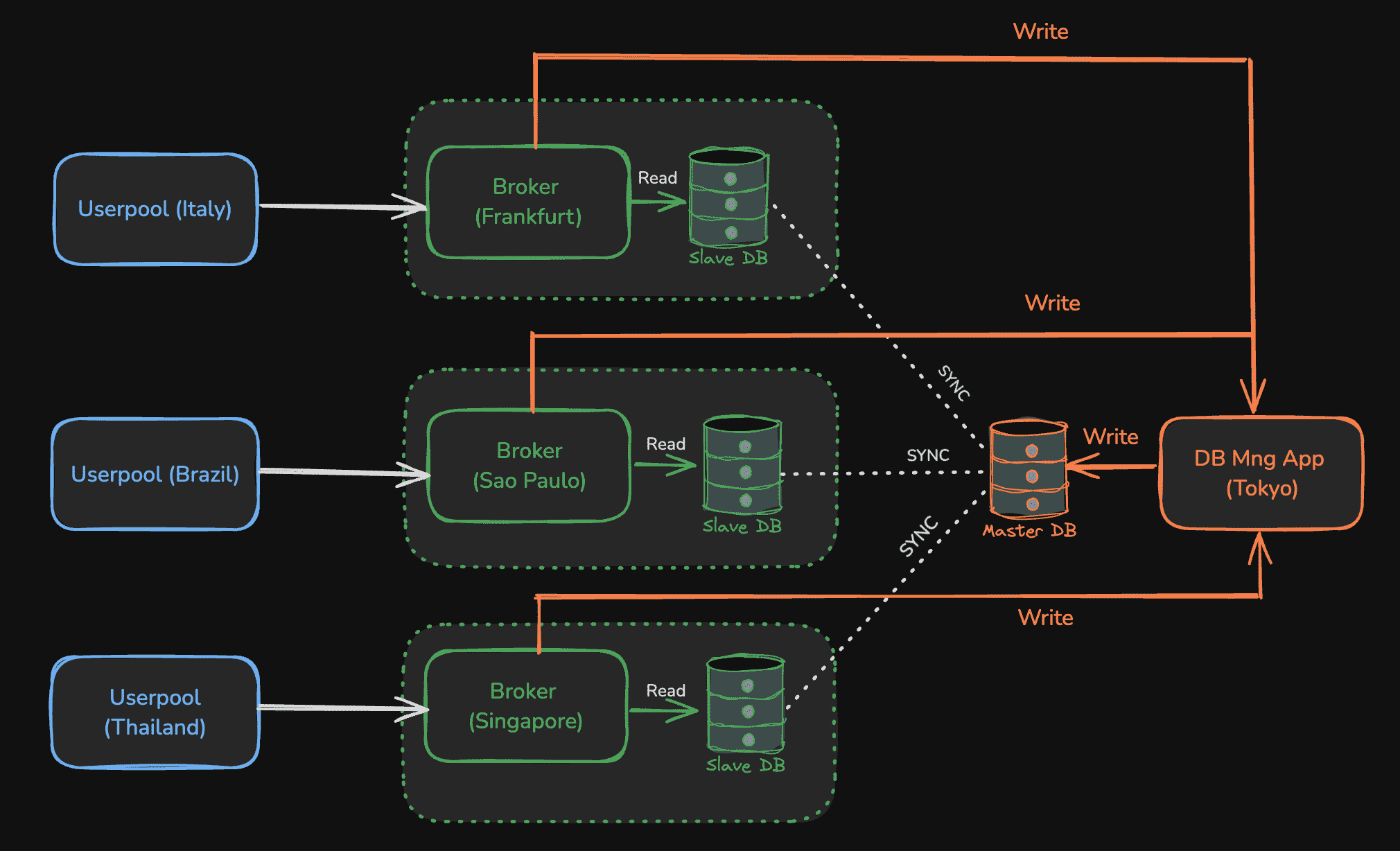

In our initial deployment, we used AWS Aurora Global Database (referred to as Global DB) as part of our global deployment strategy. To minimize access load and latency, we deployed Slave DBs in close proximity to each UserPool and placed Broker servers in the same regions as the corresponding Slave DBs.

(Due to Global DB limitations, only one Master DB is allowed, and it supports up to five Slave DBs.)

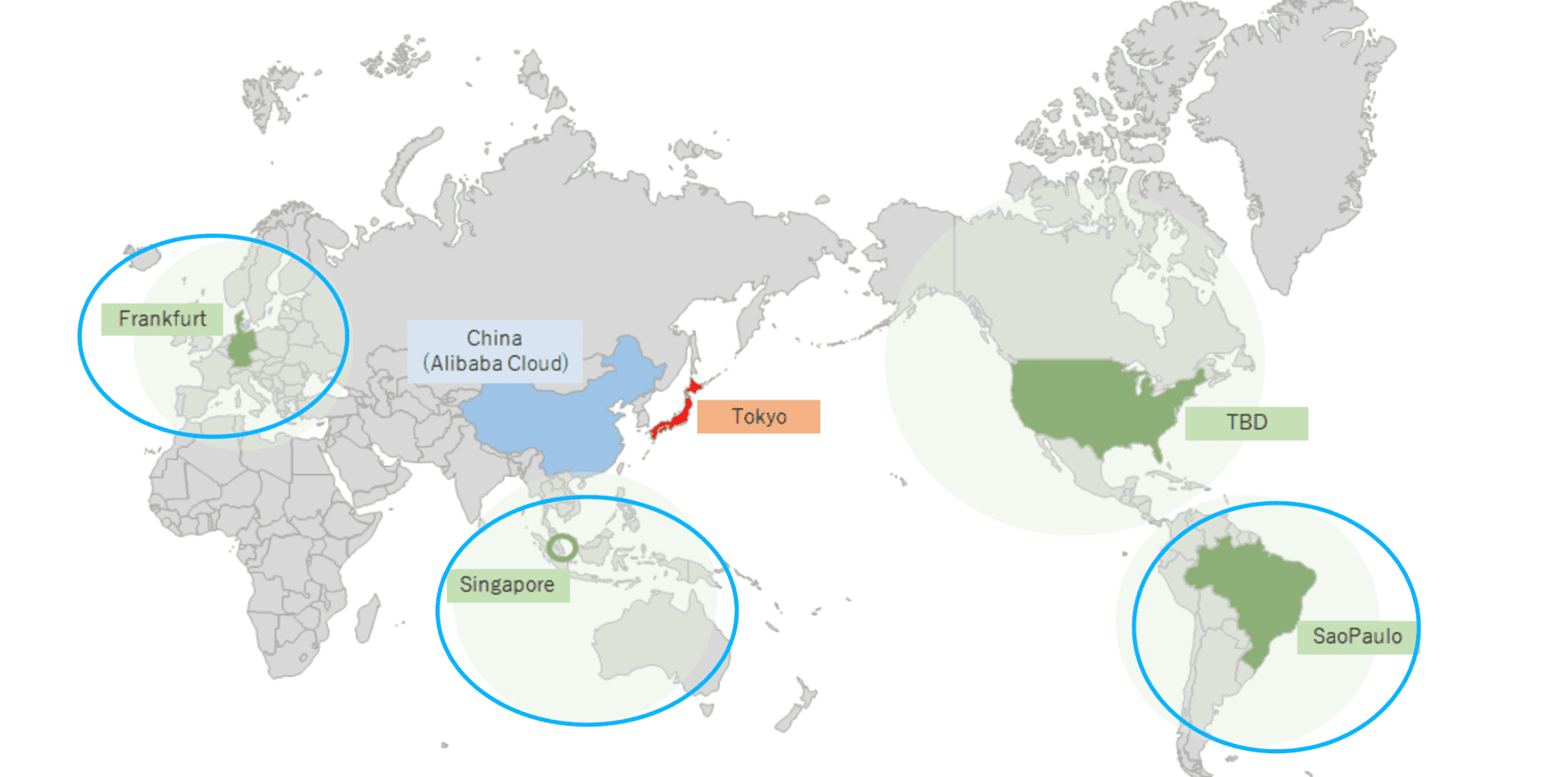

As shown in the diagram above, we gradually rolled out the UserPool service to the regions outlined in blue. To enable centralized user management, we designed the system so that unique IDs would be issued from a central aggregation point and synchronized this information to each region’s sub-DB for local management.

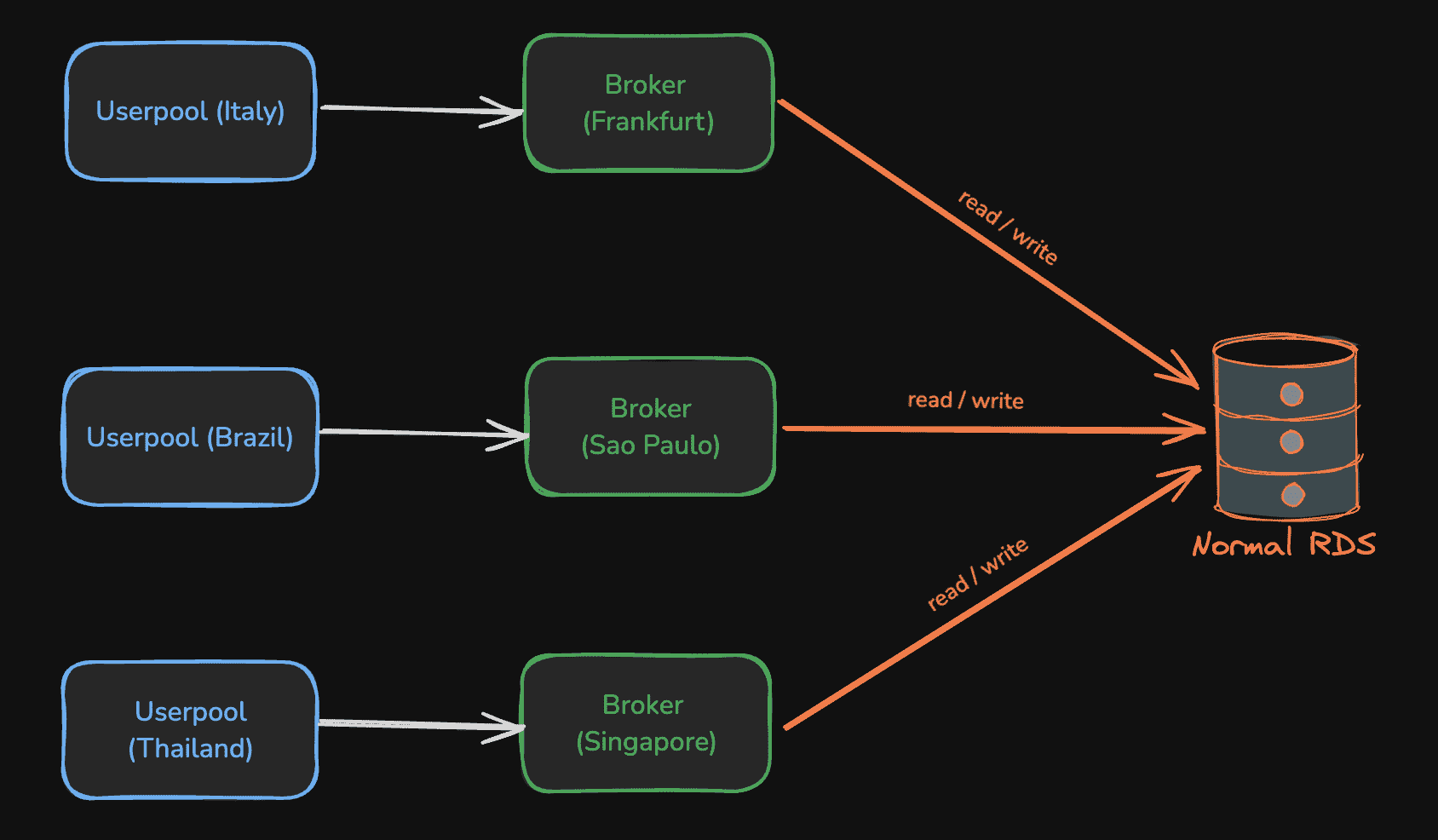

Phase 1: Migrating from Global DB to a Normal DB and Removing Write-Only Applications

The original architecture prioritized minimizing access load by deploying servers across multiple regions. However, in reality, the system had not yet reached a scale that required such complexity, leading to unnecessary operational costs. To optimize the setup, we conducted a thorough evaluation and concluded that a Global DB setup was not essential for our current usage. We then redesigned the system to allow direct R&W access from the Broker to a shared common DB.

The diagram below illustrates the updated architecture:

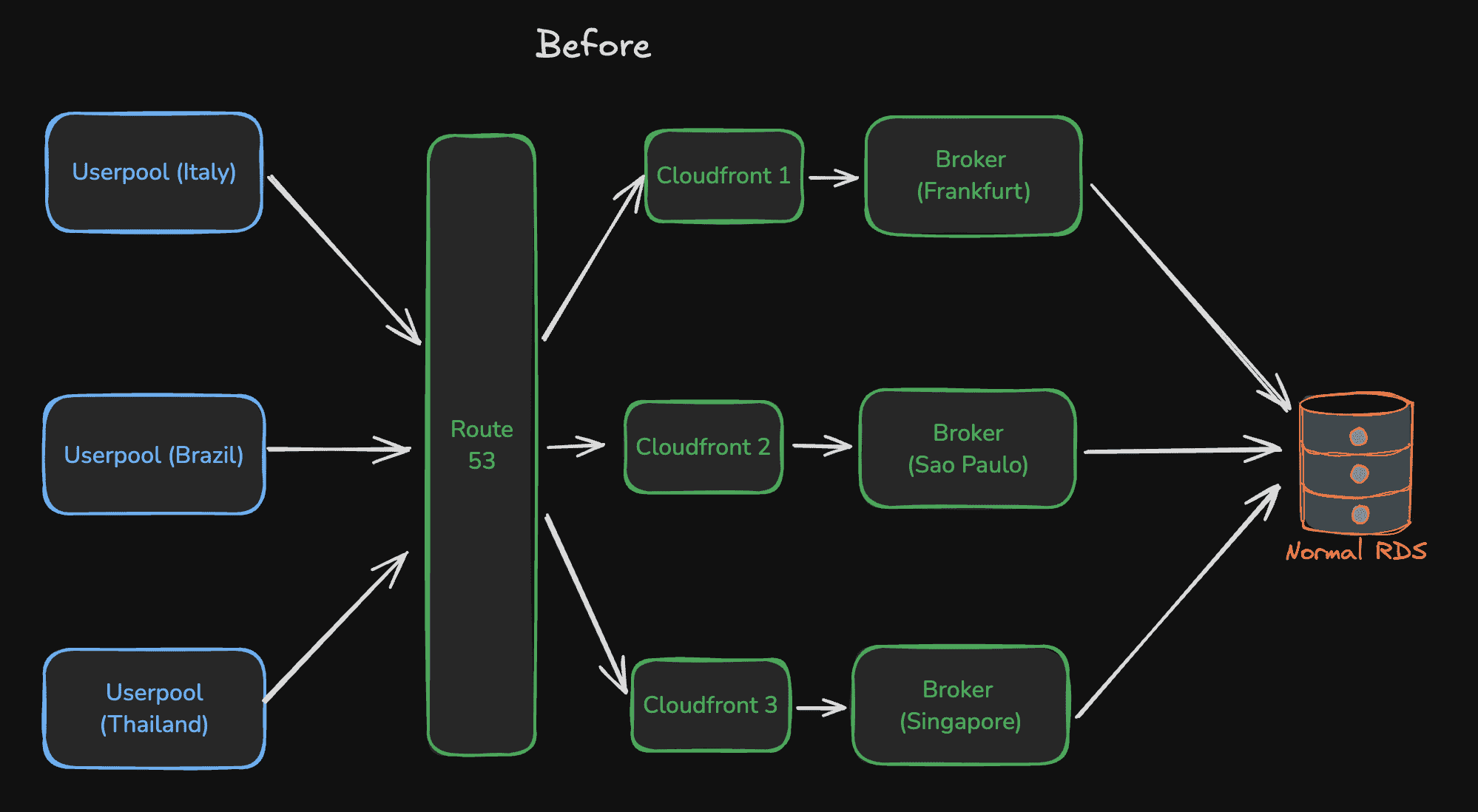

Phase 2: Consolidating the Broker

While Phase 1 significantly delivered cost savings, we looked for further optimization opportunities. This led us to consider whether we could consolidate the system into a single Broker instance. However, there was one challenge: as an identity provider, we also offer redirect URLs to external third party services. If those URLs were to change, it would require the third parties to update their configurations as well. So we started thinking about how we might migrate without changing the domain. With support from our infrastructure team, we realized that by updating DNS settings in Route53 and routing traffic through CloudFront to the new unified server, we could avoid changing the domain altogether and transition to a unified Broker.

When implementing the design as shown above, we were concerned about the impact on latency caused by the increased physical distance between servers, particularly from the UserPool to the now-centralized Broker. So, we measured it.

The results showed that communication between the UserPool and the Broker became about 10% slower, but since the Broker was now located in the same region as the database, DB communication became faster. Overall, there was no significant impact in end-to-end performance before and after the architecture change, so we proceeded with planning for the Phase 2 migration.

Results

Through these two phases, we optimized the system architecture to better align with the actual usage patterns of our business operations.

Going forward, we will continue reviewing system functionality and regularly working on cost-efficiency efforts.

関連記事 | Related Posts

We are hiring!

【クラウドエンジニア】Cloud Infrastructure G/東京・大阪・福岡

KINTO Tech BlogWantedlyストーリーCloud InfrastructureグループについてAWSを主としたクラウドインフラの設計、構築、運用を主に担当しています。

【ソフトウェアエンジニア(リーダークラス)】共通サービス開発G/東京・大阪・福岡

共通サービス開発グループについてWebサービスやモバイルアプリの開発において、必要となる共通機能=会員プラットフォームや決済プラットフォームなどの企画・開発を手がけるグループです。KINTOの名前が付くサービスやKINTOに関わりのあるサービスを同一のユーザーアカウントに対して提供し、より良いユーザー体験を実現できるよう、様々な共通機能や顧客基盤を構築していくことを目的としています。

![[Mirror]不確実な事業環境を突破した、成長企業6社独自のエンジニアリング](/assets/banners/thumb1.png)