OpenAI vs Google Image Editing Showdown – Testing the "Consistency" of gpt-image-1 and Gemini 2.5 Flash Image

OpenAI vs Google Image Editing Showdown

Testing the Consistency of gpt-image-1 and Gemini 2.5 Flash Image

In recent years, OpenAI's ChatGPT, Google's Gemini, and Anthropic's Claude have emerged as major players in generative AI. Among these, OpenAI and Google offer AI models with image generation and editing capabilities.

This article focuses on OpenAI's gpt-image-1 (as of July 2025) and Google's Gemini 2.5 Flash Image (nicknamed Nano Banana, Web UI as of August 2025) to compare them through actual output examples with an emphasis on image consistency and Japanese text handling.

1. What Are gpt-image-1 and Flash Image?

-

gpt-image-1 (OpenAI)

The underlying model for ChatGPT's 4o ImageGeneration. It has powerful generation and editing capabilities and supports inpainting (mask-based editing).

Note that full functionality requires API access. -

Gemini 2.5 Flash Image (Google)

The model has a fast, lightweight image generation feature that supports generation using reference images. One of the notable characteristics of the model (nicknamed Nano Banana) is its accessibility with a free user account.

2. A Weakness of Image Generation AI: Consistency

When repeatedly generating and editing images with AI tools, you may often face a problem where the image appearance gradually drifts from the original one.

This so-called lack of consistency results in changes in faces, body shapes, clothing textures, and background structures in an image as the number of generation iterations increases.

- Flash Image (August 2025) is relatively stable in this regard and became a topic of discussion on social media immediately after its release.

- gpt-image-1 also improved editing consistency through the input_fidelity parameter introduced in July 2025.

3. Output Comparison 1: Editing Human Poses

Task: Naturally seat the people from a family photo on the right side in a vehicle's back seat in an image on the left side.

|

|

|---|

3-1. gpt-image-1

Since gpt-image-1 cannot directly reference multiple images (placing people from image B into image A), preprocessing with a rough composite was performed beforehand.

The family photo was roughly cut out and overlaid on the seat image. Of course, input_fidelity = high was set.

Prompt I Used

Make this family photo look natural and realistic:

- Fix lighting to match car interior lighting

- Add natural shadows under people

- Adjust color temperature to match

- Make people look naturally seated

- Blend edges smoothly

- Keep faces unchanged but make them fit the scene

- Add subtle reflections on windows if visible

Output Example

Impressions: While there are differences in clothing details and vehicle interiors, the sitting posture and shadow placement looked sufficiently natural only in a single generation. However, the color tone appears to have become yellowish. Facial consistency does not seem to be well maintained.

3-2. Gemini 2.5 Flash Image

Using the reference image feature, the seat image and family photo were specified.

I entered the following prompt for the same purpose of image generation using gpt-image-1.

Prompt Used

In the image of the car's back seat, place the three people from the provided family photo, making it look natural as if they are sitting together.

- Fix lighting to match car interior lighting

- Add natural shadows under people

- Adjust color temperature to match

- Make people look naturally seated

- Blend edges smoothly

- Keep faces unchanged but make them fit the scene

- Add subtle reflections on windows if visible

- Make clothing wrinkles look natural for sitting position

Output Examples (Selection)

|

|

|---|---|

|

|

Impressions: While facial consistency is high, there appears to be some variation in balance.\

3-3. Summary of Human Editing

- gpt-image-1: Requires preprocessing effort but enables high-precision compositing.

- Flash Image: Easy to achieve high quality with the reference image feature. Sitting posture and people's size become sufficiently adjusted with a few generation retries.

- Common: Background consistency for both interiors and lighting are good.

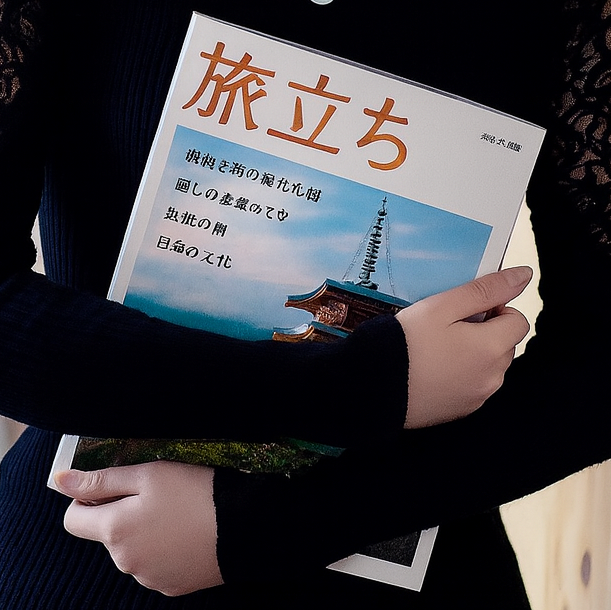

4. Output Comparison 2: Editing with Text (Japanese)

Concern: In addition to consistency, generative AI often struggle with precise reproduction of Japanese text.

Therefore, we compared AI models by assigning them to the task of replacing the magazine cover held by a person with a Japanese title and feature text.

Prompt I Used (in Japanese)

手に抱えている雑誌を以下の内容に置き換えてください。

- 日本の雑誌で、「旅立ち」というタイトル

- おしゃれでモダンな方向性

- 寺院の特集で表紙はお寺の写真をフィーチャー

- 表紙にはコンテンツ紹介の文言をレイアウト

*Note: The prompt was written in Japanese from the beginning to the end because we wanted the magazine title to be converted into Japanese.

4-1. gpt-image-1 (Using the Inpainting Feature)

Output Examples\

|

|

|---|---|

|

|

Impressions: The magazine title Tabidachi was accurately generated in Japanese. However, smaller feature text is prone to be distorted.

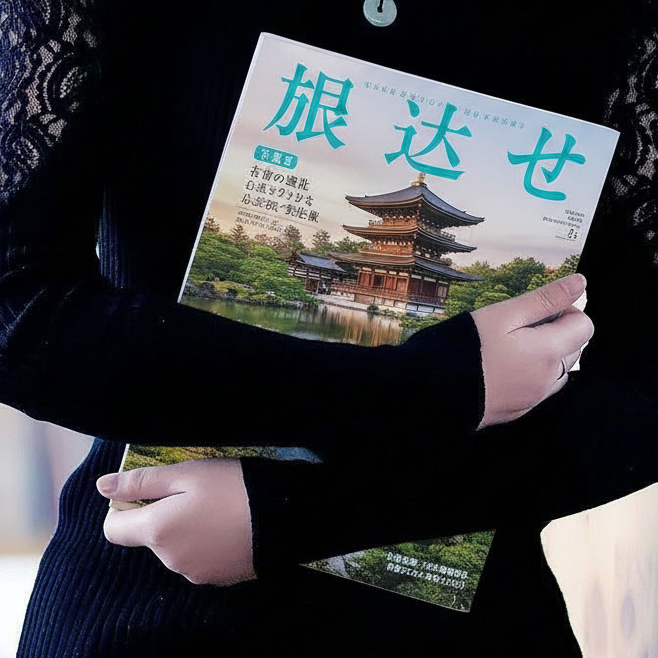

4-2. Flash Image (Only Unsing the Above Prompt)

Since inpainting is not supported, I executed image generation, specifying the entire image via prompt. The prompt content was same as the above-mentioned one.

Output Examples

|

|

|---|---|

|

|

Impressions: While the appearance is reproduced, the precision of generating detailed Japanese text falls slightly behind gpt-image-1.

5. Conclusions and Use Cases

| Aspect | gpt-image-1 | Flash Image 2.5 |

|---|---|---|

| Title reproduction | Accurately outputs in Japanese | Appearance-based |

| Small text reproduction | Prone to distortion | Difficult |

6. Results Summary

| Aspect | gpt-image-1 | Flash Image 2.5 |

|---|---|---|

| Consistency | High (enhanced with input_fidelity) | High (stable with reference images) |

| Editing features | Supports mask editing | Supports reference images |

| Japanese text | Japanese titles is properly reproduced | Appearance-based |

| Convenience | Used via API (for advanced users) | Easily accessible via Web UI |

Key Takeaways

- Choose gpt-image-1 for balance, precision, and control.

- Choose Flash Image for convenience and speed.

- As for Japanese text, both models can generate text that looks like Japanese, but their precision of generating Japanese small text and body sentence still needs improvements.

Both are highly polished, and particularly in terms of maintaining the overall appearance without degradation, they are leagues ahead of previous generation models.

Continuing for our verification, we plan to share findings on prompt design, generation parameter tuning, and testing of new models in the future.

関連記事 | Related Posts

OpenAI vs Google Image Editing Showdown – Testing the "Consistency" of gpt-image-1 and Gemini 2.5 Flash Image

AI画像編集ツール徹底比較!どれが一番自然? Google・OpenAI・ByteDanceの最新モデルを検証【2025秋】

画像生成AIでテックブログのカバー画像を自動生成する方法

![Cover Image for AI Image Editing Tool Comparison! Which is the Most Realistic Image Generator through Testing the Latest Models: Google, OpenAI, and ByteDance? [Fall 2025]](/assets/blog/authors/aoshima/image-edit-evaluation/title.jpg)

AI Image Editing Tool Comparison! Which is the Most Realistic Image Generator through Testing the Latest Models: Google, OpenAI, and ByteDance? [Fall 2025]

仕事で使える画像生成AI入門 – 「クオリティ」と「安全性」を両立する、現場目線のはじめの一歩 –

ChatGPTに全任せ!AIとつくる、バーチャルキャラクター映像(Midjourney・Runway)

We are hiring!

【UI/UXデザイナー】クリエイティブ室/東京・大阪・福岡

クリエイティブGについてKINTOやトヨタが抱えている課題やサービスの状況に応じて、色々なプロジェクトが発生しそれにクリエイティブ力で応えるグループです。所属しているメンバーはそれぞれ異なる技術や経験を持っているので、クリエイティブの側面からサービスの改善案を出し、周りを巻き込みながらプロジェクトを進めています。

生成AIエンジニア/AIファーストG/東京・名古屋・大阪・福岡

AIファーストGについて生成AIの活用を通じて、KINTO及びKINTOテクノロジーズへ事業貢献することをミッションに2024年1月に新設されたプロジェクトチームです。生成AI技術は生まれて日が浅く、その技術を業務活用する仕事には定説がありません。

![[Mirror]不確実な事業環境を突破した、成長企業6社独自のエンジニアリング](/assets/banners/thumb1.png)