Aurora MySQL でレコードが存在するのに SELECT すると Empty set が返ってくる事象を調査した話

こんにちは。 KINTO テクノロジーズの DBRE チーム所属の@p2skです。

DBRE(Database Reliability Engineering)チームでは、横断組織としてデータベースに関する課題解決や、組織のアジリティとガバナンスのバランスを取るためのプラットフォーム開発などを行なっております。DBRE は比較的新しい概念で、DBRE という組織がある会社も少なく、あったとしても取り組んでいる内容や考え方が異なるような、発展途上の非常に面白い領域です。

弊社における DBRE の取り組み例としては、あわっち(@_awache)による DBRE ガードレール構想の実現に向けた取り組みについてというテックブログや、今年の AWS Summit の登壇内容を是非ご覧ください。

今回の記事は、データベースに関する課題解決の事例として「Aurora MySQL でレコードが存在するのに SELECT すると Empty set が返ってくる」という不思議な事象を調査した話をご紹介します。

発生した事象

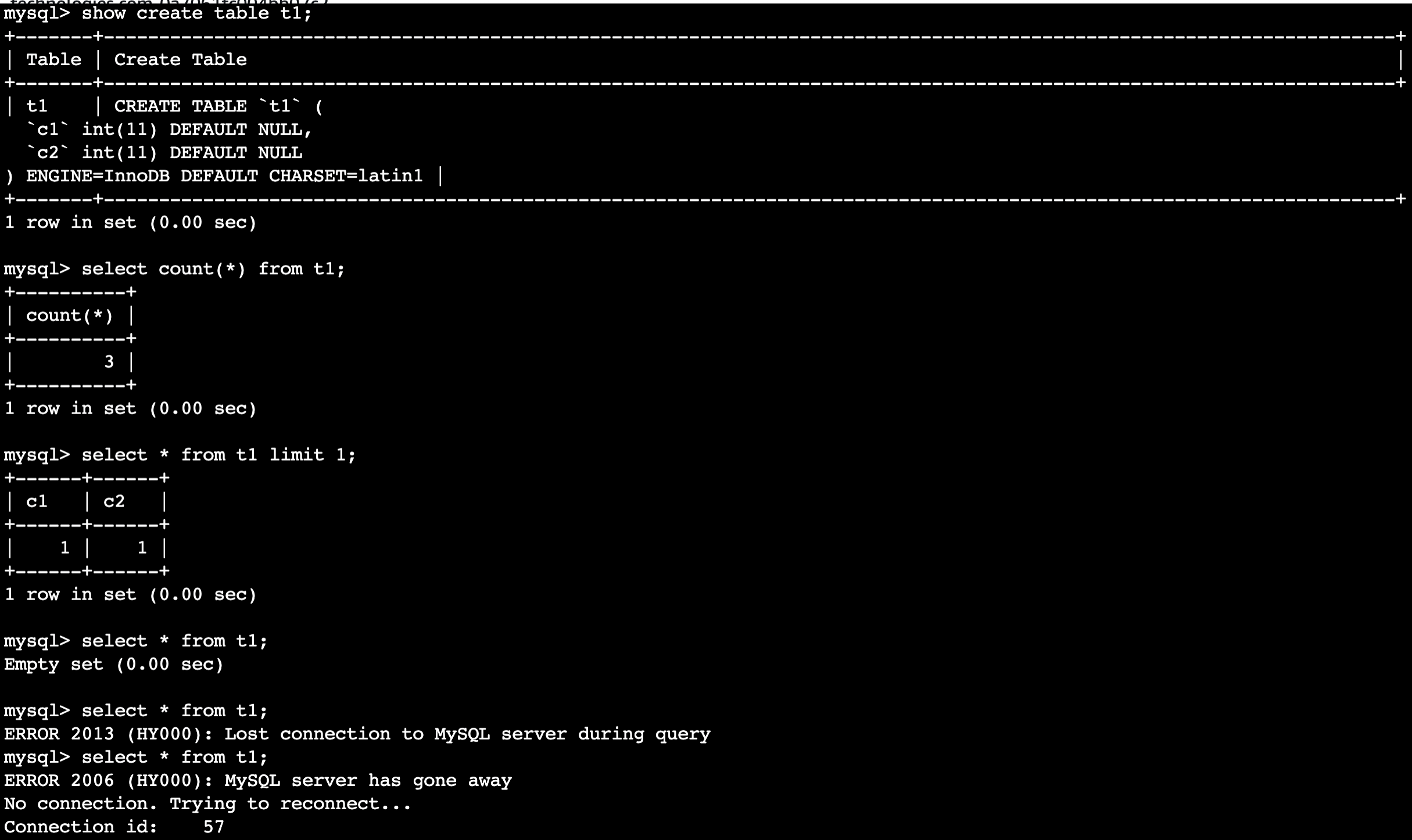

プロダクトの開発者から、「踏み台サーバーからデータベースに対して特定のクエリを実行すると挙動がおかしくなる」という問い合わせを受けました。データベースは Aurora MySQL 2.07.2 (MySQL 5.7.12)を使っており、 MySQL クライアントのバージョンは 5.7.38 for Linux (x86_64) でした。その時に共有してもらった挙動のイメージは以下の画像の通りです。

画像の中にある通り、レコードが存在しているテーブルに対して

select * from t1;

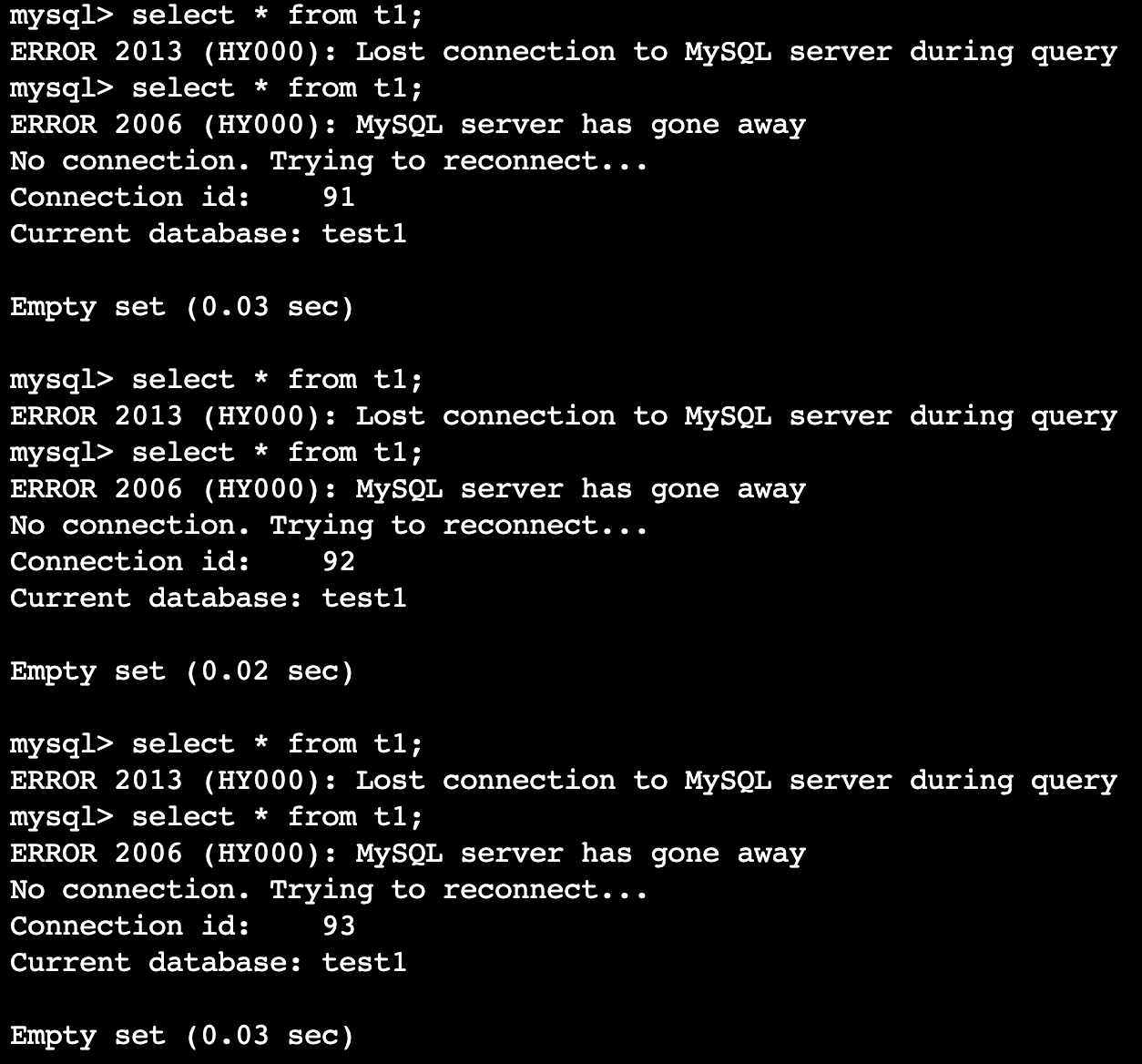

という全レコードを取得するクエリを実行したところ、Empty set が返ってきます。また、その直後にクエリを実行すると ERROR 2013 (HY000): Lost connection to MySQL server during query というエラーが返ってきます。さらにその後は ERROR 2006 (HY000): MySQL server has gone away No connection. Trying to reconnect... というエラーが返ってきました。それ以降は、以下の画像のように Empty set / ERROR 2013 / ERROR 2006 のループになります。

一方で、

select * from t1 limit 1;

というクエリの場合は、期待通り 1 レコードが返ってきました。

この時点では原因について全く思い当たる節が無く、また別の環境で再現する方法も分からない状態でした。幸い、事象が再現するテーブルが複数あったので、様々な条件で再現の有無や事象解消の有無について調査を実施しました。

調査の実施

再現の有無を調査

以下のクエリ達は、事象が発生するクエリと取得対象のデータは同じ(全レコード、全カラム)ですが、全て問題なく結果が返ってきました。

select c1, c2 from t1; -- 全カラム指定

SELECT * FROM t1; -- 予約語を大文字にして実行

Select * from t1; -- 最初の1文字だけ大文字にして実行

他にも、以下のような確認を実施しました。

- ライターインスタンスでは再現するが、リーダーインスタンスでは再現しない

- ライターインスタンスでも、同一データベース内で再現するテーブルと再現しないテーブルがある

- MySQL クライアントを 8.0 系に変えると再現しない

- テーブルを構成するカラムに特殊なものはなく、入っているデータもおかしい点は無さそう

事象解消の有無を調査

続いて、データやメタデータを変更することで事象が解消するかを調査しました。結果は以下の通りです。

- 再現するテーブルを対象に analyze table を実行しても、解消しなかった

- テーブルを新規作成し、ダンプファイルから同じデータを投入した場合、解消した

- 再現するテーブルのダンプファイルを作成後、 DROP & CREATE で同名のテーブルを再作成し、ダンプファイルからデータを投入した場合、解消した

- 再現するテーブルのレコードを全件 DELETE 後にダンプファイルから同じデータを投入した場合、解消した

Aurora のアーキテクチャを踏まえた切り分けの実施

ここまでの調査ですと、テーブル再作成で解消するためデータに問題があるようにも見えますし、MySQL クライアントを 8.0 系に変えて解消するためデータには問題がないようにも見えます。そこで、Aurora のアーキテクチャを改めて確認しました。

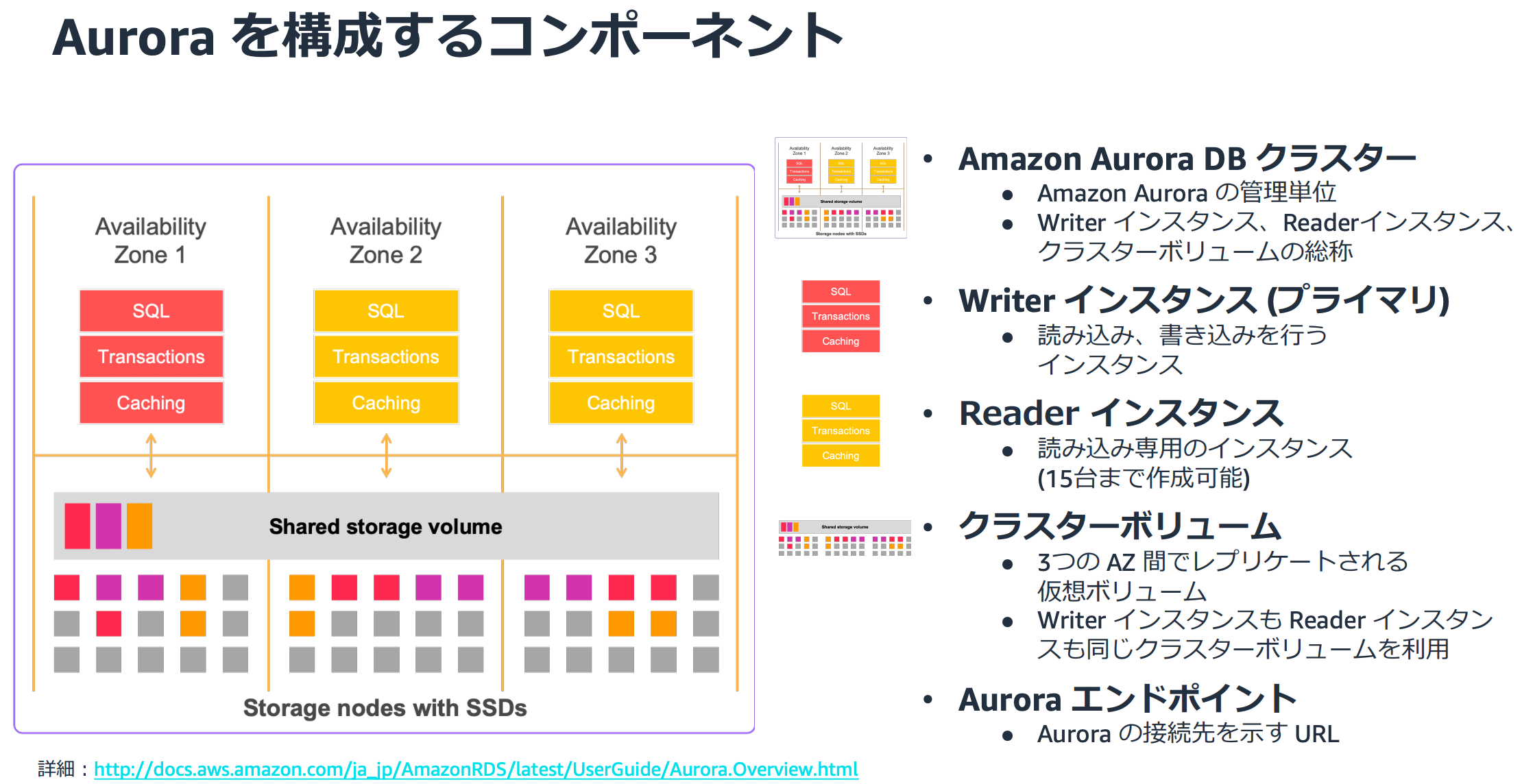

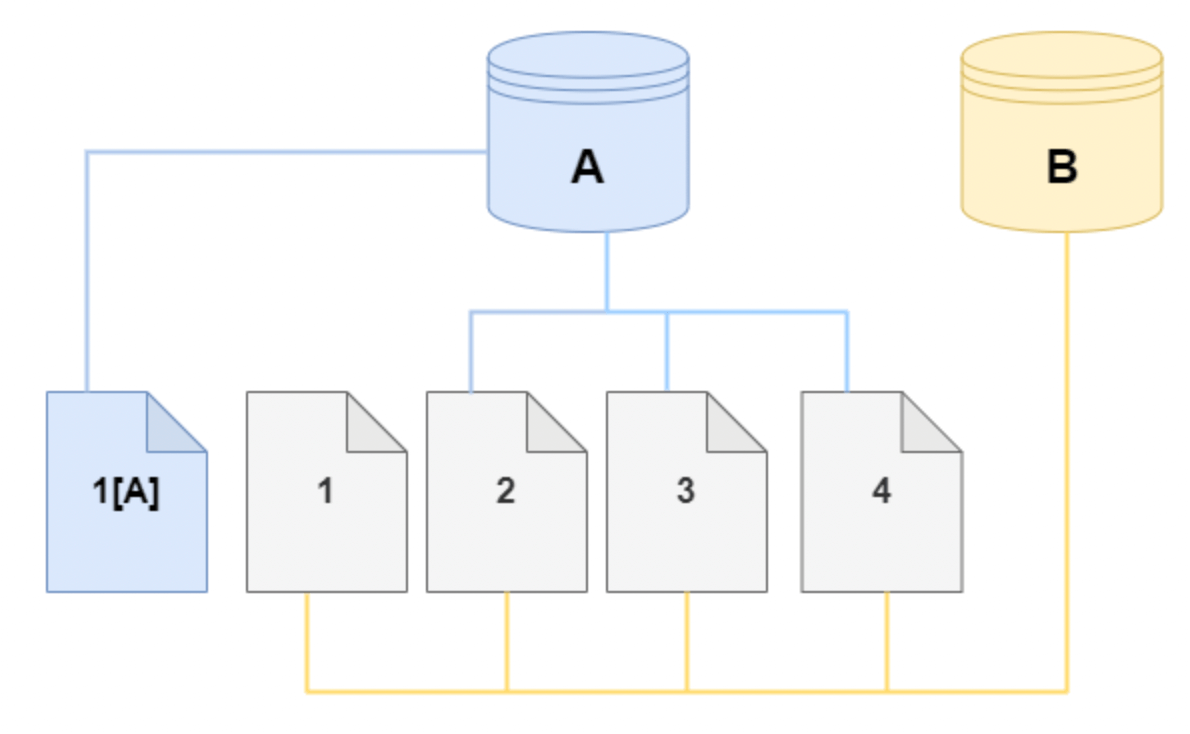

こちらの AWS 公式資料によると、以下のことが確認できます。

- Aurora のコンピュートレイヤとストレージレイヤは完全に分離されている

- ライターインスタンスもリーダーインスタンスも同一のクラスターボリュームを参照する

最もわかりやすい図を下図に引用しました。

このアーキテクチャを踏まえて、コンピュートレイヤとストレージレイヤのどちらが関係していそうかを切り分けるために、Aurora クローンを作成して再現の有無を確認しました。

クローンを作成しても、データはコピーされずにクローン元と同一のストレージを参照し続けます。

下図のように、どちらかのクラスタで新たなデータ更新が行われた時だけ新しいデータページが作成されますが、更新が行われない限り、ストレージレイヤに変更はありません。

作成したクローンに接続して同様のクエリを実行したところ、事象は再現しませんでした。したがって、ストレージレイヤは今回の問題とは無関係な可能性が高いと判断しました。ライターインスタンスでは再現するが、リーダーインスタンスでは再現しないという結果もこの判断を補強してくれそうです。

ということで、Aurora のコンピュートレイヤが今回の事象に関連していると推定しました。コンピュートレイヤが保持している何らかのデータに関連があると考え、改めてアーキテクチャ図を確認したところキャッシュ機構の関連性を疑いました。

現在の設定がどうなっているかを以下のクエリで確認したところ、クエリキャッシュは有効化されていました。

select @@session.query_cache_type;

そこで、以下のようにクエリキャッシュをセッションレベルで無効化したときに事象が再現するか確認しました。

set session query_cache_type = 1; -- クエリキャッシュON

select @@session.query_cache_type; -- 確認

SELECT * FROM t1; -- 再現しなかった

select * from t1; -- 再現した

set session query_cache_type = 0; -- クエリキャッシュOFF

select @@session.query_cache_type; -- 確認

SELECT * FROM t1; -- 再現しなかった

select * from t1; -- 再現しなかった(!)

ということで、クエリキャッシュを無効化すると事象が再現しなくなることが確認できました。MySQL 8.0 ではクエリキャッシュは廃止されているため、8.0 系のクライアントを使うと事象が再現しなかったという結果も納得できます。

また、クエリキャッシュを RESET すると、クエリキャッシュを ON にしていても再現しなくなりました。ちなみに FLUSH QUERY CACHE だと引き続き再現しました。 RESET でキャッシュを削除してあげる必要があるようです。

set session query_cache_type = 1; -- クエリキャッシュON

select @@session.query_cache_type; -- 確認

RESET QUERY CACHE; -- クエリキャッシュのリセット

SELECT * FROM t1; -- 再現しなかった

select * from t1; -- 再現しなかった

これまでの結果から、今回の事象はクエリキャッシュに関連していることが分かりました。

似た事例の調査

原因の切り分けが進んだところで、似た事例が報告されていないかを調査しました。その結果、こちらのバグレポートに辿り着きました。内容としてはバグレポートのタイトル「Query caching with two different clients causes errors」にある通り、2種類のバージョンの MySQL クライアントを使うと、片方のバージョンでキャッシュされた内容にもう片方からもアクセスしようとしてエラーになる、というものです。

こちらのレポートをもとに事象を再現できるか試したところ、バージョン 5.6.35 と 5.7.38 を使った場合に再現することができました。再現手順を記事末尾の appendix に記載しておりますので、ご興味のある方はお試しください。(appendix では 5.7.41 を使っていますが、再現します。)

異なるバージョンの MySQL クライアントを使用した可能性について、担当者に確認したところ「踏み台サーバーを新しく構築したタイミングで事象が発生するようになった」ということが分かりました。以前使っていた踏み台サーバーの MySQL クライアントまでは分からなかったので断定はできませんが、バグレポートの内容と起きている事象は同じです。したがって、今回の原因は異なる MySQL クライアントで select * from t1 というクエリが実行されてキャッシュされたことで、エラーにつながった可能性が非常に高いと判断しました。

対応策の検討

事象が発生してしまった場合は、 RESET QUERY CACHE を実行するのが最も簡単な解消方法ですが、そもそも事象が発生しなくなる方法についても検討しました。

試しに Aurora MySQL のバージョンを 2.07.2 からバージョンアップした時の発生有無について調査しました。その結果、2.07.x 系の最新パッチバージョンである 2.07.9 だと引き続き事象が再現しました。しかし、マイナーバージョンも上げて 2.11.x 系で試したところ、 2.11.1 でも 2.11.2 でも事象が発生しなくなりました。マイナーバージョンアップに伴って、クエリキャッシュに関連した何らかの修正が入った可能性があります。したがって予防策としては Aurora のバージョンを 2.11.x 系にバージョンアップすると良さそうです。

まとめ

本記事では、 DBRE 活動の一環として行なっているデータベースに関する課題解決の事例として「Aurora MySQL でレコードが存在するのに SELECT すると Empty set が返ってくる」という不思議な事象を調査した話をご紹介しました。原因は、クエリキャッシュが有効化された Aurora MySQL 2.07.x 系に対して異なる MySQL クライアントから同じクエリを実行すると結果がおかしくなるという MySQL のバグによるものでした。事象発生時の解消方法としては(一時的なパフォーマンス劣化に注意は必要なものの) RESET QUERY CACHE を実行するのが最も簡単な方法です。また、Aurora 2.11.x 系では事象の発生が確認できなかったため、Aurora のバージョンアップを実施するのが最も確実な対応かと思います。もしくは 2024 年 10 月 31 日には Aurora 2 系がサポート終了となるため、早期に Aurora 3 系へとバージョンアップするのも手かと思います。

そもそもかなりレアケースですので、あまり気にする必要はないかもしれませんが、どなたかの参考になれば幸いです。なお、今回の調査はいろいろな方のご協力によって行うことができました。

KINTO テクノロジーズ DBRE チームでは一緒に働いてくれる仲間を絶賛募集中です!カジュアルな面談も歓迎ですので、 少しでも興味を持っていただけた方はお気軽に Twitter DM 等でご連絡ください。併せて、弊社の採用 Twitter もよろしければフォローお願いします!

Appendix : 再現手順

以下の再現手順は、OS が Amazon Linux 2 の踏み台サーバー上で動作することを確認しています。また、Aurora MySQL のバージョンは 2.07.x 系であることを前提とします。(全てのパッチバージョンで再現するかは確認しておりませんが、少なくとも最新のパッチバージョン 2.07.9 での再現は確認しています。)

まず、踏み台サーバーに接続し、MySQL 5.6 系のクライアント(5.6.35)をインストールします。

sudo mkdir -pvm 2755 /usr/local/mysql-clients-56;

sudo curl -LO https://dev.mysql.com/get/Downloads/MySQL-5.6/mysql-5.6.35-linux-glibc2.5-x86_64.tar.gz;

sudo tar -zxvf mysql-5.6.35-linux-glibc2.5-x86_64.tar.gz -C /usr/local/mysql-clients-56/;

cd /usr/local/mysql-clients-56/;

sudo mv -v mysql-5.6.35-linux-glibc2.5-x86_64 mysql56;

sudo ln -s /usr/local/mysql-clients-56/mysql56/bin/mysql /usr/local/bin/mysql56

次に、MySQL 5.7 系のクライアント(5.7.41)をインストールします。

sudo mkdir -pvm 2755 /usr/local/mysql-clients-57;

sudo curl -LO https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-5.7.41-linux-glibc2.12-x86_64.tar.gz;

sudo tar -zxvf mysql-5.7.41-linux-glibc2.12-x86_64.tar.gz -C /usr/local/mysql-clients-57/;

cd /usr/local/mysql-clients-57/;

sudo mv -v mysql-5.7.41-linux-glibc2.12-x86_64 mysql57;

sudo ln -s /usr/local/mysql-clients-57/mysql57/bin/mysql /usr/local/bin/mysql57

そのまま MySQL56 でデータベースに接続します。

mysql56 -h xxx -u xxx -p

サンプルのデータベースとテーブルを作成し、データを INSERT します。

create database d1;

use d1;

create table t1 (c1 int, c2 int);

insert into t1 (c1, c2) values (1, 1);

insert into t1 (c1, c2) values (2, 2);

insert into t1 (c1, c2) values (3, 3);

クエリキャッシュをセッションレベルで有効化し、クエリを発行してキャッシュさせます。

set session query_cache_type = 1;

select * from t1;

次に、別のウインドウから同一の踏み台サーバーに接続し、 MySQL57 でデータベースに接続します。

mysql57 -h xxx -u xxx -p

クエリキャッシュをセッションレベルで有効化します。

use d1;

set session query_cache_type = 1;

MySQL56 から実行したクエリと 1 文字違いのクエリを実行すると、正常にデータが返ってきます。

Select * from t1;

MySQL56 から実行したクエリと同じクエリを実行すると、 Empty set が返ってきます。

select * from t1;

これで事象が再現できました。

解消するためには、クエリキャッシュをリセットします。

RESET QUERY CACHE;

関連記事 | Related Posts

Aurora MySQL でレコードが存在するのに SELECT すると Empty set が返ってくる事象を調査した話

Developing a System to Investigate Lock Contention (Blocking) Causes in Aurora MySQL

What is the cause of invisible errors? Concerning the issue observed in Amazon Aurora MySQL 2, where the mysqldump command unexpectedly terminates without displaying an error message, attributed to the database collation.

Aurora MySQL におけるロック競合(ブロッキング)の原因を事後調査できる仕組みを作った話

Event Report: DBRE Summit 2023

Investigating the Cause of a Deadlock in Aurora MySQL 3 series in a Production Environment

We are hiring!

【SRE】DBRE G/東京・大阪・名古屋・福岡

DBREグループについてKINTO テクノロジーズにおける DBRE は横断組織です。自分たちのアウトプットがビジネスに反映されることによって価値提供されます。

【クラウドエンジニア】Cloud Infrastructure G/東京・大阪・福岡

KINTO Tech BlogWantedlyストーリーCloud InfrastructureグループについてAWSを主としたクラウドインフラの設計、構築、運用を主に担当しています。

![[Mirror]不確実な事業環境を突破した、成長企業6社独自のエンジニアリング](/assets/banners/thumb1.png)