Investigating Why Aurora MySQL Returns “Empty set” in Response to SELECT, Even Though Corresponding Records Exist

Hello, p2sk from the KINTO Technologies DBRE team here.

In the DBRE (Database Reliability Engineering) team, our cross-functional efforts are dedicated to addressing challenges such as resolving database-related issues and developing platforms that effectively balance governance with agility within our organization. DBRE is a relatively new concept, so very few companies have dedicated organizations to address it. Even among those that do, there is often a focus on different aspects and varied approaches. This makes DBRE an exceptionally captivating field, constantly evolving and developing.

For some great examples of KINTO Technologies’ DBRE activities, check out (@_awache)'s AWS Summit presentation from this year and Tech Blog article about working toward making the DBRE guardrail concept a reality.

In this article, I’m going delve into our database problem solving case files and share the details of our investigation into an intriguing issue. We encountered a peculiar situation where Aurora MySQL returned an "Empty set" in response to a SELECT query, despite the presence of corresponding records.

The issue

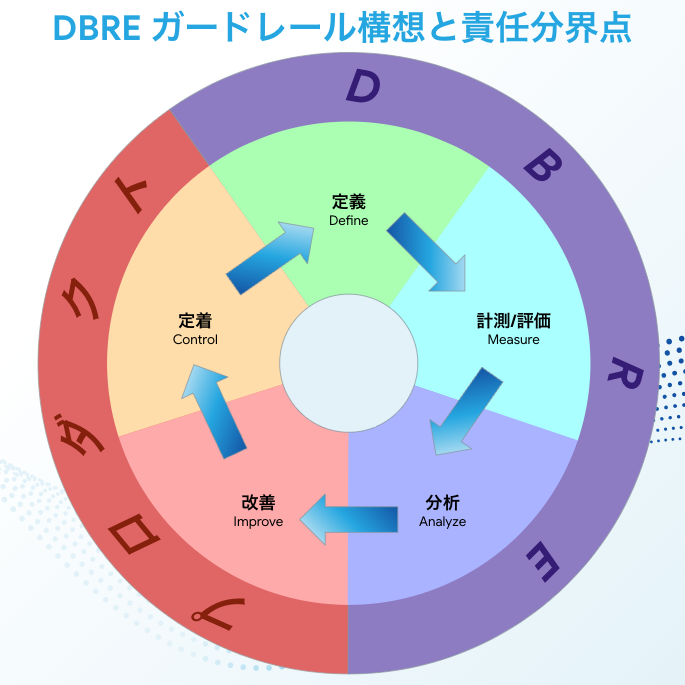

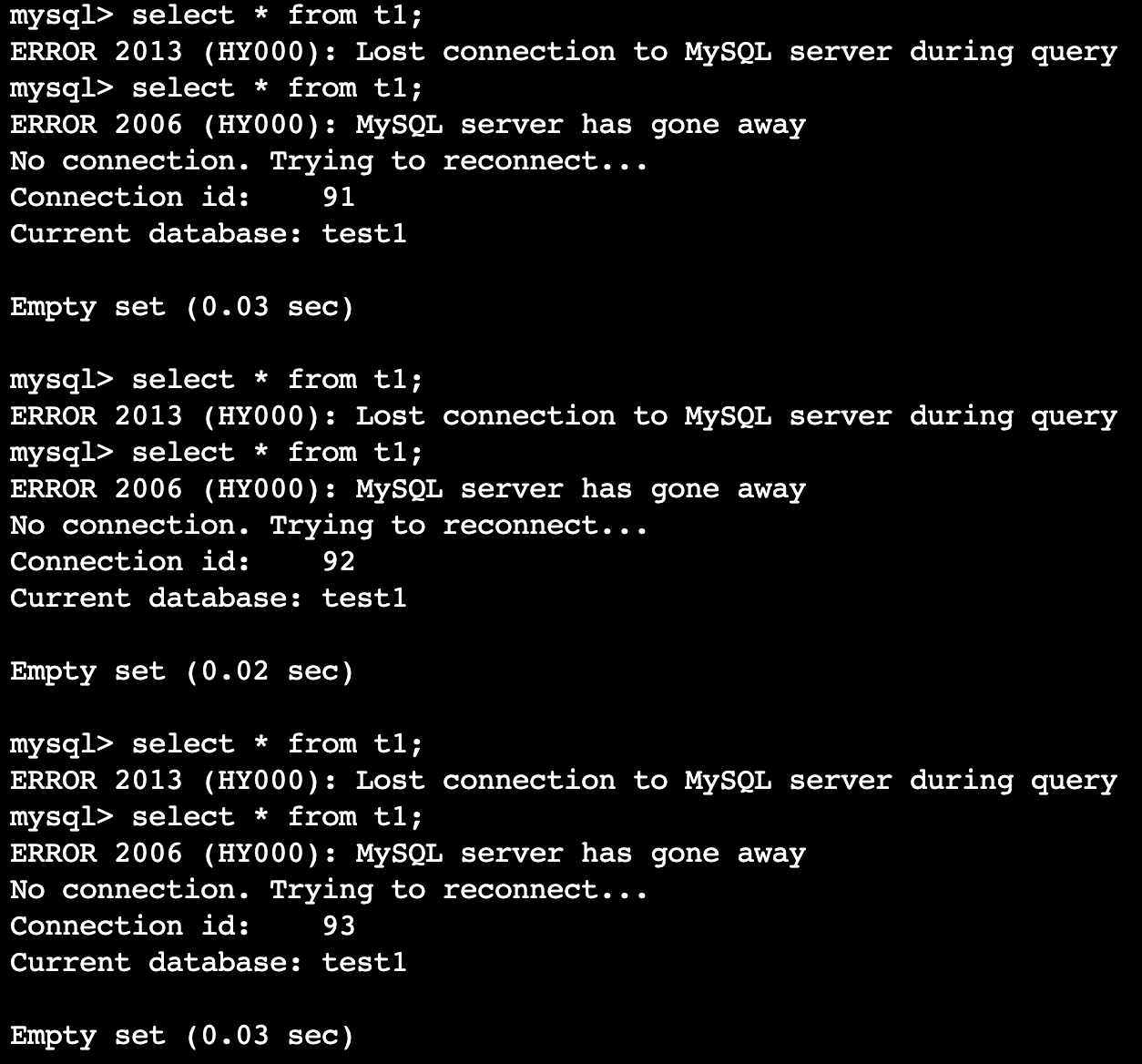

We had an inquiry from a product developer about some strange behavior they were experiencing when certain queries were sent from the jump server to the database. The database they were using was Aurora MySQL 2.07.2 (MySQL 5.7.12), and their MySQL client version was 5.7.38 for Linux (x86_64). Below, you can find an image they shared with us at that time, illustrating the observed behavior.

As the image shows, even for a table with some records in it, running the query

select * from t1;

to retrieve all the records produces the response Empty set. In addition, running another query immediately after that results in ERROR 2013 (HY000): Lost connection to MySQL server during query. Then, that’s followed by ERROR 2006 (HY000): MySQL server has gone away No connection. Trying to reconnect... From then on, the system will be stuck in a loop of Empty set, ERROR 2013, and ERROR 2006, as the image below shows.

Meanwhile, the query

select * from t1 limit 1;

returns 1 record as expected.

At this point, we had no idea what could be causing the issue, or how we could reproduce it in a different environment. Fortunately, the anomalous behavior was observed in multiple tables, providing us with an opportunity to investigate its reproducibility and explore potential resolutions under various conditions.

Investigating the issue

Reproducibility

Despite the fact that the data (including all records and columns) to be retrieved was identical to the query that triggered the issue, all subsequent queries returned results without any problems:

select c1, c2 from t1; -- Specify all columns.

SELECT * FROM t1; -- Run the query with the reserved words all capitalized.

Select * from t1; -- Run the query with only the first letter capitalized.

We also confirmed the following:

- The problem can be replicated when using the writer instance but not when using the reader one.

- Even when using the writer instance, the issue is reproduced for certain tables within the same database, but not others.

- It will not be reproduced if the MySQL client is changed to a client belonging to the 8.0 family

- There appear to be no particular peculiarities or abnormalities related to the columns in the tables or the data itself.

Resolvability

Next, we investigated whether changing the data or metadata could resolve the issue. Our findings were as follows:

- Running “analyze table” on tables that reproduced the issue didn’t resolve it.

- If we created a new table and imported the same data into it from a dump file, the issue was resolved.

- Doing the following resolved the issue: create dump files of the tables that reproduce it, then create new tables with the same names using DROP & CREATE, and import the data into them from the dump files.

- The issue was resolved if we deleted all the records from the tables that reproduced it, then imported the same data into them from a dump file.

Isolating the causes in light of Aurora’s architecture

In our investigations thus far, the fact that recreating the tables resolved the issue suggested there was a problem with the data, while the fact that switching to an 8.0-family MySQL client resolved it suggested that there wasn’t. So, we checked Aurora’s architecture once again.

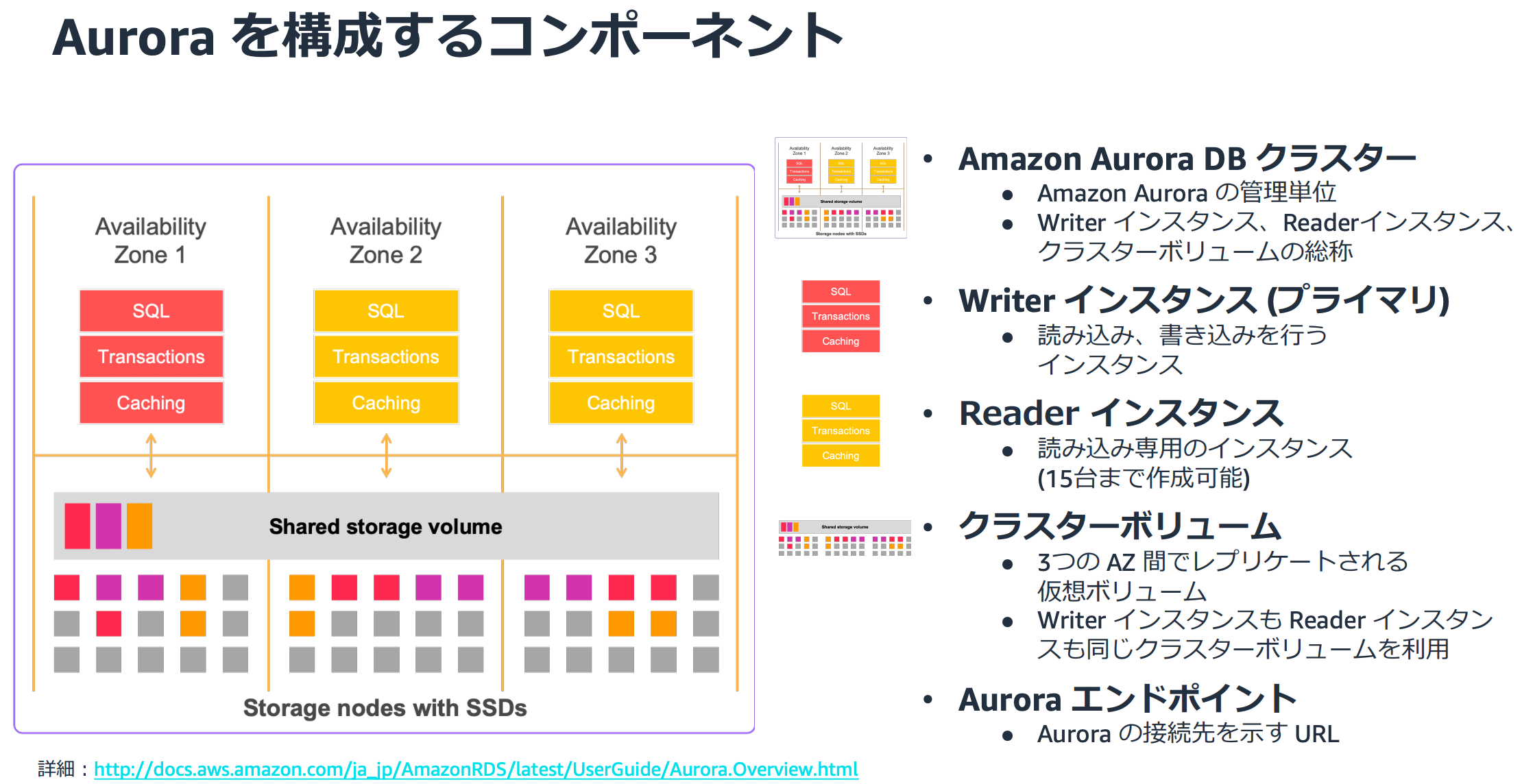

This official AWS document showed us the following:

- Aurora’s compute and storage layers are completely separate.

- The writer and reader instances both reference the same cluster volume.

The image cited below shows this in a very easy-to-understand way.

— Source: Overview of Amazon Aurora’s architecture

— Source: Overview of Amazon Aurora’s architecture

In light of this architecture, we created an Aurora clone and used it to check reproducibility, so as to identify whether the issue was related to the compute layer or the storage one.

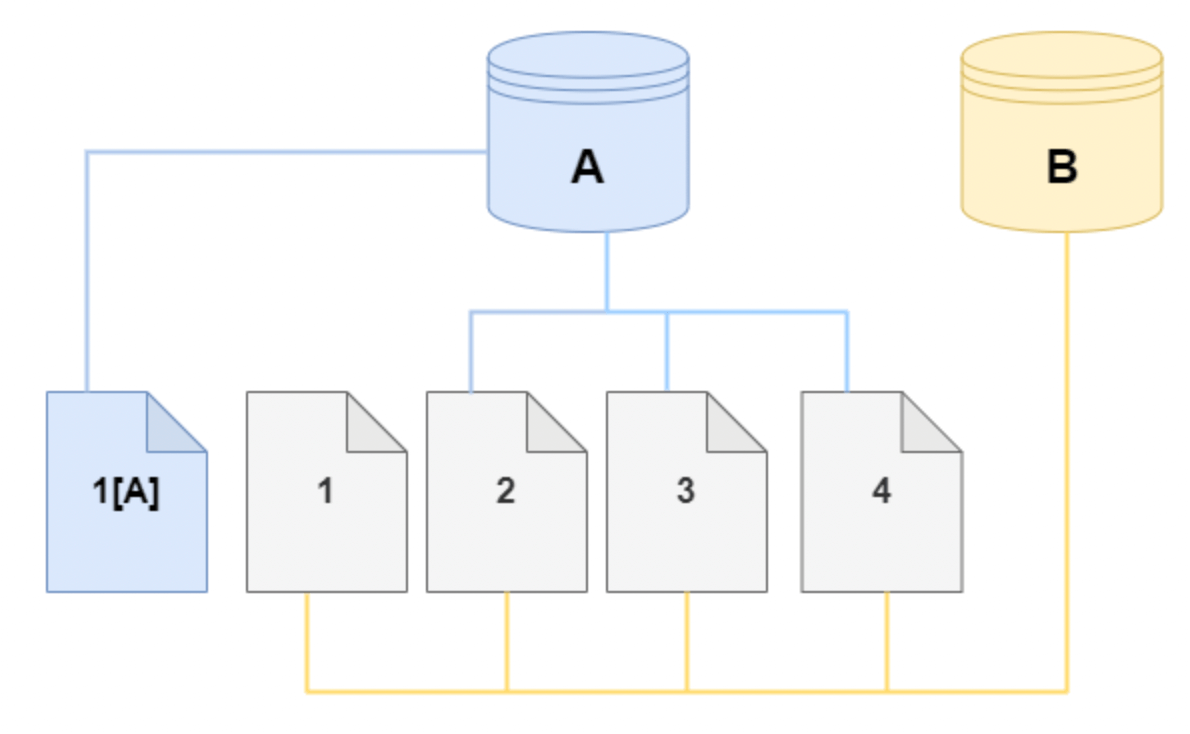

Even when you create a clone, the data doesn’t get copied. Instead, the clone continues to reference the same storage data as the original. As shown in the figure below, new data is only created when data is updated in one of the clusters, but there won’t be any changes in the storage layer unless an update is made.

— Source: How Aurora clones are created

— Source: How Aurora clones are created

Connecting to the newly created clone and running the query reproduced the issue, so we concluded that the storage layer probably wasn’t involved. This conclusion was also supported by fact that the issue could be reproduced with the writer instance but not the reader one.

Based on this, we inferred that the issue had something to do with Aurora’s compute layer. Thinking it might be related to some kind of data held by the compute layer, we checked the architectural diagrams again. This led us to suspect that the cache management system might be involved.

Running the following query to see what the current settings were, we found that the query cache was enabled.

select @@session.query_cache_type;

Next, we checked to see if the issue was reproduced with the query cache disabled at the session level, as shown below.

set session query\_cache\_type = 1; -- Query cache ON.

select @@session.query\_cache\_type; -- Check.

SELECT * FROM t1; -- Wasn’t reproduced.

select * from t1; -- Was reproduced.

set session query\_cache\_type = 0; -- Query cache OFF.

select @@session.query\_cache\_type; -- Check.

SELECT * FROM t1; -- Wasn’t reproduced.

select * from t1; -- Wasn’t reproduced (!)

This confirmed that disabling the query cache stopped the issue. The query cache has been removed in MySQL 8.0, so this also clears up why the issue wasn’t reproduced with an 8.0-family client.

Also, running RESET on the query cache stopped the issue from occurring even when the latter was enabled. Incidentally, if FLUSH QUERY CACHE was run, the issue continued. This suggested that the cache needed to be deleted with RESET.

set session query_cache_type = 1; -- Query cache ON.

select @@session.query_cache_type; -- Check.

RESET QUERY CACHE; -- Reset the query cache.

SELECT * FROM t1; -- Wasn’t reproduced.

select * from t1; -- Wasn’t reproduced.

These results showed that the issue was related to the query cache.

Investigating similar examples

Having thus narrowed down the cause, we investigated whether any similar cases had been reported, and came across this bug report. As its title suggests, it says that errors occur if 2 versions of the MySQL client try to access each other’s caches.

We tried to reproduce the issue based on this report, and succeeded with versions 5.6.35 and 5.7.38. If you’re interested in trying it yourself, the procedure is outlined in the appendix. (Version 5.7.41 is used in the appendix, but the issue will still be reproduced.)

We asked the inquirers about whether different versions of the MySQL client might have been used, they told us that the issue had started when they’d created a new jump server. We didn’t know which MySQL client they’d used with their previous jump server so we couldn’t be sure, but the inquirers’ issue matched what was in the bug report. So, we concluded that the issue was very likely happening because select * from t1 queries were being executed and cached by different MySQL clients, leading to an error.

Considering countermeasures

The easiest way to resolve the issue if it occurs is to run RESET QUERY CACHE, but we also looked into ways to prevent it in the first place.

We tried updating Aurora MySQL from version 2.07.2 to a newer one to see if that would resolve it. The issue continued with the latest patch version of the 2.07.x release, version 2.07.9. However, when we also updated to a minor version and tried some 2.11.x ones, the issue stopped with both version 2.11.1 and version 2.11.2. This minor-version update may have included some kind of fix for the query cache. So, it looks like updating Aurora to a 2.11.x version might be a good way to prevent the issue.

Summary

In this article, I’ve illustrated our DBRE activities with an example from our database problem solving case files in which we investigated a strange issue where Aurora MySQL returned “Empty set” in response to SELECT even though corresponding records existed. The cause was a bug in MySQL that meant that erroneous results were given when different MySQL client versions sent the same query to Aurora MySQL 2.07.x with the query cache enabled. The easiest way to resolve it is to run RESET QUERY CACHE (although you do need to bear in mind that the performance will temporarily drop). We didn’t observe the issue with Aurora 2.11.x, so the best option might be to upgrade Aurora to a newer version. Alternatively, with support for Aurora version 2.x due to end on October 31, 2024, upgrading to Aurora version 3 early might also be a good idea.

It’s a pretty rare case in the first place so it might not warrant much attention anyway, but all the same, I do hope this article proves to be a useful reference for some readers. We couldn’t have done the investigation without lots of help from lots of people, so thank you everyone!

KINTO テクノロジーズ DBRE チームでは一緒に働いてくれる仲間を絶賛募集中です!カジュアルな面談も歓迎ですので、 少しでも興味を持っていただけた方はお気軽に Twitter DM 等でご連絡ください。併せて、弊社の採用 Twitter もよろしければフォローお願いします!

Appendix: Reproduction procedure

We’ve confirmed that the reproduction procedure will work on a jump server whose OS is Amazon Linux 2. It also assumes that a 2.07.x version of Aurora MySQL is used. (We haven’t confirmed whether the issue is reproduced with all patch versions, but have at least confirmed that it is with the latest one, 2.07.9.)

First, connect to the jump server and install the MySQL 5.6 client (5.6.35).

sudo mkdir -pvm 2755 /usr/local/mysql-clients-56;

sudo curl -LO https://dev.mysql.com/get/Downloads/MySQL-5.6/mysql-5.6.35-linux-glibc2.5-x86_64.tar.gz;

sudo tar -zxvf mysql-5.6.35-linux-glibc2.5-x86_64.tar.gz -C /usr/local/mysql-clients-56/;

cd /usr/local/mysql-clients-56/;

sudo mv -v mysql-5.6.35-linux-glibc2.5-x86_64 mysql56;

sudo ln -s /usr/local/mysql-clients-56/mysql56/bin/mysql /usr/local/bin/mysql56

Next, install the MySQL 5.7 client (5.7.41).

sudo mkdir -pvm 2755 /usr/local/mysql-clients-57;

sudo curl -LO https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-5.7.41-linux-glibc2.12-x86_64.tar.gz;

sudo tar -zxvf mysql-5.7.41-linux-glibc2.12-x86_64.tar.gz -C /usr/local/mysql-clients-57/;

cd /usr/local/mysql-clients-57/;

sudo mv -v mysql-5.7.41-linux-glibc2.12-x86_64 mysql57;

sudo ln -s /usr/local/mysql-clients-57/mysql57/bin/mysql /usr/local/bin/mysql57

Connect to the database with MySQL56.

mysql56 -h xxx -u xxx -p

Create a sample database and a sample table, and INSERT the data.

create database d1;

use d1;

create table t1 (c1 int, c2 int);

insert into t1 (c1, c2) values (1, 1);

insert into t1 (c1, c2) values (2, 2);

insert into t1 (c1, c2) values (3, 3);

Enable the query cache at the session level, and set it so that queries will be issued and cached.

set session query_cache_type = 1;

select * from t1;

Next, connect to the same jump server from a different window, then connect to the database with MySQL57.

mysql57 -h xxx -u xxx -p

Enable the query cache at the session level.

use d1;

set session query_cache_type = 1;

If you run a query that differs from the one from MySQL56 by 1 character, it returns the data successfully.

Select * from t1;

If you run the same query as the one from MySQL56, it returns Empty set.

select * from t1;

This procedure enabled us to reproduce the issue. To resolve it, reset the query cache.

RESET QUERY CACHE;

関連記事 | Related Posts

We are hiring!

【DBRE】DBRE G/東京・名古屋・大阪

DBREグループについてKINTO テクノロジーズにおける DBRE は横断組織です。自分たちのアウトプットがビジネスに反映されることによって価値提供されます。

【データエンジニア】分析G/東京・名古屋・大阪

分析グループについてKINTOにおいて開発系部門発足時から設置されているチームであり、それほど経営としても注力しているポジションです。決まっていること、分かっていることの方が少ないぐらいですので、常に「なぜ」を考えながら、未知を楽しめるメンバーが集まっております。